mirror of

https://gitee.com/dolphinscheduler/DolphinScheduler.git

synced 2024-11-30 19:27:38 +08:00

Merge branch 'dev' of https://github.com/apache/incubator-dolphinscheduler into dev

commit

91e563daeb

1

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@ -2,7 +2,6 @@

|

||||

name: Bug report

|

||||

about: Create a report to help us improve

|

||||

title: "[Bug][Module Name] Bug title "

|

||||

labels: bug

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

1

.github/ISSUE_TEMPLATE/feature_request.md

vendored

@ -2,7 +2,6 @@

|

||||

name: Feature request

|

||||

about: Suggest an idea for this project

|

||||

title: "[Feature][Module Name] Feature title"

|

||||

labels: new feature

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

@ -2,7 +2,6 @@

|

||||

name: Improvement suggestion

|

||||

about: Improvement suggestion for this project

|

||||

title: "[Improvement][Module Name] Improvement title"

|

||||

labels: improvement

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

1

.github/ISSUE_TEMPLATE/question.md

vendored

@ -2,7 +2,6 @@

|

||||

name: Question

|

||||

about: Have a question and want help

|

||||

title: "[Question] Question title"

|

||||

labels: question

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

2

.github/workflows/ci_backend.yml

vendored

@ -49,7 +49,7 @@ jobs:

|

||||

with:

|

||||

submodule: true

|

||||

- name: Check License Header

|

||||

uses: apache/skywalking-eyes@9bd5feb

|

||||

uses: apache/skywalking-eyes@ec88b7d850018c8983f87729ea88549e100c5c82

|

||||

- name: Set up JDK 1.8

|

||||

uses: actions/setup-java@v1

|

||||

with:

|

||||

|

||||

2

.github/workflows/ci_e2e.yml

vendored

@ -33,7 +33,7 @@ jobs:

|

||||

with:

|

||||

submodule: true

|

||||

- name: Check License Header

|

||||

uses: apache/skywalking-eyes@9bd5feb

|

||||

uses: apache/skywalking-eyes@ec88b7d850018c8983f87729ea88549e100c5c82

|

||||

- uses: actions/cache@v1

|

||||

with:

|

||||

path: ~/.m2/repository

|

||||

|

||||

2

.github/workflows/ci_ut.yml

vendored

@ -36,7 +36,7 @@ jobs:

|

||||

with:

|

||||

submodule: true

|

||||

- name: Check License Header

|

||||

uses: apache/skywalking-eyes@9bd5feb

|

||||

uses: apache/skywalking-eyes@ec88b7d850018c8983f87729ea88549e100c5c82

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }} # Only enable review / suggestion here

|

||||

- uses: actions/cache@v1

|

||||

|

||||

4

.gitignore

vendored

@ -7,6 +7,10 @@

|

||||

.target

|

||||

.idea/

|

||||

target/

|

||||

dist/

|

||||

all-dependencies.txt

|

||||

self-modules.txt

|

||||

third-party-dependencies.txt

|

||||

.settings

|

||||

.nbproject

|

||||

.classpath

|

||||

|

||||

54

README.md

@ -7,46 +7,44 @@ Dolphin Scheduler Official Website

|

||||

[](https://sonarcloud.io/dashboard?id=apache-dolphinscheduler)

|

||||

|

||||

|

||||

> Dolphin Scheduler for Big Data

|

||||

|

||||

[](https://starchart.cc/apache/incubator-dolphinscheduler)

|

||||

|

||||

[](README.md)

|

||||

[](README_zh_CN.md)

|

||||

|

||||

|

||||

### Design features:

|

||||

### Design Features:

|

||||

|

||||

Dolphin Scheduler is a distributed and easy-to-extend visual DAG workflow scheduling system. It dedicates to solving the complex dependencies in data processing to make the scheduling system `out of the box` for the data processing process.

|

||||

DolphinScheduler is a distributed and extensible workflow scheduler platform with powerful DAG visual interfaces, dedicated to solving complex job dependencies in the data pipeline and providing various types of jobs available `out of the box`.

|

||||

|

||||

Its main objectives are as follows:

|

||||

|

||||

- Associate the tasks according to the dependencies of the tasks in a DAG graph, which can visualize the running state of the task in real-time.

|

||||

- Support many task types: Shell, MR, Spark, SQL (MySQL, PostgreSQL, hive, spark SQL), Python, Sub_Process, Procedure, etc.

|

||||

- Support process scheduling, dependency scheduling, manual scheduling, manual pause/stop/recovery, support for failed retry/alarm, recovery from specified nodes, Kill task, etc.

|

||||

- Support the priority of process & task, task failover, and task timeout alarm or failure.

|

||||

- Support process global parameters and node custom parameter settings.

|

||||

- Support online upload/download of resource files, management, etc. Support online file creation and editing.

|

||||

- Support task log online viewing and scrolling, online download log, etc.

|

||||

- Implement cluster HA, decentralize Master cluster and Worker cluster through Zookeeper.

|

||||

- Support various task types: Shell, MR, Spark, SQL (MySQL, PostgreSQL, hive, spark SQL), Python, Sub_Process, Procedure, etc.

|

||||

- Support scheduling of workflows and dependencies, manual scheduling to pause/stop/recover task, support failure task retry/alarm, recover specified nodes from failure, kill task, etc.

|

||||

- Support the priority of workflows & tasks, task failover, and task timeout alarm or failure.

|

||||

- Support workflow global parameters and node customized parameter settings.

|

||||

- Support online upload/download/management of resource files, etc. Support online file creation and editing.

|

||||

- Support task log online viewing and scrolling and downloading, etc.

|

||||

- Implement cluster HA and decentralize the Master cluster and Worker cluster through Zookeeper.

|

||||

- Support the viewing of Master/Worker CPU load, memory, and CPU usage metrics.

|

||||

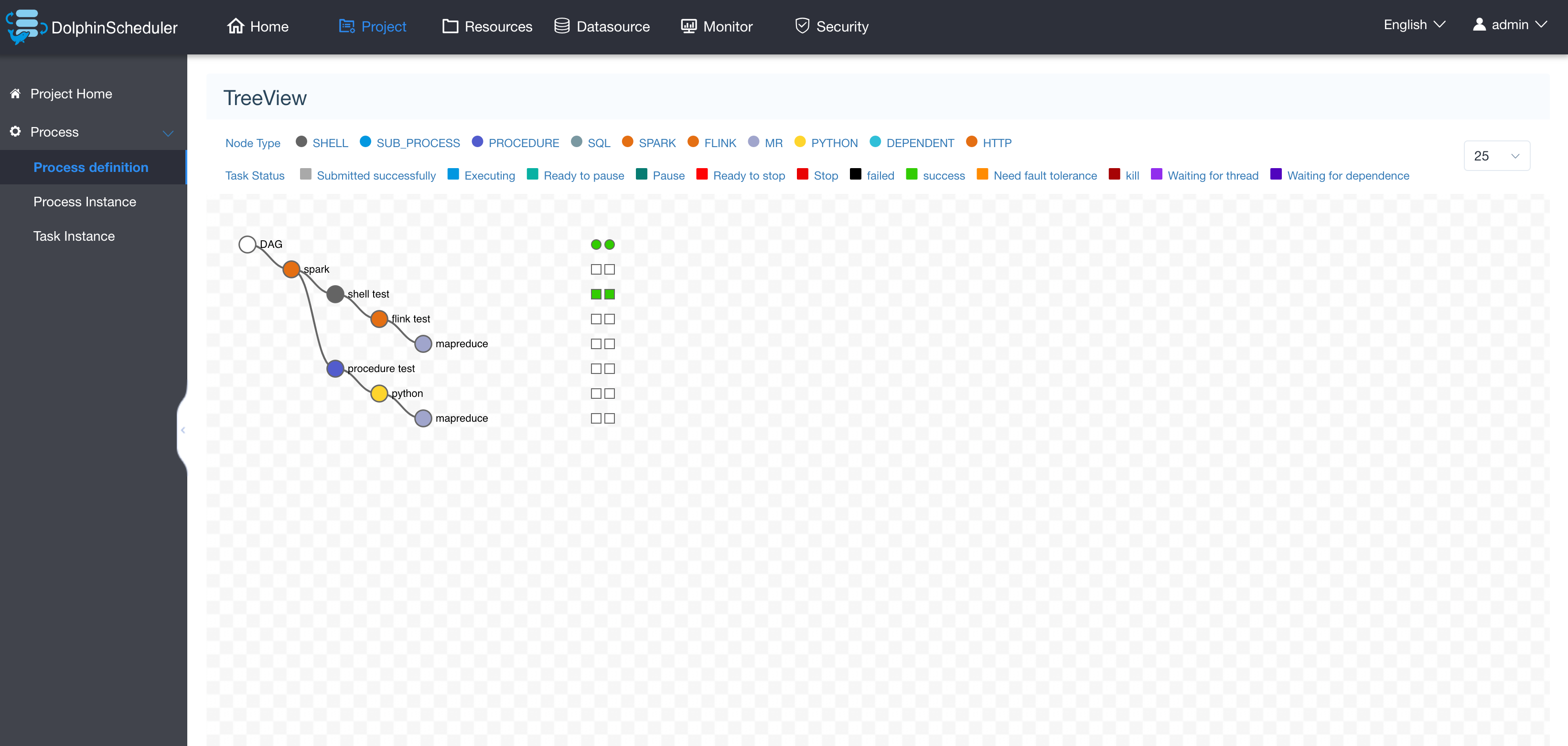

- Support presenting tree or Gantt chart of workflow history as well as the statistics results of task & process status in each workflow.

|

||||

- Support backfilling data.

|

||||

- Support displaying workflow history in tree/Gantt chart, as well as statistical analysis on the task status & process status in each workflow.

|

||||

- Support back-filling data.

|

||||

- Support multi-tenant.

|

||||

- Support internationalization.

|

||||

- There are more waiting for partners to explore...

|

||||

- More features waiting for partners to explore...

|

||||

|

||||

|

||||

### What's in Dolphin Scheduler

|

||||

### What's in DolphinScheduler

|

||||

|

||||

Stability | Easy to use | Features | Scalability |

|

||||

-- | -- | -- | --

|

||||

Decentralized multi-master and multi-worker | Visualization process defines key information such as task status, task type, retry times, task running machine, visual variables, and so on at a glance. | Support pause, recover operation | Support custom task types

|

||||

HA is supported by itself | All process definition operations are visualized, dragging tasks to draw DAGs, configuring data sources and resources. At the same time, for third-party systems, the API mode operation is provided. | Users on Dolphin Scheduler can achieve many-to-one or one-to-one mapping relationship through tenants and Hadoop users, which is very important for scheduling large data jobs. | The scheduler uses distributed scheduling, and the overall scheduling capability will increase linearly with the scale of the cluster. Master and Worker support dynamic online and offline.

|

||||

Overload processing: Overload processing: By using the task queue mechanism, the number of schedulable tasks on a single machine can be flexibly configured. Machine jam can be avoided with high tolerance to numbers of tasks cached in task queue. | One-click deployment | Support traditional shell tasks, and big data platform task scheduling: MR, Spark, SQL (MySQL, PostgreSQL, hive, spark SQL), Python, Procedure, Sub_Process | |

|

||||

Decentralized multi-master and multi-worker | Visualization of workflow key information, such as task status, task type, retry times, task operation machine information, visual variables, and so on at a glance. | Support pause, recover operation | Support customized task types

|

||||

support HA | Visualization of all workflow operations, dragging tasks to draw DAGs, configuring data sources and resources. At the same time, for third-party systems, provide API mode operations. | Users on DolphinScheduler can achieve many-to-one or one-to-one mapping relationship through tenants and Hadoop users, which is very important for scheduling large data jobs. | The scheduler supports distributed scheduling, and the overall scheduling capability will increase linearly with the scale of the cluster. Master and Worker support dynamic adjustment.

|

||||

Overload processing: By using the task queue mechanism, the number of schedulable tasks on a single machine can be flexibly configured. Machine jam can be avoided with high tolerance to numbers of tasks cached in task queue. | One-click deployment | Support traditional shell tasks, and big data platform task scheduling: MR, Spark, SQL (MySQL, PostgreSQL, hive, spark SQL), Python, Procedure, Sub_Process | |

|

||||

|

||||

|

||||

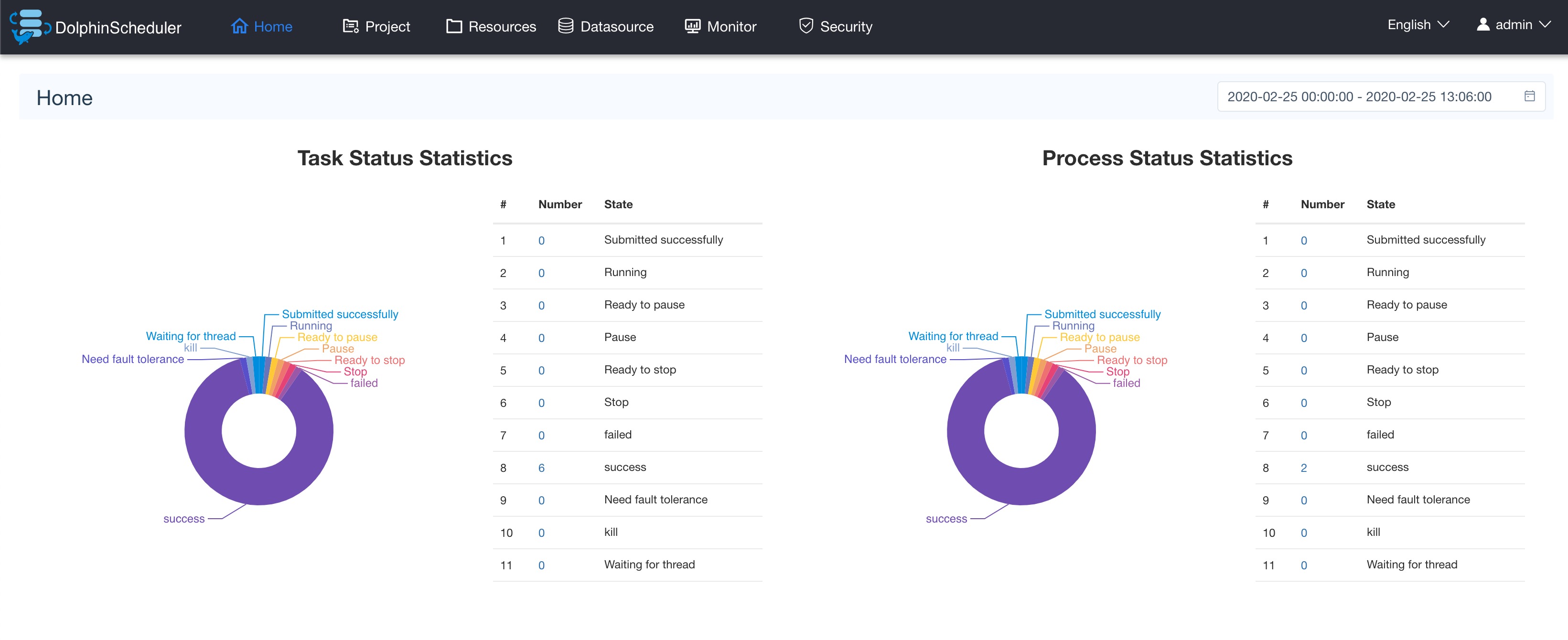

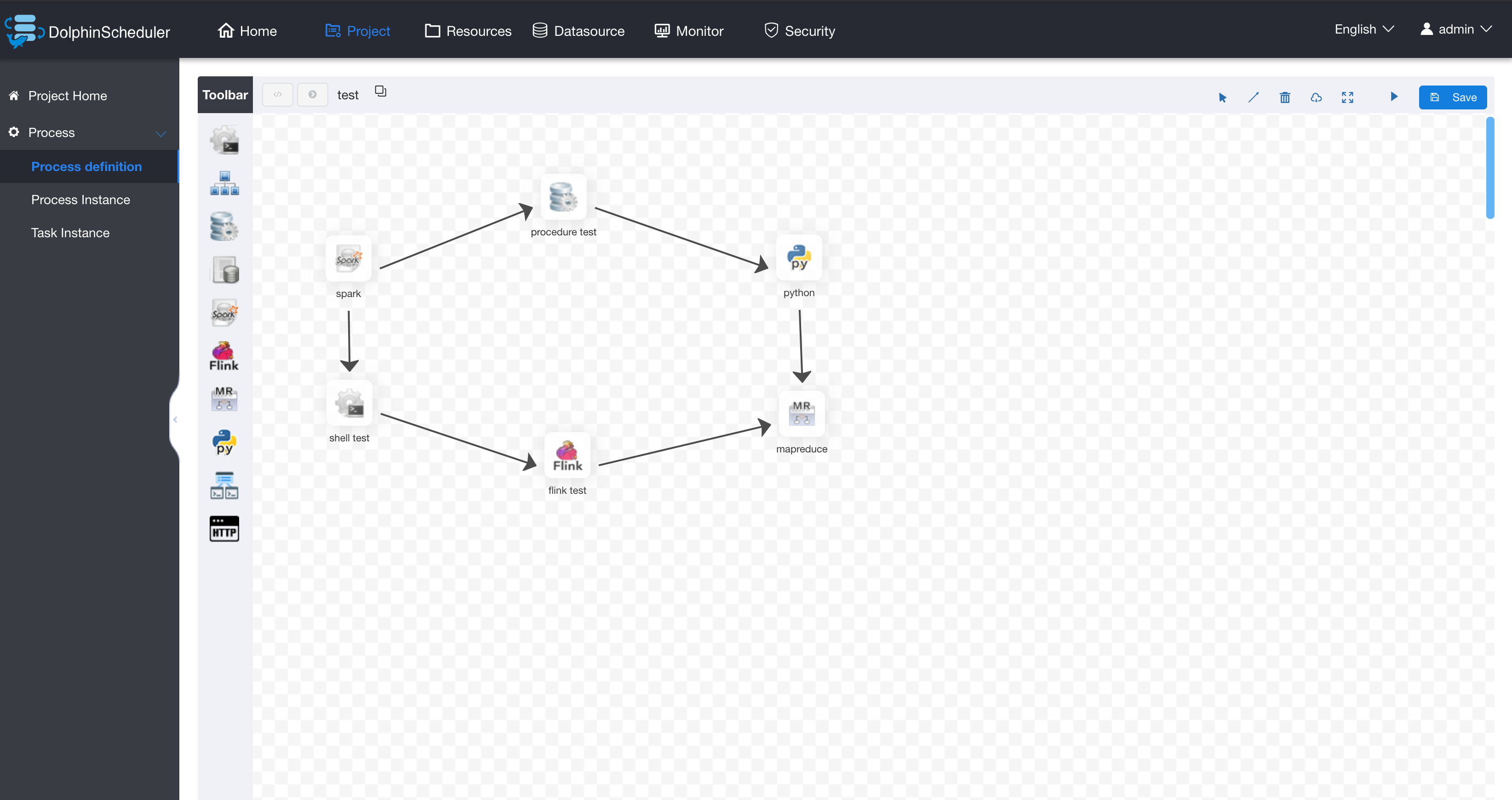

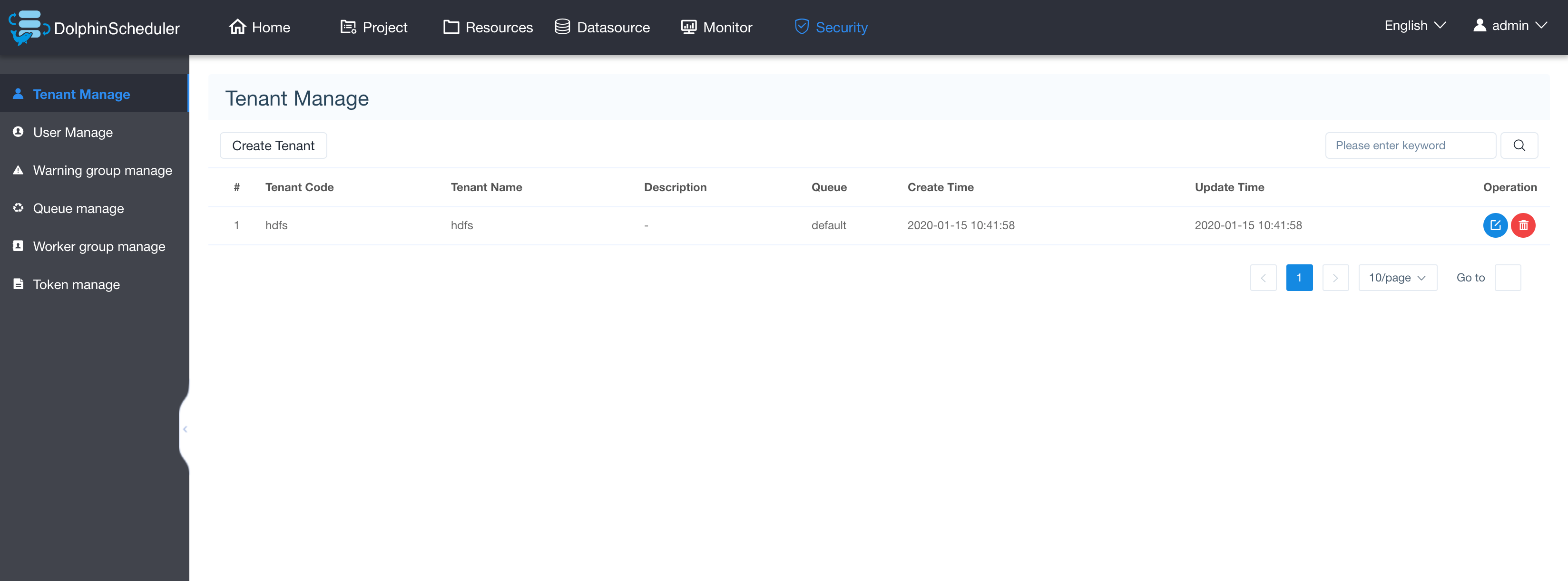

### System partial screenshot

|

||||

### User Interface Screenshots

|

||||

|

||||

|

||||

|

||||

@ -57,13 +55,9 @@ Overload processing: Overload processing: By using the task queue mechanism, the

|

||||

|

||||

|

||||

|

||||

### QuickStart in Docker

|

||||

Please refer to the official website documentation: [[QuickStart in Docker](https://dolphinscheduler.apache.org/en-us/docs/1.3.4/user_doc/docker-deployment.html)]

|

||||

|

||||

### Recent R&D plan

|

||||

The work plan of Dolphin Scheduler: [R&D plan](https://github.com/apache/incubator-dolphinscheduler/projects/1). The `In Develop` card shows the features that are currently being developed, and the `TODO` card lists what still needs to be done (including feature ideas).

|

||||

|

||||

### How to contribute

|

||||

|

||||

Contributions are welcome. To find out more, please refer to this website: [[How to contribute](https://dolphinscheduler.apache.org/en-us/docs/development/contribute.html)]

|

||||

|

||||

### How to Build

|

||||

|

||||

@ -80,14 +74,16 @@ dolphinscheduler-dist/target/apache-dolphinscheduler-incubating-${latest.release

|

||||

|

||||

### Thanks

|

||||

|

||||

Dolphin Scheduler is based on a lot of excellent open-source projects, such as google guava, guice, grpc, netty, ali bonecp, quartz, and many open-source projects of Apache and so on.

|

||||

We would like to express our deep gratitude to all the open-source projects which contribute to making the dream of Dolphin Scheduler comes true. We hope that we are not only the beneficiaries of open-source, but also give back to the community. Besides, we expect the partners who have the same passion and conviction to open-source will join in and contribute to the open-source community!

|

||||

|

||||

DolphinScheduler is based on a lot of excellent open-source projects, such as google guava, guice, grpc, netty, ali bonecp, quartz, and many open-source projects of Apache and so on.

|

||||

We would like to express our deep gratitude to all the open-source projects used in Dolphin Scheduler. We hope that we are not only beneficiaries of open source, but also give back to the community. We also hope that everyone who shares the same enthusiasm and passion for open source will join in and contribute to the open-source community!

|

||||

|

||||

### Get Help

|

||||

1. Submit an issue

|

||||

1. Submit an [[issue](https://github.com/apache/incubator-dolphinscheduler/issues/new/choose)]

|

||||

1. Subscribe to the mailing list: https://dolphinscheduler.apache.org/en-us/docs/development/subscribe.html, then email dev@dolphinscheduler.apache.org

|

||||

|

||||

### How to Contribute

|

||||

The community welcomes contributions from everyone. To find out more, please refer to this website: [[How to contribute](https://dolphinscheduler.apache.org/en-us/community/development/contribute.html)]

|

||||

|

||||

|

||||

### License

|

||||

Please refer to the [LICENSE](https://github.com/apache/incubator-dolphinscheduler/blob/dev/LICENSE) file.

|

||||

|

||||

@ -15,53 +15,64 @@

|

||||

~ limitations under the License.

|

||||

-->

|

||||

<configuration>

|

||||

<property>

|

||||

<name>worker.exec.threads</name>

|

||||

<value>100</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker execute thread num</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.heartbeat.interval</name>

|

||||

<value>10</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker heartbeat interval</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.max.cpuload.avg</name>

|

||||

<value>100</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>only less than cpu avg load, worker server can work. default value : the number of cpu cores * 2</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.reserved.memory</name>

|

||||

<value>0.3</value>

|

||||

<description>only larger than reserved memory, worker server can work. default value : physical memory * 1/10, unit is G.</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

|

||||

<property>

|

||||

<name>worker.listen.port</name>

|

||||

<value>1234</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker listen port</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.groups</name>

|

||||

<value>default</value>

|

||||

<description>default worker group</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.exec.threads</name>

|

||||

<value>100</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker execute thread num</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.heartbeat.interval</name>

|

||||

<value>10</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker heartbeat interval</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.max.cpuload.avg</name>

|

||||

<value>100</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>only less than cpu avg load, worker server can work. default value : the number of cpu cores * 2

|

||||

</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.reserved.memory</name>

|

||||

<value>0.3</value>

|

||||

<description>only larger than reserved memory, worker server can work. default value : physical memory * 1/10,

|

||||

unit is G.

|

||||

</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.listen.port</name>

|

||||

<value>1234</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker listen port</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.groups</name>

|

||||

<value>default</value>

|

||||

<description>default worker group</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

<property>

|

||||

<name>worker.weigth</name>

|

||||

<value>100</value>

|

||||

<value-attributes>

|

||||

<type>int</type>

|

||||

</value-attributes>

|

||||

<description>worker weight</description>

|

||||

<on-ambari-upgrade add="true"/>

|

||||

</property>

|

||||

</configuration>
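The two threshold properties above (`worker.max.cpuload.avg` and `worker.reserved.memory`) can be read as a simple admission check. The following is a minimal sketch under that reading, not the worker's actual implementation, which is not part of this diff. The function name `worker_can_accept` and its four arguments are hypothetical.

```shell
# Hypothetical helper: succeed (exit 0) only when the worker may take tasks.
# Arguments: current load average, worker.max.cpuload.avg,
#            available memory in G, worker.reserved.memory in G.
worker_can_accept() {
  load_avg="$1"
  max_load="$2"
  avail_mem_g="$3"
  reserved_mem_g="$4"
  # awk does the floating-point comparison; "+ 0" forces numeric context.
  # The worker can work only if load < limit AND available memory > reserve.
  awk -v l="$load_avg" -v m="$max_load" -v a="$avail_mem_g" -v r="$reserved_mem_g" \
    'BEGIN { exit !((l + 0 < m + 0) && (a + 0 > r + 0)) }'
}
```

With the values from this file, `worker_can_accept 1.5 100 4.0 0.3` succeeds, while a load average of 120 against the limit of 100 fails.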

|

||||

@ -15,55 +15,37 @@

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

FROM nginx:alpine

|

||||

FROM openjdk:8-jdk-alpine

|

||||

|

||||

ARG VERSION

|

||||

|

||||

ENV TZ Asia/Shanghai

|

||||

ENV LANG C.UTF-8

|

||||

ENV DEBIAN_FRONTEND noninteractive

|

||||

ENV DOCKER true

|

||||

|

||||

#1. install dos2unix shadow bash openrc python sudo vim wget iputils net-tools ssh pip tini kazoo.

|

||||

#If installation is slow, you can replace alpine's mirror with aliyun's mirror. Example:

|

||||

#RUN sed -i "s/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g" /etc/apk/repositories

|

||||

# 1. install command/library/software

|

||||

# If installation is slow, you can replace alpine's mirror with aliyun's mirror. Example:

|

||||

# RUN sed -i "s/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g" /etc/apk/repositories

|

||||

# RUN sed -i 's/dl-cdn.alpinelinux.org/mirror.tuna.tsinghua.edu.cn/g' /etc/apk/repositories

|

||||

RUN apk update && \

|

||||

apk add --update --no-cache dos2unix shadow bash openrc python2 python3 sudo vim wget iputils net-tools openssh-server py-pip tini && \

|

||||

apk add --update --no-cache procps && \

|

||||

openrc boot && \

|

||||

pip install kazoo

|

||||

apk add --no-cache tzdata dos2unix bash python2 python3 procps sudo shadow tini postgresql-client && \

|

||||

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \

|

||||

apk del tzdata && \

|

||||

rm -rf /var/cache/apk/*

|

||||

|

||||

#2. install jdk

|

||||

RUN apk add --update --no-cache openjdk8

|

||||

ENV JAVA_HOME /usr/lib/jvm/java-1.8-openjdk

|

||||

ENV PATH $JAVA_HOME/bin:$PATH

|

||||

|

||||

#3. add dolphinscheduler

|

||||

# 2. add dolphinscheduler

|

||||

ADD ./apache-dolphinscheduler-incubating-${VERSION}-dolphinscheduler-bin.tar.gz /opt/

|

||||

RUN mv /opt/apache-dolphinscheduler-incubating-${VERSION}-dolphinscheduler-bin/ /opt/dolphinscheduler/

|

||||

RUN ln -s /opt/apache-dolphinscheduler-incubating-${VERSION}-dolphinscheduler-bin /opt/dolphinscheduler

|

||||

ENV DOLPHINSCHEDULER_HOME /opt/dolphinscheduler

|

||||

|

||||

#4. install database, if use mysql as your backend database, the `mysql-client` package should be installed

|

||||

RUN apk add --update --no-cache postgresql postgresql-contrib

|

||||

|

||||

#5. modify nginx

|

||||

RUN echo "daemon off;" >> /etc/nginx/nginx.conf && \

|

||||

rm -rf /etc/nginx/conf.d/*

|

||||

COPY ./conf/nginx/dolphinscheduler.conf /etc/nginx/conf.d

|

||||

|

||||

#6. add configuration and modify permissions and set soft links

|

||||

# 3. add configuration and modify permissions and set soft links

|

||||

COPY ./checkpoint.sh /root/checkpoint.sh

|

||||

COPY ./startup-init-conf.sh /root/startup-init-conf.sh

|

||||

COPY ./startup.sh /root/startup.sh

|

||||

COPY ./conf/dolphinscheduler/*.tpl /opt/dolphinscheduler/conf/

|

||||

COPY ./conf/dolphinscheduler/logback/* /opt/dolphinscheduler/conf/

|

||||

COPY conf/dolphinscheduler/env/dolphinscheduler_env.sh /opt/dolphinscheduler/conf/env/

|

||||

RUN chmod +x /root/checkpoint.sh && \

|

||||

chmod +x /root/startup-init-conf.sh && \

|

||||

chmod +x /root/startup.sh && \

|

||||

chmod +x /opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh && \

|

||||

chmod +x /opt/dolphinscheduler/script/*.sh && \

|

||||

chmod +x /opt/dolphinscheduler/bin/*.sh && \

|

||||

dos2unix /root/checkpoint.sh && \

|

||||

COPY ./conf/dolphinscheduler/env/dolphinscheduler_env.sh /opt/dolphinscheduler/conf/env/

|

||||

RUN dos2unix /root/checkpoint.sh && \

|

||||

dos2unix /root/startup-init-conf.sh && \

|

||||

dos2unix /root/startup.sh && \

|

||||

dos2unix /opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh && \

|

||||

@ -71,13 +53,10 @@ RUN chmod +x /root/checkpoint.sh && \

|

||||

dos2unix /opt/dolphinscheduler/bin/*.sh && \

|

||||

rm -rf /bin/sh && \

|

||||

ln -s /bin/bash /bin/sh && \

|

||||

mkdir -p /tmp/xls && \

|

||||

#7. remove apk index cache and disable coredump for sudo

|

||||

rm -rf /var/cache/apk/* && \

|

||||

mkdir -p /var/mail /tmp/xls && \

|

||||

echo "Set disable_coredump false" >> /etc/sudo.conf

|

||||

|

||||

|

||||

#8. expose port

|

||||

EXPOSE 2181 2888 3888 5432 5678 1234 12345 50051 8888

|

||||

# 4. expose port

|

||||

EXPOSE 5678 1234 12345 50051

|

||||

|

||||

ENTRYPOINT ["/sbin/tini", "--", "/root/startup.sh"]

|

||||

|

||||

@ -15,9 +15,9 @@ Official Website: https://dolphinscheduler.apache.org

|

||||

|

||||

#### You can start a dolphinscheduler instance

|

||||

```

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e DATABASE_USERNAME=test -e DATABASE_PASSWORD=test -e DATABASE_DATABASE=dolphinscheduler \

|

||||

-p 8888:8888 \

|

||||

-p 12345:12345 \

|

||||

dolphinscheduler all

|

||||

```

|

||||

|

||||

@ -33,7 +33,7 @@ You can specify **existing postgres service**. Example:

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

|

||||

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

|

||||

-p 8888:8888 \

|

||||

-p 12345:12345 \

|

||||

dolphinscheduler all

|

||||

```

|

||||

|

||||

@ -43,7 +43,7 @@ You can specify **existing zookeeper service**. Example:

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

|

||||

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" -e DATABASE_DATABASE="dolphinscheduler" \

|

||||

-p 8888:8888 \

|

||||

-p 12345:12345 \

|

||||

dolphinscheduler all

|

||||

```

|

||||

|

||||

@ -90,14 +90,6 @@ $ docker run -dit --name dolphinscheduler \

|

||||

dolphinscheduler alert-server

|

||||

```

|

||||

|

||||

* Start a **frontend**, for example:

|

||||

|

||||

```

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e FRONTEND_API_SERVER_HOST="192.168.x.x" -e FRONTEND_API_SERVER_PORT="12345" \

|

||||

-p 8888:8888 \

|

||||

dolphinscheduler frontend

|

||||

```

|

||||

|

||||

**Note**: You must specify `DATABASE_HOST` `DATABASE_PORT` `DATABASE_DATABASE` `DATABASE_USERNAME` `DATABASE_PASSWORD` `ZOOKEEPER_QUORUM` when starting a standalone dolphinscheduler server.
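As a rough illustration of the note above, a wrapper script could check the six variables before calling `docker run`. This is only a sketch: the variable names come from the note, while the helper name `missing_required_env` is hypothetical and not part of the image.

```shell
# Hypothetical helper: print the names of required variables that are
# unset or empty. Empty output means everything needed is present.
missing_required_env() {
  missing=""
  for name in DATABASE_HOST DATABASE_PORT DATABASE_DATABASE \
              DATABASE_USERNAME DATABASE_PASSWORD ZOOKEEPER_QUORUM; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  # Drop the leading space before printing.
  echo "${missing# }"
}
```

A caller might run `[ -z "$(missing_required_env)" ]` and refuse to start a standalone `master-server` or `worker-server` container when the check fails.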

|

||||

|

||||

@ -146,7 +138,7 @@ This environment variable sets the host for database. The default value is `127.

|

||||

|

||||

This environment variable sets the port for database. The default value is `5432`.

|

||||

|

||||

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

|

||||

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

|

||||

|

||||

**`DATABASE_USERNAME`**

|

||||

|

||||

@ -306,18 +298,6 @@ This environment variable sets enterprise wechat agent id for `alert-server`. Th

|

||||

|

||||

This environment variable sets enterprise wechat users for `alert-server`. The default value is empty.

|

||||

|

||||

**`FRONTEND_API_SERVER_HOST`**

|

||||

|

||||

This environment variable sets api server host for `frontend`. The default value is `127.0.0.1`.

|

||||

|

||||

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `api-server`.

|

||||

|

||||

**`FRONTEND_API_SERVER_PORT`**

|

||||

|

||||

This environment variable sets api server port for `frontend`. The default value is `12345`.

|

||||

|

||||

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `api-server`.

|

||||

|

||||

## Initialization scripts

|

||||

|

||||

If you would like to do additional initialization in an image derived from this one, add one or more environment variables to `/root/startup-init-conf.sh`, and modify the template files in `/opt/dolphinscheduler/conf/*.tpl`.

|

||||

@ -326,7 +306,7 @@ For example, to add an environment variable `API_SERVER_PORT` in `/root/start-in

|

||||

|

||||

```

|

||||

export API_SERVER_PORT=5555

|

||||

```

|

||||

```

|

||||

|

||||

Then modify the `/opt/dolphinscheduler/conf/application-api.properties.tpl` template file to add the server port:

|

||||

```

|

||||

@ -343,8 +323,4 @@ $(cat ${DOLPHINSCHEDULER_HOME}/conf/${line})

|

||||

EOF

|

||||

" > ${DOLPHINSCHEDULER_HOME}/conf/${line%.*}

|

||||

done

|

||||

|

||||

echo "generate nginx config"

|

||||

sed -i "s/FRONTEND_API_SERVER_HOST/${FRONTEND_API_SERVER_HOST}/g" /etc/nginx/conf.d/dolphinscheduler.conf

|

||||

sed -i "s/FRONTEND_API_SERVER_PORT/${FRONTEND_API_SERVER_PORT}/g" /etc/nginx/conf.d/dolphinscheduler.conf

|

||||

```
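The loop fragment above expands each `*.tpl` file through a here-document and writes the result next to it without the `.tpl` suffix. A self-contained sketch of that technique follows. The function name `render_templates` is hypothetical, and the property line used in the usage note (`server.port=${API_SERVER_PORT}`) is an assumed example, since the template contents are elided in this diff.

```shell
# Hypothetical helper: render every *.tpl file in a directory by expanding
# ${VAR} references with the current shell variables.
render_templates() {
  conf_dir="$1"
  for tpl in "$conf_dir"/*.tpl; do
    # Skip the unexpanded glob when the directory has no templates.
    [ -f "$tpl" ] || continue
    # The unquoted EOF delimiter makes the shell expand variables in the
    # template body; ${tpl%.*} strips the trailing ".tpl" for the target.
    eval "cat << EOF
$(cat "$tpl")
EOF
" > "${tpl%.*}"
  done
}
```

With `API_SERVER_PORT=5555` set and a template containing `server.port=${API_SERVER_PORT}`, calling `render_templates /opt/dolphinscheduler/conf` would write `server.port=5555` to the rendered file. Because the template body is evaluated by the shell, any command substitution inside a template would also run, so templates should come from a trusted source.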

|

||||

|

||||

@ -15,9 +15,9 @@ Official Website: https://dolphinscheduler.apache.org

|

||||

|

||||

#### You can run a dolphinscheduler instance

|

||||

```

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e DATABASE_USERNAME=test -e DATABASE_PASSWORD=test -e DATABASE_DATABASE=dolphinscheduler \

|

||||

-p 8888:8888 \

|

||||

-p 12345:12345 \

|

||||

dolphinscheduler all

|

||||

```

|

||||

|

||||

@ -33,7 +33,7 @@ dolphinscheduler all

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

|

||||

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

|

||||

-p 8888:8888 \

|

||||

-p 12345:12345 \

|

||||

dolphinscheduler all

|

||||

```

|

||||

|

||||

@ -43,7 +43,7 @@ dolphinscheduler all

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

|

||||

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" -e DATABASE_DATABASE="dolphinscheduler" \

|

||||

-p 8888:8888 \

|

||||

-p 12345:12345 \

|

||||

dolphinscheduler all

|

||||

```

|

||||

|

||||

@ -90,15 +90,6 @@ $ docker run -dit --name dolphinscheduler \

|

||||

dolphinscheduler alert-server

|

||||

```

|

||||

|

||||

* Start a **frontend**, for example:

|

||||

|

||||

```

|

||||

$ docker run -dit --name dolphinscheduler \

|

||||

-e FRONTEND_API_SERVER_HOST="192.168.x.x" -e FRONTEND_API_SERVER_PORT="12345" \

|

||||

-p 8888:8888 \

|

||||

dolphinscheduler frontend

|

||||

```

|

||||

|

||||

**Note**: When you run only some of the dolphinscheduler services, you must specify these environment variables: `DATABASE_HOST` `DATABASE_PORT` `DATABASE_DATABASE` `DATABASE_USERNAME` `DATABASE_PASSWORD` `ZOOKEEPER_QUORUM`.

|

||||

|

||||

## How to build a docker image

|

||||

@ -306,18 +297,6 @@ The Dolphin Scheduler image uses several environment variables that are easy to miss. Although these

|

||||

|

||||

Sets the enterprise WeChat `USERS` of the mail service for `alert-server`. The default value is empty.

|

||||

|

||||

**`FRONTEND_API_SERVER_HOST`**

|

||||

|

||||

Sets the address of the `api-server` that `frontend` connects to. The default value is `127.0.0.1`.

|

||||

|

||||

**Note**: When `api-server` runs on its own, you should set this value to point to that `api-server`.

|

||||

|

||||

**`FRONTEND_API_SERVER_PORT`**

|

||||

|

||||

Sets the port of the `api-server` that `frontend` connects to. The default value is `12345`.

|

||||

|

||||

**Note**: When `api-server` runs on its own, you should set this value to point to that `api-server`.

|

||||

|

||||

## Initialization scripts

|

||||

|

||||

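If you want to perform additional operations or add environment variables at build time or at run time, you can modify the `/root/startup-init-conf.sh` file. If configuration files also need to change, modify the corresponding files in `/opt/dolphinscheduler/conf/*.tpl`.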

|

||||

@ -326,7 +305,7 @@ The Dolphin Scheduler image uses several environment variables that are easy to miss. Although these

|

||||

|

||||

```

|

||||

export API_SERVER_PORT=5555

|

||||

```

|

||||

```

|

||||

|

||||

After adding the environment variable above, add the corresponding setting to the template file `/opt/dolphinscheduler/conf/application-api.properties.tpl`:

|

||||

```

|

||||

@ -343,8 +322,4 @@ $(cat ${DOLPHINSCHEDULER_HOME}/conf/${line})

|

||||

EOF

|

||||

" > ${DOLPHINSCHEDULER_HOME}/conf/${line%.*}

|

||||

done

|

||||

|

||||

echo "generate nginx config"

|

||||

sed -i "s/FRONTEND_API_SERVER_HOST/${FRONTEND_API_SERVER_HOST}/g" /etc/nginx/conf.d/dolphinscheduler.conf

|

||||

sed -i "s/FRONTEND_API_SERVER_PORT/${FRONTEND_API_SERVER_PORT}/g" /etc/nginx/conf.d/dolphinscheduler.conf

|

||||

```

|

||||

|

||||

0

docker/build/checkpoint.sh

Normal file → Executable file

12

docker/build/conf/dolphinscheduler/env/dolphinscheduler_env.sh

vendored

Normal file → Executable file

@ -15,6 +15,14 @@

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

export PYTHON_HOME=/usr/bin/python2

|

||||

export HADOOP_HOME=/opt/soft/hadoop

|

||||

export HADOOP_CONF_DIR=/opt/soft/hadoop/etc/hadoop

|

||||

export SPARK_HOME1=/opt/soft/spark1

|

||||

export SPARK_HOME2=/opt/soft/spark2

|

||||

export PYTHON_HOME=/usr/bin/python

|

||||

export JAVA_HOME=/usr/lib/jvm/java-1.8-openjdk

|

||||

export PATH=$PYTHON_HOME/bin:$JAVA_HOME/bin:$PATH

|

||||

export HIVE_HOME=/opt/soft/hive

|

||||

export FLINK_HOME=/opt/soft/flink

|

||||

export DATAX_HOME=/opt/soft/datax/bin/datax.py

|

||||

|

||||

export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$SPARK_HOME2/bin:$PYTHON_HOME:$JAVA_HOME/bin:$HIVE_HOME/bin:$PATH:$FLINK_HOME/bin:$DATAX_HOME:$PATH

|

||||

|

||||

@ -20,14 +20,6 @@

|

||||

<configuration scan="true" scanPeriod="120 seconds"> <!--debug="true" -->

|

||||

|

||||

<property name="log.base" value="logs"/>

|

||||

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

|

||||

<encoder>

|

||||

<pattern>

|

||||

[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

</appender>

|

||||

|

||||

<appender name="ALERTLOGFILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

|

||||

<file>${log.base}/dolphinscheduler-alert.log</file>

|

||||

@ -45,7 +37,6 @@

|

||||

</appender>

|

||||

|

||||

<root level="INFO">

|

||||

<appender-ref ref="STDOUT"/>

|

||||

<appender-ref ref="ALERTLOGFILE"/>

|

||||

</root>

|

||||

|

||||

|

||||

@ -20,14 +20,6 @@

|

||||

<configuration scan="true" scanPeriod="120 seconds"> <!--debug="true" -->

|

||||

|

||||

<property name="log.base" value="logs"/>

|

||||

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

|

||||

<encoder>

|

||||

<pattern>

|

||||

[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

</appender>

|

||||

|

||||

<!-- api server logback config start -->

|

||||

<appender name="APILOGFILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

|

||||

@ -55,7 +47,6 @@

|

||||

|

||||

|

||||

<root level="INFO">

|

||||

<appender-ref ref="STDOUT"/>

|

||||

<appender-ref ref="APILOGFILE"/>

|

||||

</root>

|

||||

|

||||

|

||||

@ -20,14 +20,6 @@

<configuration scan="true" scanPeriod="120 seconds"> <!--debug="true" -->

<property name="log.base" value="logs"/>
<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
<encoder>
<pattern>
[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n
</pattern>
<charset>UTF-8</charset>
</encoder>
</appender>

<conversionRule conversionWord="messsage"
converterClass="org.apache.dolphinscheduler.server.log.SensitiveDataConverter"/>

@ -74,7 +66,6 @@

<!-- master server logback config end -->

<root level="INFO">
<appender-ref ref="STDOUT"/>
<appender-ref ref="TASKLOGFILE"/>
<appender-ref ref="MASTERLOGFILE"/>
</root>

@ -20,14 +20,6 @@

<configuration scan="true" scanPeriod="120 seconds"> <!--debug="true" -->

<property name="log.base" value="logs"/>
<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
<encoder>
<pattern>
[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n
</pattern>
<charset>UTF-8</charset>
</encoder>
</appender>

<!-- worker server logback config start -->
<conversionRule conversionWord="messsage"

@ -75,7 +67,6 @@

<!-- worker server logback config end -->

<root level="INFO">
<appender-ref ref="STDOUT"/>
<appender-ref ref="TASKLOGFILE"/>
<appender-ref ref="WORKERLOGFILE"/>
</root>

@ -1,51 +0,0 @@

#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

server {
listen 8888;
server_name localhost;
#charset koi8-r;
#access_log /var/log/nginx/host.access.log main;
location / {
root /opt/dolphinscheduler/ui;
index index.html index.html;
}
location /dolphinscheduler/ui{
alias /opt/dolphinscheduler/ui;
}
location /dolphinscheduler {
proxy_pass http://FRONTEND_API_SERVER_HOST:FRONTEND_API_SERVER_PORT;
proxy_set_header Host $host;
proxy_set_header X-Real-IP $remote_addr;
proxy_set_header x_real_ipP $remote_addr;
proxy_set_header remote_addr $remote_addr;
proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
proxy_http_version 1.1;
proxy_connect_timeout 300s;
proxy_read_timeout 300s;
proxy_send_timeout 300s;
proxy_set_header Upgrade $http_upgrade;
proxy_set_header Connection "upgrade";
}
#error_page 404 /404.html;
# redirect server error pages to the static page /50x.html
#
error_page 500 502 503 504 /50x.html;
location = /50x.html {
root /usr/share/nginx/html;
}
}

@ -1,47 +0,0 @@

#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/tmp/zookeeper
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1
#Four Letter Words commands:stat,ruok,conf,isro
4lw.commands.whitelist=*

9

docker/build/hooks/build

Normal file → Executable file

@ -48,7 +48,12 @@ echo -e "mv $(pwd)/dolphinscheduler-dist/target/apache-dolphinscheduler-incubati

mv "$(pwd)"/dolphinscheduler-dist/target/apache-dolphinscheduler-incubating-"${VERSION}"-dolphinscheduler-bin.tar.gz $(pwd)/docker/build/

# docker build
echo -e "docker build --build-arg VERSION=${VERSION} -t $DOCKER_REPO:${VERSION} $(pwd)/docker/build/\n"
sudo docker build --build-arg VERSION="${VERSION}" -t $DOCKER_REPO:"${VERSION}" "$(pwd)/docker/build/"
BUILD_COMMAND="docker build --build-arg VERSION=${VERSION} -t $DOCKER_REPO:${VERSION} $(pwd)/docker/build/"
echo -e "$BUILD_COMMAND\n"
if (docker info 2> /dev/null | grep -i "ERROR"); then
sudo $BUILD_COMMAND
else
$BUILD_COMMAND
fi

echo "------ dolphinscheduler end - build -------"

0

docker/build/hooks/push

Normal file → Executable file

18

docker/build/startup-init-conf.sh

Normal file → Executable file

@ -39,9 +39,9 @@ export DATABASE_PARAMS=${DATABASE_PARAMS:-"characterEncoding=utf8"}

export DOLPHINSCHEDULER_ENV_PATH=${DOLPHINSCHEDULER_ENV_PATH:-"/opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh"}
export DOLPHINSCHEDULER_DATA_BASEDIR_PATH=${DOLPHINSCHEDULER_DATA_BASEDIR_PATH:-"/tmp/dolphinscheduler"}
export DOLPHINSCHEDULER_OPTS=${DOLPHINSCHEDULER_OPTS:-""}
export RESOURCE_STORAGE_TYPE=${RESOURCE_STORAGE_TYPE:-"NONE"}
export RESOURCE_UPLOAD_PATH=${RESOURCE_UPLOAD_PATH:-"/ds"}
export FS_DEFAULT_FS=${FS_DEFAULT_FS:-"s3a://xxxx"}
export RESOURCE_STORAGE_TYPE=${RESOURCE_STORAGE_TYPE:-"HDFS"}
export RESOURCE_UPLOAD_PATH=${RESOURCE_UPLOAD_PATH:-"/dolphinscheduler"}
export FS_DEFAULT_FS=${FS_DEFAULT_FS:-"file:///"}
export FS_S3A_ENDPOINT=${FS_S3A_ENDPOINT:-"s3.xxx.amazonaws.com"}
export FS_S3A_ACCESS_KEY=${FS_S3A_ACCESS_KEY:-"xxxxxxx"}
export FS_S3A_SECRET_KEY=${FS_S3A_SECRET_KEY:-"xxxxxxx"}

@ -81,7 +81,7 @@ export WORKER_WEIGHT=${WORKER_WEIGHT:-"100"}

#============================================================================
# alert plugin dir
export ALERT_PLUGIN_DIR=${ALERT_PLUGIN_DIR:-"/opt/dolphinscheduler"}
# XLS FILE
# xls file
export XLS_FILE_PATH=${XLS_FILE_PATH:-"/tmp/xls"}
# mail
export MAIL_SERVER_HOST=${MAIL_SERVER_HOST:-""}

@ -99,12 +99,6 @@ export ENTERPRISE_WECHAT_SECRET=${ENTERPRISE_WECHAT_SECRET:-""}

export ENTERPRISE_WECHAT_AGENT_ID=${ENTERPRISE_WECHAT_AGENT_ID:-""}
export ENTERPRISE_WECHAT_USERS=${ENTERPRISE_WECHAT_USERS:-""}

#============================================================================
# Frontend
#============================================================================
export FRONTEND_API_SERVER_HOST=${FRONTEND_API_SERVER_HOST:-"127.0.0.1"}
export FRONTEND_API_SERVER_PORT=${FRONTEND_API_SERVER_PORT:-"12345"}

echo "generate app config"
ls ${DOLPHINSCHEDULER_HOME}/conf/ | grep ".tpl" | while read line; do
eval "cat << EOF

@ -112,7 +106,3 @@ $(cat ${DOLPHINSCHEDULER_HOME}/conf/${line})

EOF
" > ${DOLPHINSCHEDULER_HOME}/conf/${line%.*}
done

echo "generate nginx config"
sed -i "s/FRONTEND_API_SERVER_HOST/${FRONTEND_API_SERVER_HOST}/g" /etc/nginx/conf.d/dolphinscheduler.conf
sed -i "s/FRONTEND_API_SERVER_PORT/${FRONTEND_API_SERVER_PORT}/g" /etc/nginx/conf.d/dolphinscheduler.conf

55

docker/build/startup.sh

Normal file → Executable file

@ -22,8 +22,8 @@ DOLPHINSCHEDULER_BIN=${DOLPHINSCHEDULER_HOME}/bin

DOLPHINSCHEDULER_SCRIPT=${DOLPHINSCHEDULER_HOME}/script
DOLPHINSCHEDULER_LOGS=${DOLPHINSCHEDULER_HOME}/logs

# start database
initDatabase() {
# wait database
waitDatabase() {
echo "test ${DATABASE_TYPE} service"
while ! nc -z ${DATABASE_HOST} ${DATABASE_PORT}; do
counter=$((counter+1))

@ -43,19 +43,22 @@ initDatabase() {

exit 1
fi
else
v=$(sudo -u postgres PGPASSWORD=${DATABASE_PASSWORD} psql -h ${DATABASE_HOST} -p ${DATABASE_PORT} -U ${DATABASE_USERNAME} -d ${DATABASE_DATABASE} -tAc "select 1")
v=$(PGPASSWORD=${DATABASE_PASSWORD} psql -h ${DATABASE_HOST} -p ${DATABASE_PORT} -U ${DATABASE_USERNAME} -d ${DATABASE_DATABASE} -tAc "select 1")
if [ "$(echo ${v} | grep 'FATAL' | wc -l)" -eq 1 ]; then
echo "Error: Can't connect to database...${v}"
exit 1
fi
fi
}

# init database
initDatabase() {
echo "import sql data"
${DOLPHINSCHEDULER_SCRIPT}/create-dolphinscheduler.sh
}

# start zk
initZK() {
# wait zk
waitZK() {
echo "connect remote zookeeper"
echo "${ZOOKEEPER_QUORUM}" | awk -F ',' 'BEGIN{ i=1 }{ while( i <= NF ){ print $i; i++ } }' | while read line; do
while ! nc -z ${line%:*} ${line#*:}; do

@ -70,12 +73,6 @@ initZK() {

done
}

# start nginx
initNginx() {
echo "start nginx"
nginx &
}

# start master-server
initMasterServer() {
echo "start master-server"

@ -115,59 +112,54 @@ initAlertServer() {

printUsage() {
echo -e "Dolphin Scheduler is a distributed and easy-to-expand visual DAG workflow scheduling system,"
echo -e "dedicated to solving the complex dependencies in data processing, making the scheduling system out of the box for data processing.\n"
echo -e "Usage: [ all | master-server | worker-server | api-server | alert-server | frontend ]\n"
printf "%-13s: %s\n" "all" "Run master-server, worker-server, api-server, alert-server and frontend."
echo -e "Usage: [ all | master-server | worker-server | api-server | alert-server ]\n"
printf "%-13s: %s\n" "all" "Run master-server, worker-server, api-server and alert-server"
printf "%-13s: %s\n" "master-server" "MasterServer is mainly responsible for DAG task split, task submission monitoring."
printf "%-13s: %s\n" "worker-server" "WorkerServer is mainly responsible for task execution and providing log services.."
printf "%-13s: %s\n" "api-server" "ApiServer is mainly responsible for processing requests from the front-end UI layer."
printf "%-13s: %s\n" "worker-server" "WorkerServer is mainly responsible for task execution and providing log services."
printf "%-13s: %s\n" "api-server" "ApiServer is mainly responsible for processing requests and providing the front-end UI layer."
printf "%-13s: %s\n" "alert-server" "AlertServer mainly include Alarms."
printf "%-13s: %s\n" "frontend" "Frontend mainly provides various visual operation interfaces of the system."
}

# init config file
source /root/startup-init-conf.sh

LOGFILE=/var/log/nginx/access.log
case "$1" in
(all)
initZK
waitZK
waitDatabase
initDatabase
initMasterServer
initWorkerServer
initApiServer
initAlertServer
initLoggerServer
initNginx
LOGFILE=/var/log/nginx/access.log
LOGFILE=${DOLPHINSCHEDULER_LOGS}/dolphinscheduler-api-server.log
;;
(master-server)
initZK
initDatabase
waitZK
waitDatabase
initMasterServer
LOGFILE=${DOLPHINSCHEDULER_LOGS}/dolphinscheduler-master.log
;;
(worker-server)
initZK
initDatabase
waitZK
waitDatabase
initWorkerServer
initLoggerServer
LOGFILE=${DOLPHINSCHEDULER_LOGS}/dolphinscheduler-worker.log
;;
(api-server)
initZK
waitZK
waitDatabase
initDatabase
initApiServer
LOGFILE=${DOLPHINSCHEDULER_LOGS}/dolphinscheduler-api-server.log
;;
(alert-server)
initDatabase
waitDatabase
initAlertServer
LOGFILE=${DOLPHINSCHEDULER_LOGS}/dolphinscheduler-alert.log
;;
(frontend)
initNginx
LOGFILE=/var/log/nginx/access.log
;;
(help)
printUsage
exit 1

@ -179,8 +171,7 @@ case "$1" in

esac

# init directories and log files
mkdir -p ${DOLPHINSCHEDULER_LOGS} && mkdir -p /var/log/nginx/ && cat /dev/null >> ${LOGFILE}
mkdir -p ${DOLPHINSCHEDULER_LOGS} && cat /dev/null >> ${LOGFILE}

echo "tail begin"
exec bash -c "tail -n 1 -f ${LOGFILE}"

2

docker/docker-swarm/check

Normal file → Executable file

@ -25,7 +25,7 @@ else

echo "Server start failed "$server_num
exit 1
fi
ready=`curl http://127.0.0.1:8888/dolphinscheduler/login -d 'userName=admin&userPassword=dolphinscheduler123' -v | grep "login success" | wc -l`
ready=`curl http://127.0.0.1:12345/dolphinscheduler/login -d 'userName=admin&userPassword=dolphinscheduler123' -v | grep "login success" | wc -l`
if [ $ready -eq 1 ]
then
echo "Servers is ready"

@ -31,6 +31,7 @@ services:

volumes:
- dolphinscheduler-postgresql:/bitnami/postgresql
- dolphinscheduler-postgresql-initdb:/docker-entrypoint-initdb.d
restart: unless-stopped
networks:
- dolphinscheduler

@ -45,13 +46,14 @@ services:

ZOO_4LW_COMMANDS_WHITELIST: srvr,ruok,wchs,cons
volumes:
- dolphinscheduler-zookeeper:/bitnami/zookeeper
restart: unless-stopped
networks:
- dolphinscheduler

dolphinscheduler-api:
image: apache/dolphinscheduler:latest
container_name: dolphinscheduler-api
command: ["api-server"]
command: api-server
ports:
- 12345:12345
environment:

@ -62,6 +64,9 @@ services:

DATABASE_PASSWORD: root
DATABASE_DATABASE: dolphinscheduler
ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
RESOURCE_STORAGE_TYPE: HDFS
RESOURCE_UPLOAD_PATH: /dolphinscheduler
FS_DEFAULT_FS: file:///
healthcheck:
test: ["CMD", "/root/checkpoint.sh", "ApiApplicationServer"]
interval: 30s

@ -72,37 +77,16 @@ services:

- dolphinscheduler-postgresql
- dolphinscheduler-zookeeper
volumes:
- ./dolphinscheduler-logs:/opt/dolphinscheduler/logs
networks:
- dolphinscheduler

dolphinscheduler-frontend:
image: apache/dolphinscheduler:latest
container_name: dolphinscheduler-frontend
command: ["frontend"]
ports:
- 8888:8888
environment:
TZ: Asia/Shanghai
FRONTEND_API_SERVER_HOST: dolphinscheduler-api
FRONTEND_API_SERVER_PORT: 12345
healthcheck:
test: ["CMD", "nc", "-z", "localhost", "8888"]
interval: 30s
timeout: 5s
retries: 3
start_period: 30s
depends_on:
- dolphinscheduler-api
volumes:
- ./dolphinscheduler-logs:/var/log/nginx
- dolphinscheduler-logs:/opt/dolphinscheduler/logs
- dolphinscheduler-resource-local:/dolphinscheduler
restart: unless-stopped
networks:
- dolphinscheduler

dolphinscheduler-alert:
image: apache/dolphinscheduler:latest
container_name: dolphinscheduler-alert
command: ["alert-server"]
command: alert-server
environment:
TZ: Asia/Shanghai
XLS_FILE_PATH: "/tmp/xls"

@ -133,14 +117,15 @@ services:

depends_on:
- dolphinscheduler-postgresql
volumes:
- ./dolphinscheduler-logs:/opt/dolphinscheduler/logs
- dolphinscheduler-logs:/opt/dolphinscheduler/logs
restart: unless-stopped
networks:
- dolphinscheduler

dolphinscheduler-master:
image: apache/dolphinscheduler:latest
container_name: dolphinscheduler-master
command: ["master-server"]
command: master-server
ports:
- 5678:5678
environment:

@ -168,14 +153,15 @@ services:

- dolphinscheduler-postgresql
- dolphinscheduler-zookeeper
volumes:
- ./dolphinscheduler-logs:/opt/dolphinscheduler/logs
- dolphinscheduler-logs:/opt/dolphinscheduler/logs
restart: unless-stopped
networks:
- dolphinscheduler

dolphinscheduler-worker:
image: apache/dolphinscheduler:latest
container_name: dolphinscheduler-worker
command: ["worker-server"]
command: worker-server
ports:
- 1234:1234
- 50051:50051

@ -188,30 +174,40 @@ services:

WORKER_RESERVED_MEMORY: "0.1"
WORKER_GROUP: "default"
WORKER_WEIGHT: "100"
DOLPHINSCHEDULER_DATA_BASEDIR_PATH: "/tmp/dolphinscheduler"
DOLPHINSCHEDULER_DATA_BASEDIR_PATH: /tmp/dolphinscheduler
XLS_FILE_PATH: "/tmp/xls"
MAIL_SERVER_HOST: ""
MAIL_SERVER_PORT: ""
MAIL_SENDER: ""
MAIL_USER: ""
MAIL_PASSWD: ""
MAIL_SMTP_STARTTLS_ENABLE: "false"
MAIL_SMTP_SSL_ENABLE: "false"
MAIL_SMTP_SSL_TRUST: ""
DATABASE_HOST: dolphinscheduler-postgresql
DATABASE_PORT: 5432
DATABASE_USERNAME: root
DATABASE_PASSWORD: root
DATABASE_DATABASE: dolphinscheduler
ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
RESOURCE_STORAGE_TYPE: HDFS
RESOURCE_UPLOAD_PATH: /dolphinscheduler
FS_DEFAULT_FS: file:///
healthcheck:
test: ["CMD", "/root/checkpoint.sh", "WorkerServer"]
interval: 30s
timeout: 5s
retries: 3
start_period: 30s
depends_on:
depends_on:
- dolphinscheduler-postgresql
- dolphinscheduler-zookeeper
volumes:
- type: bind
source: ./dolphinscheduler_env.sh
target: /opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh
- type: volume
source: dolphinscheduler-worker-data
target: /tmp/dolphinscheduler
- ./dolphinscheduler-logs:/opt/dolphinscheduler/logs
- ./dolphinscheduler_env.sh:/opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh
- dolphinscheduler-worker-data:/tmp/dolphinscheduler
- dolphinscheduler-logs:/opt/dolphinscheduler/logs
- dolphinscheduler-resource-local:/dolphinscheduler
restart: unless-stopped
networks:
- dolphinscheduler

@ -224,7 +220,5 @@ volumes:

dolphinscheduler-postgresql-initdb:
dolphinscheduler-zookeeper:
dolphinscheduler-worker-data:

configs:
dolphinscheduler-worker-task-env:
file: ./dolphinscheduler_env.sh
dolphinscheduler-logs:
dolphinscheduler-resource-local:

@ -20,13 +20,13 @@ services:

dolphinscheduler-postgresql:
image: bitnami/postgresql:latest
ports:
- 5432:5432
environment:
TZ: Asia/Shanghai
POSTGRESQL_USERNAME: root
POSTGRESQL_PASSWORD: root
POSTGRESQL_DATABASE: dolphinscheduler
ports:
- 5432:5432
volumes:
- dolphinscheduler-postgresql:/bitnami/postgresql
networks:

@ -37,12 +37,12 @@ services:

dolphinscheduler-zookeeper:
image: bitnami/zookeeper:latest
ports:
- 2181:2181
environment:
TZ: Asia/Shanghai
ALLOW_ANONYMOUS_LOGIN: "yes"
ZOO_4LW_COMMANDS_WHITELIST: srvr,ruok,wchs,cons
ports:
- 2181:2181
volumes:
- dolphinscheduler-zookeeper:/bitnami/zookeeper
networks:

@ -53,7 +53,9 @@ services:

dolphinscheduler-api:
image: apache/dolphinscheduler:latest
command: ["api-server"]
command: api-server
ports:
- 12345:12345
environment:
TZ: Asia/Shanghai
DATABASE_HOST: dolphinscheduler-postgresql

@ -62,39 +64,17 @@ services:

DATABASE_PASSWORD: root
DATABASE_DATABASE: dolphinscheduler
ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
ports:
- 12345:12345
RESOURCE_STORAGE_TYPE: HDFS
RESOURCE_UPLOAD_PATH: /dolphinscheduler
FS_DEFAULT_FS: file:///
healthcheck:
test: ["CMD", "/root/checkpoint.sh", "ApiApplicationServer"]
interval: 30
interval: 30s
timeout: 5s
retries: 3
start_period: 30s
volumes:
- dolphinscheduler-logs:/opt/dolphinscheduler/logs
networks:
- dolphinscheduler
deploy:
mode: replicated
replicas: 1

dolphinscheduler-frontend:
image: apache/dolphinscheduler:latest
command: ["frontend"]
ports:
- 8888:8888
environment:
TZ: Asia/Shanghai
FRONTEND_API_SERVER_HOST: dolphinscheduler-api
FRONTEND_API_SERVER_PORT: 12345
healthcheck:
test: ["CMD", "nc", "-z", "localhost", "8888"]
interval: 30
timeout: 5s
retries: 3
start_period: 30s
volumes:
- dolphinscheduler-logs:/var/log/nginx
networks:
- dolphinscheduler
deploy:

@ -103,7 +83,7 @@ services:

dolphinscheduler-alert:
image: apache/dolphinscheduler:latest
command: ["alert-server"]
command: alert-server
environment:
TZ: Asia/Shanghai
XLS_FILE_PATH: "/tmp/xls"

@ -127,13 +107,13 @@ services:

DATABASE_DATABASE: dolphinscheduler
healthcheck:
test: ["CMD", "/root/checkpoint.sh", "AlertServer"]
interval: 30
interval: 30s
timeout: 5s
retries: 3
start_period: 30s
volumes:
- dolphinscheduler-logs:/opt/dolphinscheduler/logs
networks:
networks:
- dolphinscheduler
deploy:
mode: replicated

@ -141,10 +121,10 @@ services:

dolphinscheduler-master:
image: apache/dolphinscheduler:latest
command: ["master-server"]
ports:
command: master-server
ports:
- 5678:5678
environment:
environment:
TZ: Asia/Shanghai
MASTER_EXEC_THREADS: "100"
MASTER_EXEC_TASK_NUM: "20"

@ -161,7 +141,7 @@ services:

ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
healthcheck:
test: ["CMD", "/root/checkpoint.sh", "MasterServer"]
interval: 30
interval: 30s
timeout: 5s
retries: 3
start_period: 30s

@ -175,11 +155,11 @@ services:
