This reverts commit 1baa1f4279.

32

.github/PULL_REQUEST_TEMPLATE.md

@ -1,32 +0,0 @@

|

||||

## *Tips*

|

||||

- *Thanks very much for contributing to Apache DolphinScheduler.*

|

||||

- *Please review https://dolphinscheduler.apache.org/en-us/community/index.html before opening a pull request.*

|

||||

|

||||

## What is the purpose of the pull request

|

||||

|

||||

*(For example: This pull request adds checkstyle plugin.)*

|

||||

|

||||

## Brief change log

|

||||

|

||||

*(for example:)*

|

||||

- *Add maven-checkstyle-plugin to root pom.xml*

|

||||

|

||||

## Verify this pull request

|

||||

|

||||

*(Please pick either of the following options)*

|

||||

|

||||

This pull request is code cleanup without any test coverage.

|

||||

|

||||

*(or)*

|

||||

|

||||

This pull request is already covered by existing tests, such as *(please describe tests)*.

|

||||

|

||||

(or)

|

||||

|

||||

This change added tests and can be verified as follows:

|

||||

|

||||

*(example:)*

|

||||

|

||||

- *Added dolphinscheduler-dao tests for end-to-end.*

|

||||

- *Added CronUtilsTest to verify the change.*

|

||||

- *Manually verified the change by testing locally.*

|

||||

64

.github/workflows/ci_backend.yml

@ -1,64 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

name: Backend

|

||||

|

||||

on:

|

||||

push:

|

||||

paths:

|

||||

- '.github/workflows/ci_backend.yml'

|

||||

- 'package.xml'

|

||||

- 'pom.xml'

|

||||

- 'dolphinscheduler-alert/**'

|

||||

- 'dolphinscheduler-api/**'

|

||||

- 'dolphinscheduler-common/**'

|

||||

- 'dolphinscheduler-dao/**'

|

||||

- 'dolphinscheduler-rpc/**'

|

||||

- 'dolphinscheduler-server/**'

|

||||

pull_request:

|

||||

paths:

|

||||

- '.github/workflows/ci_backend.yml'

|

||||

- 'package.xml'

|

||||

- 'pom.xml'

|

||||

- 'dolphinscheduler-alert/**'

|

||||

- 'dolphinscheduler-api/**'

|

||||

- 'dolphinscheduler-common/**'

|

||||

- 'dolphinscheduler-dao/**'

|

||||

- 'dolphinscheduler-rpc/**'

|

||||

- 'dolphinscheduler-server/**'

|

||||

|

||||

jobs:

|

||||

Compile-check:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v1

|

||||

- name: Set up JDK 1.8

|

||||

uses: actions/setup-java@v1

|

||||

with:

|

||||

java-version: 1.8

|

||||

- name: Compile

|

||||

run: mvn -U -B -T 1C clean install -Prelease -Dmaven.compile.fork=true -Dmaven.test.skip=true

|

||||

License-check:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v1

|

||||

- name: Set up JDK 1.8

|

||||

uses: actions/setup-java@v1

|

||||

with:

|

||||

java-version: 1.8

|

||||

- name: Check

|

||||

run: mvn -B apache-rat:check
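The `paths:` lists in the Backend workflow above restrict which changed files start a run. A minimal sketch of that selection logic, assuming bash `case` globs are a close enough stand-in for the workflow's filter patterns (the function name `backend_ci_triggers` and the sample paths are hypothetical, not part of the repository):

```bash
#!/usr/bin/env bash
# Approximates the `paths:` filter of ci_backend.yml with shell globs.
# Assumption: in a bash `case` pattern `*` also matches `/`, so `dir/*`
# stands in for the workflow's `dir/**`.
backend_ci_triggers() {
  case "$1" in
    .github/workflows/ci_backend.yml|package.xml|pom.xml) return 0 ;;
    dolphinscheduler-alert/*|dolphinscheduler-api/*|dolphinscheduler-common/*) return 0 ;;
    dolphinscheduler-dao/*|dolphinscheduler-rpc/*|dolphinscheduler-server/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Classify a few sample paths.
for path in pom.xml dolphinscheduler-api/src/Foo.java dolphinscheduler-ui/package.json; do
  if backend_ci_triggers "$path"; then
    echo "$path: would start the Backend workflow"
  else
    echo "$path: ignored by the Backend workflow"
  fi
done
```

A real push or pull request carries many changed files; the workflow runs when at least one of them matches, so a caller would apply the function to each changed path and stop at the first match.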

|

||||

58

.github/workflows/ci_frontend.yml

@ -1,58 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

name: Frontend

|

||||

|

||||

on:

|

||||

push:

|

||||

paths:

|

||||

- '.github/workflows/ci_frontend.yml'

|

||||

- 'dolphinscheduler-ui/**'

|

||||

pull_request:

|

||||

paths:

|

||||

- '.github/workflows/ci_frontend.yml'

|

||||

- 'dolphinscheduler-ui/**'

|

||||

|

||||

jobs:

|

||||

Compile-check:

|

||||

runs-on: ${{ matrix.os }}

|

||||

strategy:

|

||||

matrix:

|

||||

os: [ubuntu-latest, macos-latest]

|

||||

steps:

|

||||

- uses: actions/checkout@v1

|

||||

- name: Set up Node.js

|

||||

uses: actions/setup-node@v1

|

||||

with:

|

||||

version: 8

|

||||

- name: Compile

|

||||

run: |

|

||||

cd dolphinscheduler-ui

|

||||

npm install node-sass --unsafe-perm

|

||||

npm install

|

||||

npm run build

|

||||

|

||||

License-check:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v1

|

||||

- name: Set up JDK 1.8

|

||||

uses: actions/setup-java@v1

|

||||

with:

|

||||

java-version: 1.8

|

||||

- name: Check

|

||||

run: mvn -B apache-rat:check

|

||||

218

.gitignore

@ -35,112 +35,112 @@ config.gypi

|

||||

test/coverage

|

||||

/docs/zh_CN/介绍

|

||||

/docs/zh_CN/贡献代码.md

|

||||

/dolphinscheduler-common/src/main/resources/zookeeper.properties

|

||||

dolphinscheduler-alert/logs/

|

||||

dolphinscheduler-alert/src/main/resources/alert.properties_bak

|

||||

dolphinscheduler-alert/src/main/resources/logback.xml

|

||||

dolphinscheduler-server/src/main/resources/logback.xml

|

||||

dolphinscheduler-ui/dist/css/common.16ac5d9.css

|

||||

dolphinscheduler-ui/dist/css/home/index.b444b91.css

|

||||

dolphinscheduler-ui/dist/css/login/index.5866c64.css

|

||||

dolphinscheduler-ui/dist/js/0.ac94e5d.js

|

||||

dolphinscheduler-ui/dist/js/0.ac94e5d.js.map

|

||||

dolphinscheduler-ui/dist/js/1.0b043a3.js

|

||||

dolphinscheduler-ui/dist/js/1.0b043a3.js.map

|

||||

dolphinscheduler-ui/dist/js/10.1bce3dc.js

|

||||

dolphinscheduler-ui/dist/js/10.1bce3dc.js.map

|

||||

dolphinscheduler-ui/dist/js/11.79f04d8.js

|

||||

dolphinscheduler-ui/dist/js/11.79f04d8.js.map

|

||||

dolphinscheduler-ui/dist/js/12.420daa5.js

|

||||

dolphinscheduler-ui/dist/js/12.420daa5.js.map

|

||||

dolphinscheduler-ui/dist/js/13.e5bae1c.js

|

||||

dolphinscheduler-ui/dist/js/13.e5bae1c.js.map

|

||||

dolphinscheduler-ui/dist/js/14.f2a0dca.js

|

||||

dolphinscheduler-ui/dist/js/14.f2a0dca.js.map

|

||||

dolphinscheduler-ui/dist/js/15.45373e8.js

|

||||

dolphinscheduler-ui/dist/js/15.45373e8.js.map

|

||||

dolphinscheduler-ui/dist/js/16.fecb0fc.js

|

||||

dolphinscheduler-ui/dist/js/16.fecb0fc.js.map

|

||||

dolphinscheduler-ui/dist/js/17.84be279.js

|

||||

dolphinscheduler-ui/dist/js/17.84be279.js.map

|

||||

dolphinscheduler-ui/dist/js/18.307ea70.js

|

||||

dolphinscheduler-ui/dist/js/18.307ea70.js.map

|

||||

dolphinscheduler-ui/dist/js/19.144db9c.js

|

||||

dolphinscheduler-ui/dist/js/19.144db9c.js.map

|

||||

dolphinscheduler-ui/dist/js/2.8b4ef29.js

|

||||

dolphinscheduler-ui/dist/js/2.8b4ef29.js.map

|

||||

dolphinscheduler-ui/dist/js/20.4c527e9.js

|

||||

dolphinscheduler-ui/dist/js/20.4c527e9.js.map

|

||||

dolphinscheduler-ui/dist/js/21.831b2a2.js

|

||||

dolphinscheduler-ui/dist/js/21.831b2a2.js.map

|

||||

dolphinscheduler-ui/dist/js/22.2b4bb2a.js

|

||||

dolphinscheduler-ui/dist/js/22.2b4bb2a.js.map

|

||||

dolphinscheduler-ui/dist/js/23.81467ef.js

|

||||

dolphinscheduler-ui/dist/js/23.81467ef.js.map

|

||||

dolphinscheduler-ui/dist/js/24.54a00e4.js

|

||||

dolphinscheduler-ui/dist/js/24.54a00e4.js.map

|

||||

dolphinscheduler-ui/dist/js/25.8d7bd36.js

|

||||

dolphinscheduler-ui/dist/js/25.8d7bd36.js.map

|

||||

dolphinscheduler-ui/dist/js/26.2ec5e78.js

|

||||

dolphinscheduler-ui/dist/js/26.2ec5e78.js.map

|

||||

dolphinscheduler-ui/dist/js/27.3ab48c2.js

|

||||

dolphinscheduler-ui/dist/js/27.3ab48c2.js.map

|

||||

dolphinscheduler-ui/dist/js/28.363088a.js

|

||||

dolphinscheduler-ui/dist/js/28.363088a.js.map

|

||||

dolphinscheduler-ui/dist/js/29.6c5853a.js

|

||||

dolphinscheduler-ui/dist/js/29.6c5853a.js.map

|

||||

dolphinscheduler-ui/dist/js/3.a0edb5b.js

|

||||

dolphinscheduler-ui/dist/js/3.a0edb5b.js.map

|

||||

dolphinscheduler-ui/dist/js/30.940fdd3.js

|

||||

dolphinscheduler-ui/dist/js/30.940fdd3.js.map

|

||||

dolphinscheduler-ui/dist/js/31.168a460.js

|

||||

dolphinscheduler-ui/dist/js/31.168a460.js.map

|

||||

dolphinscheduler-ui/dist/js/32.8df6594.js

|

||||

dolphinscheduler-ui/dist/js/32.8df6594.js.map

|

||||

dolphinscheduler-ui/dist/js/33.4480bbe.js

|

||||

dolphinscheduler-ui/dist/js/33.4480bbe.js.map

|

||||

dolphinscheduler-ui/dist/js/34.b407fe1.js

|

||||

dolphinscheduler-ui/dist/js/34.b407fe1.js.map

|

||||

dolphinscheduler-ui/dist/js/35.f340b0a.js

|

||||

dolphinscheduler-ui/dist/js/35.f340b0a.js.map

|

||||

dolphinscheduler-ui/dist/js/36.8880c2d.js

|

||||

dolphinscheduler-ui/dist/js/36.8880c2d.js.map

|

||||

dolphinscheduler-ui/dist/js/37.ea2a25d.js

|

||||

dolphinscheduler-ui/dist/js/37.ea2a25d.js.map

|

||||

dolphinscheduler-ui/dist/js/38.98a59ee.js

|

||||

dolphinscheduler-ui/dist/js/38.98a59ee.js.map

|

||||

dolphinscheduler-ui/dist/js/39.a5e958a.js

|

||||

dolphinscheduler-ui/dist/js/39.a5e958a.js.map

|

||||

dolphinscheduler-ui/dist/js/4.4ca44db.js

|

||||

dolphinscheduler-ui/dist/js/4.4ca44db.js.map

|

||||

dolphinscheduler-ui/dist/js/40.e187b1e.js

|

||||

dolphinscheduler-ui/dist/js/40.e187b1e.js.map

|

||||

dolphinscheduler-ui/dist/js/41.0e89182.js

|

||||

dolphinscheduler-ui/dist/js/41.0e89182.js.map

|

||||

dolphinscheduler-ui/dist/js/42.341047c.js

|

||||

dolphinscheduler-ui/dist/js/42.341047c.js.map

|

||||

dolphinscheduler-ui/dist/js/43.27b8228.js

|

||||

dolphinscheduler-ui/dist/js/43.27b8228.js.map

|

||||

dolphinscheduler-ui/dist/js/44.e8869bc.js

|

||||

dolphinscheduler-ui/dist/js/44.e8869bc.js.map

|

||||

dolphinscheduler-ui/dist/js/45.8d54901.js

|

||||

dolphinscheduler-ui/dist/js/45.8d54901.js.map

|

||||

dolphinscheduler-ui/dist/js/5.e1ed7f3.js

|

||||

dolphinscheduler-ui/dist/js/5.e1ed7f3.js.map

|

||||

dolphinscheduler-ui/dist/js/6.241ba07.js

|

||||

dolphinscheduler-ui/dist/js/6.241ba07.js.map

|

||||

dolphinscheduler-ui/dist/js/7.ab2e297.js

|

||||

dolphinscheduler-ui/dist/js/7.ab2e297.js.map

|

||||

dolphinscheduler-ui/dist/js/8.83ff814.js

|

||||

dolphinscheduler-ui/dist/js/8.83ff814.js.map

|

||||

dolphinscheduler-ui/dist/js/9.39cb29f.js

|

||||

dolphinscheduler-ui/dist/js/9.39cb29f.js.map

|

||||

dolphinscheduler-ui/dist/js/common.733e342.js

|

||||

dolphinscheduler-ui/dist/js/common.733e342.js.map

|

||||

dolphinscheduler-ui/dist/js/home/index.78a5d12.js

|

||||

dolphinscheduler-ui/dist/js/home/index.78a5d12.js.map

|

||||

dolphinscheduler-ui/dist/js/login/index.291b8e3.js

|

||||

dolphinscheduler-ui/dist/js/login/index.291b8e3.js.map

|

||||

dolphinscheduler-ui/dist/lib/external/

|

||||

dolphinscheduler-ui/src/js/conf/home/pages/projects/pages/taskInstance/index.vue

|

||||

/dolphinscheduler-dao/src/main/resources/dao/data_source.properties

|

||||

/escheduler-common/src/main/resources/zookeeper.properties

|

||||

escheduler-alert/logs/

|

||||

escheduler-alert/src/main/resources/alert.properties_bak

|

||||

escheduler-alert/src/main/resources/logback.xml

|

||||

escheduler-server/src/main/resources/logback.xml

|

||||

escheduler-ui/dist/css/common.16ac5d9.css

|

||||

escheduler-ui/dist/css/home/index.b444b91.css

|

||||

escheduler-ui/dist/css/login/index.5866c64.css

|

||||

escheduler-ui/dist/js/0.ac94e5d.js

|

||||

escheduler-ui/dist/js/0.ac94e5d.js.map

|

||||

escheduler-ui/dist/js/1.0b043a3.js

|

||||

escheduler-ui/dist/js/1.0b043a3.js.map

|

||||

escheduler-ui/dist/js/10.1bce3dc.js

|

||||

escheduler-ui/dist/js/10.1bce3dc.js.map

|

||||

escheduler-ui/dist/js/11.79f04d8.js

|

||||

escheduler-ui/dist/js/11.79f04d8.js.map

|

||||

escheduler-ui/dist/js/12.420daa5.js

|

||||

escheduler-ui/dist/js/12.420daa5.js.map

|

||||

escheduler-ui/dist/js/13.e5bae1c.js

|

||||

escheduler-ui/dist/js/13.e5bae1c.js.map

|

||||

escheduler-ui/dist/js/14.f2a0dca.js

|

||||

escheduler-ui/dist/js/14.f2a0dca.js.map

|

||||

escheduler-ui/dist/js/15.45373e8.js

|

||||

escheduler-ui/dist/js/15.45373e8.js.map

|

||||

escheduler-ui/dist/js/16.fecb0fc.js

|

||||

escheduler-ui/dist/js/16.fecb0fc.js.map

|

||||

escheduler-ui/dist/js/17.84be279.js

|

||||

escheduler-ui/dist/js/17.84be279.js.map

|

||||

escheduler-ui/dist/js/18.307ea70.js

|

||||

escheduler-ui/dist/js/18.307ea70.js.map

|

||||

escheduler-ui/dist/js/19.144db9c.js

|

||||

escheduler-ui/dist/js/19.144db9c.js.map

|

||||

escheduler-ui/dist/js/2.8b4ef29.js

|

||||

escheduler-ui/dist/js/2.8b4ef29.js.map

|

||||

escheduler-ui/dist/js/20.4c527e9.js

|

||||

escheduler-ui/dist/js/20.4c527e9.js.map

|

||||

escheduler-ui/dist/js/21.831b2a2.js

|

||||

escheduler-ui/dist/js/21.831b2a2.js.map

|

||||

escheduler-ui/dist/js/22.2b4bb2a.js

|

||||

escheduler-ui/dist/js/22.2b4bb2a.js.map

|

||||

escheduler-ui/dist/js/23.81467ef.js

|

||||

escheduler-ui/dist/js/23.81467ef.js.map

|

||||

escheduler-ui/dist/js/24.54a00e4.js

|

||||

escheduler-ui/dist/js/24.54a00e4.js.map

|

||||

escheduler-ui/dist/js/25.8d7bd36.js

|

||||

escheduler-ui/dist/js/25.8d7bd36.js.map

|

||||

escheduler-ui/dist/js/26.2ec5e78.js

|

||||

escheduler-ui/dist/js/26.2ec5e78.js.map

|

||||

escheduler-ui/dist/js/27.3ab48c2.js

|

||||

escheduler-ui/dist/js/27.3ab48c2.js.map

|

||||

escheduler-ui/dist/js/28.363088a.js

|

||||

escheduler-ui/dist/js/28.363088a.js.map

|

||||

escheduler-ui/dist/js/29.6c5853a.js

|

||||

escheduler-ui/dist/js/29.6c5853a.js.map

|

||||

escheduler-ui/dist/js/3.a0edb5b.js

|

||||

escheduler-ui/dist/js/3.a0edb5b.js.map

|

||||

escheduler-ui/dist/js/30.940fdd3.js

|

||||

escheduler-ui/dist/js/30.940fdd3.js.map

|

||||

escheduler-ui/dist/js/31.168a460.js

|

||||

escheduler-ui/dist/js/31.168a460.js.map

|

||||

escheduler-ui/dist/js/32.8df6594.js

|

||||

escheduler-ui/dist/js/32.8df6594.js.map

|

||||

escheduler-ui/dist/js/33.4480bbe.js

|

||||

escheduler-ui/dist/js/33.4480bbe.js.map

|

||||

escheduler-ui/dist/js/34.b407fe1.js

|

||||

escheduler-ui/dist/js/34.b407fe1.js.map

|

||||

escheduler-ui/dist/js/35.f340b0a.js

|

||||

escheduler-ui/dist/js/35.f340b0a.js.map

|

||||

escheduler-ui/dist/js/36.8880c2d.js

|

||||

escheduler-ui/dist/js/36.8880c2d.js.map

|

||||

escheduler-ui/dist/js/37.ea2a25d.js

|

||||

escheduler-ui/dist/js/37.ea2a25d.js.map

|

||||

escheduler-ui/dist/js/38.98a59ee.js

|

||||

escheduler-ui/dist/js/38.98a59ee.js.map

|

||||

escheduler-ui/dist/js/39.a5e958a.js

|

||||

escheduler-ui/dist/js/39.a5e958a.js.map

|

||||

escheduler-ui/dist/js/4.4ca44db.js

|

||||

escheduler-ui/dist/js/4.4ca44db.js.map

|

||||

escheduler-ui/dist/js/40.e187b1e.js

|

||||

escheduler-ui/dist/js/40.e187b1e.js.map

|

||||

escheduler-ui/dist/js/41.0e89182.js

|

||||

escheduler-ui/dist/js/41.0e89182.js.map

|

||||

escheduler-ui/dist/js/42.341047c.js

|

||||

escheduler-ui/dist/js/42.341047c.js.map

|

||||

escheduler-ui/dist/js/43.27b8228.js

|

||||

escheduler-ui/dist/js/43.27b8228.js.map

|

||||

escheduler-ui/dist/js/44.e8869bc.js

|

||||

escheduler-ui/dist/js/44.e8869bc.js.map

|

||||

escheduler-ui/dist/js/45.8d54901.js

|

||||

escheduler-ui/dist/js/45.8d54901.js.map

|

||||

escheduler-ui/dist/js/5.e1ed7f3.js

|

||||

escheduler-ui/dist/js/5.e1ed7f3.js.map

|

||||

escheduler-ui/dist/js/6.241ba07.js

|

||||

escheduler-ui/dist/js/6.241ba07.js.map

|

||||

escheduler-ui/dist/js/7.ab2e297.js

|

||||

escheduler-ui/dist/js/7.ab2e297.js.map

|

||||

escheduler-ui/dist/js/8.83ff814.js

|

||||

escheduler-ui/dist/js/8.83ff814.js.map

|

||||

escheduler-ui/dist/js/9.39cb29f.js

|

||||

escheduler-ui/dist/js/9.39cb29f.js.map

|

||||

escheduler-ui/dist/js/common.733e342.js

|

||||

escheduler-ui/dist/js/common.733e342.js.map

|

||||

escheduler-ui/dist/js/home/index.78a5d12.js

|

||||

escheduler-ui/dist/js/home/index.78a5d12.js.map

|

||||

escheduler-ui/dist/js/login/index.291b8e3.js

|

||||

escheduler-ui/dist/js/login/index.291b8e3.js.map

|

||||

escheduler-ui/dist/lib/external/

|

||||

escheduler-ui/src/js/conf/home/pages/projects/pages/taskInstance/index.vue

|

||||

/escheduler-dao/src/main/resources/dao/data_source.properties

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

* First from the remote repository *https://github.com/apache/incubator-dolphinscheduler.git* fork code to your own repository

|

||||

* First from the remote repository *https://github.com/analysys/EasyScheduler.git* fork code to your own repository

|

||||

|

||||

* there are three branches in the remote repository currently:

|

||||

* master normal delivery branch

|

||||

@ -7,14 +7,17 @@

|

||||

* dev daily development branch

|

||||

The daily development branch, the newly submitted code can pull requests to this branch.

|

||||

|

||||

* branch-1.0.0 release version branch

|

||||

Release version branch, there will be 2.0 ... and other version branches, the version

|

||||

branch only changes the error, does not add new features.

|

||||

|

||||

* Clone your own warehouse to your local

|

||||

|

||||

`git clone https://github.com/apache/incubator-dolphinscheduler.git`

|

||||

`git clone https://github.com/analysys/EasyScheduler.git`

|

||||

|

||||

* Add remote repository address, named upstream

|

||||

|

||||

`git remote add upstream https://github.com/apache/incubator-dolphinscheduler.git`

|

||||

`git remote add upstream https://github.com/analysys/EasyScheduler.git`

|

||||

|

||||

* View repository:

|

||||

|

||||

@ -60,6 +63,71 @@ git push --set-upstream origin dev1.0

|

||||

|

||||

* Next, the administrator is responsible for **merging** to complete the pull request

|

||||

|

||||

---

|

||||

|

||||

* First, fork the code from the remote repository *https://github.com/analysys/EasyScheduler.git* to your own repository

|

||||

|

||||

* There are currently three branches in the remote repository:

|

||||

* master normal delivery branch

|

||||

After a stable version is released, the code of the stable version branch is merged into master.

|

||||

|

||||

* dev daily development branch

|

||||

The daily dev development branch; newly submitted code can be sent as pull requests to this branch.

|

||||

|

||||

* branch-1.0.0 release version branch

|

||||

Release version branch; there will be 2.0... and other version branches later. Version branches only fix bugs and do not add new features.

|

||||

|

||||

* Clone your own repository to your local machine

|

||||

|

||||

`git clone https://github.com/analysys/EasyScheduler.git`

|

||||

|

||||

* Add the remote repository address, named upstream

|

||||

|

||||

` git remote add upstream https://github.com/analysys/EasyScheduler.git `

|

||||

|

||||

* View the repositories:

|

||||

|

||||

` git remote -v`

|

||||

|

||||

> There will now be two repositories: origin (your own repository) and upstream (the remote repository)

|

||||

|

||||

* Fetch/update the remote repository code (skip this step if the code is already up to date)

|

||||

|

||||

`git fetch upstream `

|

||||

|

||||

|

||||

* Synchronize the remote repository code to the local repository

|

||||

|

||||

```

|

||||

git checkout origin/dev

|

||||

git merge --no-ff upstream/dev

|

||||

```

|

||||

|

||||

If a new branch `dev-1.0` has been added to the remote repository, it needs to be synchronized to the local repository

|

||||

|

||||

```

|

||||

git checkout -b dev-1.0 upstream/dev-1.0

|

||||

git push --set-upstream origin dev1.0

|

||||

```

|

||||

|

||||

* After modifying the code locally, commit it to your own repository:

|

||||

|

||||

`git commit -m 'test commit'`

|

||||

`git push`

|

||||

|

||||

* Submit the changes to the remote repository

|

||||

|

||||

* On the GitHub page, click New pull request.

|

||||

<p align="center">

|

||||

<img src="http://geek.analysys.cn/static/upload/221/2019-04-02/90f3abbf-70ef-4334-b8d6-9014c9cf4c7f.png" width="60%" />

|

||||

</p>

|

||||

|

||||

* Select the modified local branch and the branch to merge into, then click Create pull request.

|

||||

<p align="center">

|

||||

<img src="http://geek.analysys.cn/static/upload/221/2019-04-02/fe7eecfe-2720-4736-951b-b3387cf1ae41.png" width="60%" />

|

||||

</p>

|

||||

|

||||

* Next, the administrator is responsible for **merging** to complete the pull request
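The fork, clone, add-upstream, fetch, merge and push sequence described in the steps above can be rehearsed offline. The following is only a sketch under stated assumptions: local bare repositories in a temporary directory stand in for the real upstream and for the GitHub fork, and the names `upstream.git`, `fork.git`, `seed` and `local` are hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

work=$(mktemp -d)

# Hypothetical stand-in for the upstream repository, with dev as its default branch.
git init --quiet --bare "$work/upstream.git"
git --git-dir="$work/upstream.git" symbolic-ref HEAD refs/heads/dev
git init --quiet "$work/seed"
cd "$work/seed"
git config user.email "demo@example.com"
git config user.name "Demo"
git checkout --quiet -b dev
echo "v1" > README.md
git add README.md
git commit --quiet -m "initial commit"
git push --quiet "$work/upstream.git" dev

# Stand-in for the fork; on GitHub this is created with the Fork button.
git clone --quiet --bare "$work/upstream.git" "$work/fork.git"

# Upstream moves ahead after the fork was taken.
echo "v2" >> README.md
git commit --quiet -am "upstream change"
git push --quiet "$work/upstream.git" dev

# Clone the fork (origin) and register the original repository as upstream.
git clone --quiet "$work/fork.git" "$work/local"
cd "$work/local"
git config user.email "demo@example.com"
git config user.name "Demo"
git remote add upstream "$work/upstream.git"

# Fetch upstream and merge its dev branch into the local dev branch.
git fetch --quiet upstream
git checkout --quiet dev
git merge --quiet --no-ff --no-edit upstream/dev

# Push the synchronized branch back to the fork and list the remotes.
git push --quiet origin dev
git remote
```

The sketch checks out the local `dev` branch before merging so that the merge commit lands on a branch that can be pushed; the pull request itself is still opened in the GitHub web interface.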

|

||||

|

||||

|

||||

|

||||

|

||||

@ -1,5 +0,0 @@

|

||||

Apache DolphinScheduler (incubating) is an effort undergoing incubation at The Apache Software Foundation (ASF), sponsored by the Apache Incubator PMC.

|

||||

Incubation is required of all newly accepted projects until a further review indicates that the infrastructure,

|

||||

communications, and decision making process have stabilized in a manner consistent with other successful ASF projects.

|

||||

While incubation status is not necessarily a reflection of the completeness or stability of the code,

|

||||

it does indicate that the project has yet to be fully endorsed by the ASF.

|

||||

8

NOTICE

@ -1,5 +1,7 @@

|

||||

Apache DolphinScheduler (incubating)

|

||||

Copyright 2019 The Apache Software Foundation

|

||||

Easy Scheduler

|

||||

Copyright 2019 The Analysys Foundation

|

||||

|

||||

This product includes software developed at

|

||||

The Apache Software Foundation (http://www.apache.org/).

|

||||

The Analysys Foundation (https://www.analysys.cn/).

|

||||

|

||||

|

||||

|

||||

30

README.md

@ -11,7 +11,7 @@ Dolphin Scheduler

|

||||

[](README_zh_CN.md)

|

||||

|

||||

|

||||

### Design features:

|

||||

### Design features:

|

||||

|

||||

A distributed and easy-to-expand visual DAG workflow scheduling system. Dedicated to solving the complex dependencies in data processing, making the scheduling system `out of the box` for data processing.

|

||||

Its main objectives are as follows:

|

||||

@ -36,8 +36,8 @@ Its main objectives are as follows:

|

||||

|

||||

Stability | Easy to use | Features | Scalability |

|

||||

-- | -- | -- | --

|

||||

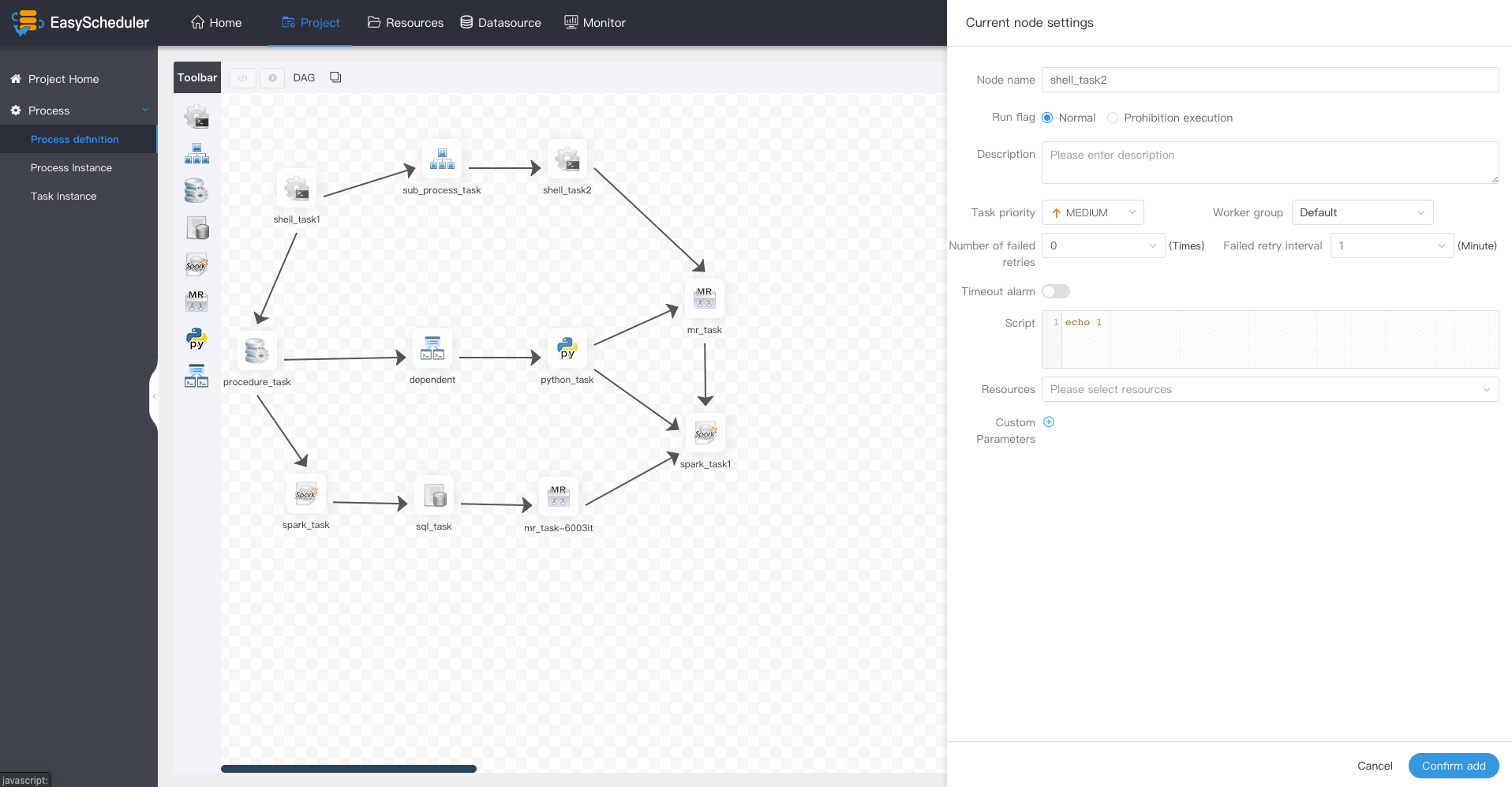

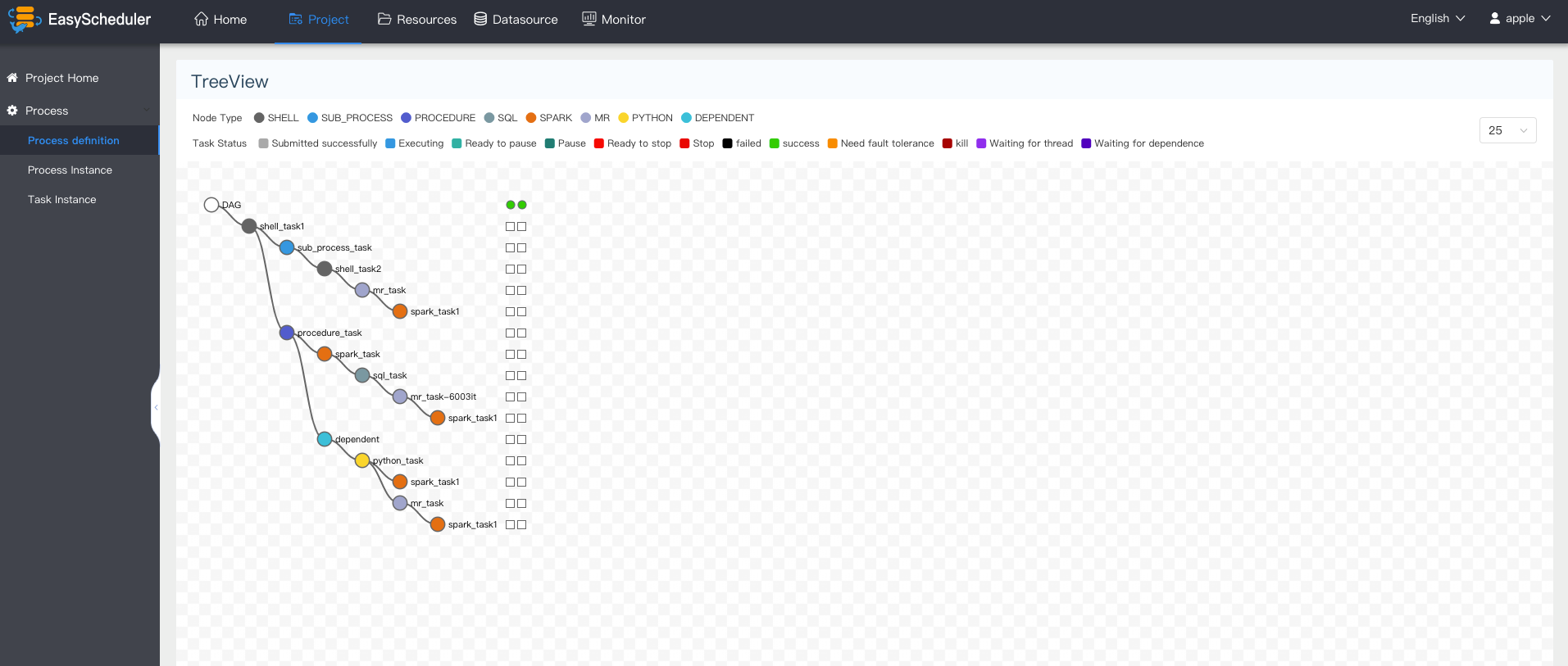

Decentralized multi-master and multi-worker | Visualization process defines key information such as task status, task type, retry times, task running machine, visual variables and so on at a glance. | Support pause, recover operation | support custom task types

|

||||

HA is supported by itself | All process definition operations are visualized, dragging tasks to draw DAGs, configuring data sources and resources. At the same time, for third-party systems, the api mode operation is provided. | Users on DolphinScheduler can achieve many-to-one or one-to-one mapping relationship through tenants and Hadoop users, which is very important for scheduling large data jobs. " | The scheduler uses distributed scheduling, and the overall scheduling capability will increase linearly with the scale of the cluster. Master and Worker support dynamic online and offline.

|

||||

Decentralized multi-master and multi-worker | Visualization process defines key information such as task status, task type, retry times, task running machine, visual variables and so on at a glance. | Support pause, recover operation | support custom task types

|

||||

HA is supported by itself | All process definition operations are visualized, dragging tasks to draw DAGs, configuring data sources and resources. At the same time, for third-party systems, the api mode operation is provided. | Users on DolphinScheduler can achieve many-to-one or one-to-one mapping relationship through tenants and Hadoop users, which is very important for scheduling large data jobs. " | The scheduler uses distributed scheduling, and the overall scheduling capability will increase linearly with the scale of the cluster. Master and Worker support dynamic online and offline.

|

||||

Overload processing: Task queue mechanism, the number of schedulable tasks on a single machine can be flexibly configured, when too many tasks will be cached in the task queue, will not cause machine jam. | One-click deployment | Supports traditional shell tasks, and also support big data platform task scheduling: MR, Spark, SQL (mysql, postgresql, hive, sparksql), Python, Procedure, Sub_Process | |

|

||||

|

||||

|

||||

@ -58,11 +58,11 @@ Overload processing: Task queue mechanism, the number of schedulable tasks on a

|

||||

|

||||

- <a href="https://dolphinscheduler.apache.org/en-us/docs/user_doc/frontend-deployment.html" target="_blank">Front-end deployment documentation</a>

|

||||

|

||||

- [**User manual**](https://dolphinscheduler.apache.org/en-us/docs/user_doc/system-manual.html?_blank "System manual")

|

||||

- [**User manual**](https://dolphinscheduler.apache.org/en-us/docs/user_doc/system-manual.html?_blank "System manual")

|

||||

|

||||

- [**Upgrade document**](https://dolphinscheduler.apache.org/en-us/docs/release/upgrade.html?_blank "Upgrade document")

|

||||

- [**Upgrade document**](https://dolphinscheduler.apache.org/en-us/docs/release/upgrade.html?_blank "Upgrade document")

|

||||

|

||||

- <a href="http://106.75.43.194:8888" target="_blank">Online Demo</a>

|

||||

- <a href="http://106.75.43.194:8888" target="_blank">Online Demo</a>

|

||||

|

||||

More documentation please refer to <a href="https://dolphinscheduler.apache.org/en-us/docs/user_doc/quick-start.html" target="_blank">[DolphinScheduler online documentation]</a>

|

||||

|

||||

@ -74,20 +74,6 @@ Work plan of Dolphin Scheduler: [R&D plan](https://github.com/apache/incubator-d

|

||||

Welcome to participate in contributing code, please refer to the process of submitting the code:

|

||||

[[How to contribute code](https://github.com/apache/incubator-dolphinscheduler/issues/310)]

|

||||

|

||||

### How to Build

|

||||

|

||||

```bash

|

||||

mvn clean install -Prelease

|

||||

```

|

||||

|

||||

Artifact:

|

||||

|

||||

```

|

||||

dolphinscheduler-dist/dolphinscheduler-backend/target/apache-dolphinscheduler-incubating-${latest.release.version}-dolphinscheduler-backend-bin.tar.gz: Binary package of DolphinScheduler-Backend

|

||||

dolphinscheduler-dist/dolphinscheduler-front/target/apache-dolphinscheduler-incubating-${latest.release.version}-dolphinscheduler-front-bin.tar.gz: Binary package of DolphinScheduler-UI

|

||||

dolphinscheduler-dist/dolphinscheduler-src/target/apache-dolphinscheduler-incubating-${latest.release.version}-src.zip: Source code package of DolphinScheduler

|

||||

```

|

||||

|

||||

### Thanks

|

||||

|

||||

Dolphin Scheduler uses a lot of excellent open source projects, such as google guava, guice, grpc, netty, ali bonecp, quartz, and many open source projects of apache, etc.

|

||||

@ -100,8 +86,8 @@ It is because of the shoulders of these open source projects that the birth of t

|

||||

|

||||

### License

|

||||

Please refer to [LICENSE](https://github.com/apache/incubator-dolphinscheduler/blob/dev/LICENSE) file.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -1,13 +1,13 @@

|

||||

Dolphin Scheduler

|

||||

============

|

||||

[](https://www.apache.org/licenses/LICENSE-2.0.html)

|

||||

[](https://github.com/apache/Incubator-DolphinScheduler)

|

||||

[](https://github.com/analysys/EasyScheduler)

|

||||

|

||||

> Dolphin Scheduler for Big Data

|

||||

|

||||

|

||||

|

||||

[](https://starchart.cc/apache/incubator-dolphinscheduler)

|

||||

[](https://starchart.cc/analysys/EasyScheduler)

|

||||

|

||||

[](README_zh_CN.md)

|

||||

[](README.md)

|

||||

@ -45,11 +45,11 @@ Dolphin Scheduler

|

||||

|

||||

- <a href="https://dolphinscheduler.apache.org/zh-cn/docs/user_doc/frontend-deployment.html" target="_blank">Front-end deployment documentation</a>

|

||||

|

||||

- [**User manual**](https://dolphinscheduler.apache.org/zh-cn/docs/user_doc/system-manual.html?_blank "System manual")

|

||||

- [**User manual**](https://dolphinscheduler.apache.org/zh-cn/docs/user_doc/system-manual.html?_blank "System manual")

|

||||

|

||||

- [**Upgrade document**](https://dolphinscheduler.apache.org/zh-cn/docs/release/upgrade.html?_blank "Upgrade document")

|

||||

- [**Upgrade document**](https://dolphinscheduler.apache.org/zh-cn/docs/release/upgrade.html?_blank "Upgrade document")

|

||||

|

||||

- <a href="http://106.75.43.194:8888" target="_blank">Online Demo</a>

|

||||

- <a href="http://106.75.43.194:8888" target="_blank">Online Demo</a>

|

||||

|

||||

For more documentation, please refer to <a href="https://dolphinscheduler.apache.org/zh-cn/docs/user_doc/quick-start.html" target="_blank">DolphinScheduler Chinese online documentation</a>

|

||||

|

||||

@ -63,24 +63,11 @@ Work plan of DolphinScheduler: <a href="https://github.com/apache/incubator-d

|

||||

Everyone is very welcome to contribute code; for the code submission process, please refer to:

|

||||

[[How to contribute code](https://github.com/apache/incubator-dolphinscheduler/issues/310)]

|

||||

|

||||

### How to Build

|

||||

|

||||

```bash

|

||||

mvn clean install -Prelease

|

||||

```

|

||||

|

||||

Artifact:

|

||||

|

||||

```

|

||||

dolphinscheduler-dist/dolphinscheduler-backend/target/apache-dolphinscheduler-incubating-${latest.release.version}-dolphinscheduler-backend-bin.tar.gz: Binary package of DolphinScheduler-Backend

|

||||

dolphinscheduler-dist/dolphinscheduler-front/target/apache-dolphinscheduler-incubating-${latest.release.version}-dolphinscheduler-front-bin.tar.gz: Binary package of DolphinScheduler-UI

|

||||

dolphinscheduler-dist/dolphinscheduler-src/target/apache-dolphinscheduler-incubating-${latest.release.version}-src.zip: Source code package of DolphinScheduler

|

||||

```

|

||||

|

||||

### Thanks

|

||||

|

||||

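Dolphin Scheduler uses many excellent open source projects, such as google's guava, guice, grpc, netty, ali's bonecp, quartz, and many open source projects of apache, etc.,

|

||||

It is precisely because we stand on the shoulders of these open source projects that the birth of Dolphin Scheduler was possible. We are very grateful to all the open source software we use! We also hope to be not only beneficiaries of open source, but also open source

|

||||

It is precisely because we stand on the shoulders of these open source projects that the birth of Easy Scheduler was possible. We are very grateful to all the open source software we use! We also hope to be not only beneficiaries of open source, but also open source

|

||||

contributors, so we decided to contribute Easy Scheduler (易调度) and promise to maintain it over the long term. We also hope that partners with the same passion and conviction for open source will join us and contribute to open source together!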

|

||||

|

||||

|

||||

@ -91,7 +78,7 @@ Dolphin Scheduler uses many excellent open source projects, such as google's guava, g

|

||||

|

||||

### License

|

||||

Please refer to [LICENSE](https://github.com/apache/incubator-dolphinscheduler/blob/dev/LICENSE) file.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -1,55 +0,0 @@

|

||||

## 1.2.0

|

||||

|

||||

### New Feature

|

||||

1. Support PostgreSQL

|

||||

2. Change all Chinese names to English

|

||||

3. Add flink and http task support

|

||||

4. Cross project dependencies

|

||||

5. Modify mybatis to mybatisplus, support multiple databases.

|

||||

6. Add export and import definition feature

|

||||

7. Github actions ci compile check

|

||||

8. Add method and parameters comments

|

||||

9. Add java doc for common module

|

||||

|

||||

|

||||

### Enhancement

|

||||

1. Add license and notice files

|

||||

2. Move batchDelete Process Define/Instance Outside for transactional

|

||||

3. Remove space before and after login user name

|

||||

4. Dockerfile optimization

|

||||

5. Change mysql-connector-java scope to test

|

||||

6. Owners and administrators can delete schedule

|

||||

7. DB page rename and background color modification

|

||||

8. Add PostgreSQL performance monitor

|

||||

9. Resolve style conflict, recipient cannot tab and value verification

|

||||

10. Checkbox change background color and env to Chinese

|

||||

11. Change chinese sql to english

|

||||

12. Change sqlSessionTemplate singleton and reformat code

|

||||

13. The value of loadaverage should be two decimal places

|

||||

14. Delete alert group need delete the relation of user and alert group

|

||||

15. Remove check resources when delete tenant

|

||||

16. Check processInstance state before delete worker group

|

||||

17. Add check user and definitions function when delete tenant

|

||||

18. Delete before check to avoid KeeperException$NoNodeException

|

||||

|

||||

### Bug Fixes

|

||||

1. Fix #1245, make scanCommand transactional

|

||||

2. Fix ZKWorkerClient not close PathChildrenCache

|

||||

3. Data type convert error ,email send error bug fix

|

||||

4. Catch exception transaction method does not take effect to modify

|

||||

5. Fix the spring transaction not worker bug

|

||||

6. Task log print worker log bug fix

|

||||

7. Fix api server debug mode bug

|

||||

8. The task is abnormal and task is running bug fix

|

||||

9. Fix bug: tasks queue length error

|

||||

10. Fix unsuitable error message

|

||||

11. Fix bug: phone can be empty

|

||||

12. Fix email error password

|

||||

13. Fix CheckUtils.checkUserParams method

|

||||

14. The process cannot be terminated while tasks in the status submit success

|

||||

15. Fix too many connection in upgrade or create

|

||||

16. Fix the bug when worker execute task using queue. and remove checking

|

||||

17. Resole verify udf name error and delete udf error

|

||||

18. Fix bug: task cannot submit when recovery failover

|

||||

19. Fix bug: the administrator authorizes the project to ordinary users,but ordinary users cannot see the process definition created by the administrator

|

||||

20. Fix bug: create dolphinscheduler sql failed

|

||||

@ -1,29 +1,13 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

FROM ubuntu:18.04

|

||||

|

||||

MAINTAINER journey "825193156@qq.com"

|

||||

|

||||

ENV LANG=C.UTF-8

|

||||

ENV DEBIAN_FRONTEND=noninteractive

|

||||

|

||||

ARG version

|

||||

ARG tar_version

|

||||

|

||||

#1,install jdk

|

||||

#1,install jdk

|

||||

|

||||

RUN apt-get update \

|

||||

&& apt-get -y install openjdk-8-jdk \

|

||||

@ -33,10 +17,17 @@ ENV JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64

|

||||

ENV PATH $JAVA_HOME/bin:$PATH

|

||||

|

||||

|

||||

#install wget

|

||||

#install wget

|

||||

RUN apt-get update && \

|

||||

apt-get -y install wget

|

||||

#2,install ZK

|

||||

#2,install ZK

|

||||

#RUN cd /opt && \

|

||||

# wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.6/zookeeper-3.4.6.tar.gz && \

|

||||

# tar -zxvf zookeeper-3.4.6.tar.gz && \

|

||||

# mv zookeeper-3.4.6 zookeeper && \

|

||||

# rm -rf ./zookeeper-*tar.gz && \

|

||||

# mkdir -p /tmp/zookeeper && \

|

||||

# rm -rf /opt/zookeeper/conf/zoo_sample.cfg

|

||||

|

||||

RUN cd /opt && \

|

||||

wget https://www-us.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz && \

|

||||

@ -46,22 +37,22 @@ RUN cd /opt && \

|

||||

mkdir -p /tmp/zookeeper && \

|

||||

rm -rf /opt/zookeeper/conf/zoo_sample.cfg

|

||||

|

||||

ADD ./dockerfile/conf/zookeeper/zoo.cfg /opt/zookeeper/conf

|

||||

ADD ./conf/zookeeper/zoo.cfg /opt/zookeeper/conf

|

||||

ENV ZK_HOME=/opt/zookeeper

|

||||

ENV PATH $PATH:$ZK_HOME/bin

|

||||

|

||||

#3,install maven

|

||||

#3,install maven

|

||||

RUN cd /opt && \

|

||||

wget http://apache-mirror.rbc.ru/pub/apache/maven/maven-3/3.3.9/binaries/apache-maven-3.3.9-bin.tar.gz && \

|

||||

tar -zxvf apache-maven-3.3.9-bin.tar.gz && \

|

||||

mv apache-maven-3.3.9 maven && \

|

||||

rm -rf ./apache-maven-*tar.gz && \

|

||||

rm -rf /opt/maven/conf/settings.xml

|

||||

ADD ./dockerfile/conf/maven/settings.xml /opt/maven/conf

|

||||

ADD ./conf/maven/settings.xml /opt/maven/conf

|

||||

ENV MAVEN_HOME=/opt/maven

|

||||

ENV PATH $PATH:$MAVEN_HOME/bin

|

||||

|

||||

#4,install node

|

||||

#4,install node

|

||||

RUN cd /opt && \

|

||||

wget https://nodejs.org/download/release/v8.9.4/node-v8.9.4-linux-x64.tar.gz && \

|

||||

tar -zxvf node-v8.9.4-linux-x64.tar.gz && \

|

||||

@ -70,19 +61,67 @@ RUN cd /opt && \

|

||||

ENV NODE_HOME=/opt/node

|

||||

ENV PATH $PATH:$NODE_HOME/bin

|

||||

|

||||

#5,install postgresql

|

||||

RUN apt-get update && \

|

||||

apt-get install -y postgresql postgresql-contrib sudo && \

|

||||

sed -i 's/localhost/*/g' /etc/postgresql/10/main/postgresql.conf

|

||||

#5,download escheduler

|

||||

RUN cd /opt && \

|

||||

wget https://github.com/analysys/EasyScheduler/archive/${version}.tar.gz && \

|

||||

tar -zxvf ${version}.tar.gz && \

|

||||

mv EasyScheduler-${version} easyscheduler_source && \

|

||||

rm -rf ./${version}.tar.gz

|

||||

|

||||

#6,install nginx

|

||||

#6,backend compilation

|

||||

RUN cd /opt/easyscheduler_source && \

|

||||

mvn -U clean package assembly:assembly -Dmaven.test.skip=true

|

||||

|

||||

#7,frontend compilation

|

||||

RUN chmod -R 777 /opt/easyscheduler_source/escheduler-ui && \

|

||||

cd /opt/easyscheduler_source/escheduler-ui && \

|

||||

rm -rf /opt/easyscheduler_source/escheduler-ui/node_modules && \

|

||||

npm install node-sass --unsafe-perm && \

|

||||

npm install && \

|

||||

npm run build

|

||||

#8,install mysql

|

||||

RUN echo "deb http://cn.archive.ubuntu.com/ubuntu/ xenial main restricted universe multiverse" >> /etc/apt/sources.list

|

||||

|

||||

RUN echo "mysql-server mysql-server/root_password password root" | debconf-set-selections

|

||||

RUN echo "mysql-server mysql-server/root_password_again password root" | debconf-set-selections

|

||||

|

||||

RUN apt-get update && \

|

||||

apt-get -y install mysql-server-5.7 && \

|

||||

mkdir -p /var/lib/mysql && \

|

||||

mkdir -p /var/run/mysqld && \

|

||||

mkdir -p /var/log/mysql && \

|

||||

chown -R mysql:mysql /var/lib/mysql && \

|

||||

chown -R mysql:mysql /var/run/mysqld && \

|

||||

chown -R mysql:mysql /var/log/mysql

|

||||

|

||||

|

||||

# UTF-8 and bind-address

|

||||

RUN sed -i -e "$ a [client]\n\n[mysql]\n\n[mysqld]" /etc/mysql/my.cnf && \

|

||||

sed -i -e "s/\(\[client\]\)/\1\ndefault-character-set = utf8/g" /etc/mysql/my.cnf && \

|

||||

sed -i -e "s/\(\[mysql\]\)/\1\ndefault-character-set = utf8/g" /etc/mysql/my.cnf && \

|

||||

sed -i -e "s/\(\[mysqld\]\)/\1\ninit_connect='SET NAMES utf8'\ncharacter-set-server = utf8\ncollation-server=utf8_general_ci\nbind-address = 0.0.0.0/g" /etc/mysql/my.cnf

|

||||

|

||||

|

||||

#9,install nginx

|

||||

RUN apt-get update && \

|

||||

apt-get install -y nginx && \

|

||||

rm -rf /var/lib/apt/lists/* && \

|

||||

echo "\ndaemon off;" >> /etc/nginx/nginx.conf && \

|

||||

chown -R www-data:www-data /var/lib/nginx

|

||||

|

||||

#7,install sudo,python,vim,ping and ssh command

|

||||

#10,modify escheduler configuration file

|

||||

#backend configuration

|

||||

RUN mkdir -p /opt/escheduler && \

|

||||

tar -zxvf /opt/easyscheduler_source/target/escheduler-${tar_version}.tar.gz -C /opt/escheduler && \

|

||||

rm -rf /opt/escheduler/conf

|

||||

ADD ./conf/escheduler/conf /opt/escheduler/conf

|

||||

#frontend nginx configuration

|

||||

ADD ./conf/nginx/default.conf /etc/nginx/conf.d

|

||||

|

||||

#11,open ports

|

||||

EXPOSE 2181 2888 3888 3306 80 12345 8888

|

||||

|

||||

#12,install sudo,python,vim,ping and ssh

|

||||

RUN apt-get update && \

|

||||

apt-get -y install sudo && \

|

||||

apt-get -y install python && \

|

||||

@ -93,44 +132,15 @@ RUN apt-get update && \

|

||||

apt-get -y install python-pip && \

|

||||

pip install kazoo

|

||||

|

||||

#8,add dolphinscheduler source code to /opt/dolphinscheduler_source

|

||||

ADD . /opt/dolphinscheduler_source

|

||||

|

||||

|

||||

#9,backend compilation

|

||||

RUN cd /opt/dolphinscheduler_source && \

|

||||

mvn clean package -Prelease -Dmaven.test.skip=true

|

||||

|

||||

#10,frontend compilation

|

||||

RUN chmod -R 777 /opt/dolphinscheduler_source/dolphinscheduler-ui && \

|

||||

cd /opt/dolphinscheduler_source/dolphinscheduler-ui && \

|

||||

rm -rf /opt/dolphinscheduler_source/dolphinscheduler-ui/node_modules && \

|

||||

npm install node-sass --unsafe-perm && \

|

||||

npm install && \

|

||||

npm run build

|

||||

|

||||

#11,modify dolphinscheduler configuration file

|

||||

#backend configuration

|

||||

RUN tar -zxvf /opt/dolphinscheduler_source/dolphinscheduler-dist/dolphinscheduler-backend/target/apache-dolphinscheduler-incubating-${tar_version}-dolphinscheduler-backend-bin.tar.gz -C /opt && \

|

||||

mv /opt/apache-dolphinscheduler-incubating-${tar_version}-dolphinscheduler-backend-bin /opt/dolphinscheduler && \

|

||||

rm -rf /opt/dolphinscheduler/conf

|

||||

|

||||

ADD ./dockerfile/conf/dolphinscheduler/conf /opt/dolphinscheduler/conf

|

||||

#frontend nginx configuration

|

||||

ADD ./dockerfile/conf/nginx/dolphinscheduler.conf /etc/nginx/conf.d

|

||||

|

||||

#12,open port

|

||||

EXPOSE 2181 2888 3888 3306 80 12345 8888

|

||||

|

||||

COPY ./dockerfile/startup.sh /root/startup.sh

|

||||

#13,modify permissions and set soft links

|

||||

COPY ./startup.sh /root/startup.sh

|

||||

#13,modify permissions and set soft links

|

||||

RUN chmod +x /root/startup.sh && \

|

||||

chmod +x /opt/dolphinscheduler/script/create-dolphinscheduler.sh && \

|

||||

chmod +x /opt/escheduler/script/create_escheduler.sh && \

|

||||

chmod +x /opt/zookeeper/bin/zkServer.sh && \

|

||||

chmod +x /opt/dolphinscheduler/bin/dolphinscheduler-daemon.sh && \

|

||||

chmod +x /opt/escheduler/bin/escheduler-daemon.sh && \

|

||||

rm -rf /bin/sh && \

|

||||

ln -s /bin/bash /bin/sh && \

|

||||

mkdir -p /tmp/xls

|

||||

|

||||

|

||||

ENTRYPOINT ["/root/startup.sh"]

|

||||

ENTRYPOINT ["/root/startup.sh"]

|

||||

|

||||

@ -1,11 +0,0 @@

|

||||

## Build Image

```
cd ..
docker build -t dolphinscheduler --build-arg version=1.1.0 --build-arg tar_version=1.1.0-SNAPSHOT -f dockerfile/Dockerfile .
docker run -p 12345:12345 -p 8888:8888 --rm --name dolphinscheduler -d dolphinscheduler
```

* Visit the url: http://127.0.0.1:8888
* UserName:admin Password:dolphinscheduler123

## Note

* MacOS: The memory of docker needs to be set to 4G, default 2G. Steps: Preferences -> Advanced -> adjust resources -> Apply & Restart

|

||||

@ -1,50 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

#alert type is EMAIL/SMS

|

||||

alert.type=EMAIL

|

||||

|

||||

# mail server configuration

|

||||

mail.protocol=SMTP

|

||||

mail.server.host=smtp.126.com

|

||||

mail.server.port=

|

||||

mail.sender=dolphinscheduler@126.com

|

||||

mail.user=dolphinscheduler@126.com

|

||||

mail.passwd=escheduler123

|

||||

|

||||

# TLS

|

||||

mail.smtp.starttls.enable=false

|

||||

# SSL

|

||||

mail.smtp.ssl.enable=true

|

||||

mail.smtp.ssl.trust=smtp.126.com

|

||||

|

||||

# xls file path; create it if it does not exist

|

||||

xls.file.path=/tmp/xls

|

||||

|

||||

# Enterprise WeChat configuration

|

||||

enterprise.wechat.enable=false

|

||||

enterprise.wechat.corp.id=xxxxxxx

|

||||

enterprise.wechat.secret=xxxxxxx

|

||||

enterprise.wechat.agent.id=xxxxxxx

|

||||

enterprise.wechat.users=xxxxxxx

|

||||

enterprise.wechat.token.url=https://qyapi.weixin.qq.com/cgi-bin/gettoken?corpid=$corpId&corpsecret=$secret

|

||||

enterprise.wechat.push.url=https://qyapi.weixin.qq.com/cgi-bin/message/send?access_token=$token

|

||||

enterprise.wechat.team.send.msg={\"toparty\":\"$toParty\",\"agentid\":\"$agentId\",\"msgtype\":\"text\",\"text\":{\"content\":\"$msg\"},\"safe\":\"0\"}

|

||||

enterprise.wechat.user.send.msg={\"touser\":\"$toUser\",\"agentid\":\"$agentId\",\"msgtype\":\"markdown\",\"markdown\":{\"content\":\"$msg\"}}

|

||||

|

||||

|

||||

|

||||

@ -1,49 +0,0 @@

|

||||

<?xml version="1.0" encoding="UTF-8" ?>

|

||||

<!--

|

||||

~ Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

~ contributor license agreements. See the NOTICE file distributed with

|

||||

~ this work for additional information regarding copyright ownership.

|

||||

~ The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

~ (the "License"); you may not use this file except in compliance with

|

||||

~ the License. You may obtain a copy of the License at

|

||||

~

|

||||

~ http://www.apache.org/licenses/LICENSE-2.0

|

||||

~

|

||||

~ Unless required by applicable law or agreed to in writing, software

|

||||

~ distributed under the License is distributed on an "AS IS" BASIS,

|

||||

~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

~ See the License for the specific language governing permissions and

|

||||

~ limitations under the License.

|

||||

-->

|

||||

|

||||

<!-- Logback configuration. See http://logback.qos.ch/manual/index.html -->

|

||||

<configuration scan="true" scanPeriod="120 seconds"> <!--debug="true" -->

|

||||

<property name="log.base" value="logs" />

|

||||

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

|

||||

<encoder>

|

||||

<pattern>

|

||||

[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

</appender>

|

||||

|

||||

<appender name="ALERTLOGFILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

|

||||

<file>${log.base}/dolphinscheduler-alert.log</file>

|

||||

<rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">

|

||||

<fileNamePattern>${log.base}/dolphinscheduler-alert.%d{yyyy-MM-dd_HH}.%i.log</fileNamePattern>

|

||||

<maxHistory>20</maxHistory>

|

||||

<maxFileSize>64MB</maxFileSize>

|

||||

</rollingPolicy>

|

||||

<encoder>

|

||||

<pattern>

|

||||

[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

</appender>

|

||||

|

||||

<root level="INFO">

|

||||

<appender-ref ref="ALERTLOGFILE"/>

|

||||

</root>

|

||||

</configuration>

|

||||

@ -1,40 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

logging.config=classpath:apiserver_logback.xml

|

||||

|

||||

# server port

|

||||

server.port=12345

|

||||

|

||||

# session config

|

||||

server.servlet.session.timeout=7200

|

||||

|

||||

server.servlet.context-path=/dolphinscheduler/

|

||||

|

||||

# file size limit for upload

|

||||

spring.servlet.multipart.max-file-size=1024MB

|

||||

spring.servlet.multipart.max-request-size=1024MB

|

||||

|

||||

#post content

|

||||

server.jetty.max-http-post-size=5000000

|

||||

|

||||

spring.messages.encoding=UTF-8

|

||||

|

||||

# i18n classpath folder; file prefix is "messages". If there are multiple files, use "," as the separator

|

||||

spring.messages.basename=i18n/messages

|

||||

|

||||

|

||||

@ -1,80 +0,0 @@

|

||||

<?xml version="1.0" encoding="UTF-8" ?>

|

||||

<!--

|

||||

~ Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

~ contributor license agreements. See the NOTICE file distributed with

|

||||

~ this work for additional information regarding copyright ownership.

|

||||

~ The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

~ (the "License"); you may not use this file except in compliance with

|

||||

~ the License. You may obtain a copy of the License at

|

||||

~

|

||||

~ http://www.apache.org/licenses/LICENSE-2.0

|

||||

~

|

||||

~ Unless required by applicable law or agreed to in writing, software

|

||||

~ distributed under the License is distributed on an "AS IS" BASIS,

|

||||

~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

~ See the License for the specific language governing permissions and

|

||||

~ limitations under the License.

|

||||

-->

|

||||

|

||||

<!-- Logback configuration. See http://logback.qos.ch/manual/index.html -->

|

||||

<configuration scan="true" scanPeriod="120 seconds">

|

||||

<property name="log.base" value="logs"/>

|

||||

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

|

||||

<encoder>

|

||||

<pattern>

|

||||

%highlight([%level]) %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{10}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

</appender>

|

||||

<appender name="TASKLOGFILE" class="ch.qos.logback.classic.sift.SiftingAppender">

|

||||

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

|

||||

<level>INFO</level>

|

||||

</filter>

|

||||

<filter class="org.apache.dolphinscheduler.server.worker.log.TaskLogFilter"></filter>

|

||||

<Discriminator class="org.apache.dolphinscheduler.server.worker.log.TaskLogDiscriminator">

|

||||

<key>taskAppId</key>

|

||||

<logBase>${log.base}</logBase>

|

||||

</Discriminator>

|

||||

<sift>

|

||||

<appender name="FILE-${taskAppId}" class="ch.qos.logback.core.FileAppender">

|

||||

<file>${log.base}/${taskAppId}.log</file>

|

||||

<encoder>

|

||||

<pattern>

|

||||

[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

<append>true</append>

|

||||

</appender>

|

||||

</sift>

|

||||

</appender>

|

||||

|

||||

<appender name="COMBINEDLOGFILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

|

||||

<file>${log.base}/dolphinscheduler-combined.log</file>

|

||||

<filter class="org.apache.dolphinscheduler.server.worker.log.WorkerLogFilter">

|

||||

<level>INFO</level>

|

||||

</filter>

|

||||

|

||||

<rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">

|

||||

<fileNamePattern>${log.base}/dolphinscheduler-combined.%d{yyyy-MM-dd_HH}.%i.log</fileNamePattern>

|

||||

<maxHistory>168</maxHistory>

|

||||

<maxFileSize>200MB</maxFileSize>

|

||||

</rollingPolicy>

|

||||

|

||||

<encoder>

|

||||

<pattern>

|

||||

[%level] %date{yyyy-MM-dd HH:mm:ss.SSS} %logger{96}:[%line] - %msg%n

|

||||

</pattern>

|

||||

<charset>UTF-8</charset>

|

||||

</encoder>

|

||||

|

||||

</appender>

|

||||

|

||||

|

||||

<root level="INFO">

|

||||

<appender-ref ref="STDOUT"/>

|

||||

<appender-ref ref="TASKLOGFILE"/>

|

||||

<appender-ref ref="COMBINEDLOGFILE"/>

|

||||

</root>

|

||||

</configuration>

|

||||

@ -1,35 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

# HA or single namenode. For namenode HA, copy core-site.xml and hdfs-site.xml

|

||||

# to the conf directory. S3 is also supported, for example: s3a://dolphinscheduler

|

||||

fs.defaultFS=hdfs://mycluster:8020

|

||||

|

||||

# required for S3: S3 endpoint

|

||||

fs.s3a.endpoint=http://192.168.199.91:9010

|

||||

|

||||

# required for S3: S3 access key

|

||||

fs.s3a.access.key=A3DXS30FO22544RE

|

||||

|

||||

# required for S3: S3 secret key

|

||||

fs.s3a.secret.key=OloCLq3n+8+sdPHUhJ21XrSxTC+JK

|

||||

|

||||

# resourcemanager HA: list the resourcemanager IPs here; leave empty for a single resourcemanager

|

||||

yarn.resourcemanager.ha.rm.ids=192.168.xx.xx,192.168.xx.xx

|

||||

|

||||

# If it is a single resourcemanager, you only need to configure one host name. If it is resourcemanager HA, the default configuration is fine

|

||||

yarn.application.status.address=http://ark1:8088/ws/v1/cluster/apps/%s

|

||||

@ -1,20 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

installPath=/data1_1T/dolphinscheduler

|

||||

deployUser=dolphinscheduler

|

||||

ips=ark0,ark1,ark2,ark3,ark4

|

||||

@ -1,21 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

masters=ark0,ark1

|

||||

workers=ark2,ark3,ark4

|

||||

alertServer=ark3

|

||||

apiServers=ark1

|

||||

@ -1,20 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

export PYTHON_HOME=/usr/bin/python

|

||||

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

|

||||

export PATH=$PYTHON_HOME:$JAVA_HOME/bin:$PATH

|

||||

@ -1,20 +0,0 @@

|

||||

#

|

||||

# Licensed to the Apache Software Foundation (ASF) under one or more

|

||||

# contributor license agreements. See the NOTICE file distributed with

|

||||

# this work for additional information regarding copyright ownership.

|

||||

# The ASF licenses this file to You under the Apache License, Version 2.0

|

||||

# (the "License"); you may not use this file except in compliance with

|

||||

# the License. You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

export PYTHON_HOME=/usr/bin/python

|

||||

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

|

||||

export PATH=$PYTHON_HOME:$JAVA_HOME/bin:$PATH

|

||||

@ -1,17 +0,0 @@

|

||||

<#--

|

||||