mirror of https://gitee.com/gokins/gokins.git, synced 2024-11-29 09:47:37 +08:00

feat: v1.0.0

parent 612a9abee1

commit 74eccd2991

151

.gitignore

vendored

@ -1,135 +1,24 @@

|

||||

# https://github.com/github/gitignore/blob/master/Java.gitignore

|

||||

# Binaries for programs and plugins

|

||||

*.exe

|

||||

*.exe~

|

||||

*.dll

|

||||

*.so

|

||||

*.dylib

|

||||

|

||||

*.dat

|

||||

*.class

|

||||

/ruis.go

|

||||

|

||||

# Mobile Tools for Java (J2ME)

|

||||

.mtj.tmp/

|

||||

|

||||

# Package Files #

|

||||

#*.jar

|

||||

*.war

|

||||

*.ear

|

||||

*.log

|

||||

*.log.*

|

||||

|

||||

# virtual machine crash logs, see http://www.java.com/en/download/help/error_hotspot.xml

|

||||

hs_err_pid*

|

||||

|

||||

/.project

|

||||

|

||||

|

||||

# https://github.com/github/gitignore/blob/master/Maven.gitignore

|

||||

target/

|

||||

pom.xml.tag

|

||||

pom.xml.releaseBackup

|

||||

pom.xml.versionsBackup

|

||||

pom.xml.next

|

||||

release.properties

|

||||

dependency-reduced-pom.xml

|

||||

buildNumber.properties

|

||||

.mvn/timing.properties

|

||||

|

||||

|

||||

# https://github.com/github/gitignore/blob/master/Global/Eclipse.gitignore

|

||||

|

||||

.metadata

|

||||

tmp/

|

||||

*.tmp

|

||||

# Test binary, built with `go test -c`

|

||||

/.idea

|

||||

/.github

|

||||

/dbm.bat

|

||||

/dbm.sh

|

||||

/model.yml

|

||||

/install.html

|

||||

*.test

|

||||

*.bak

|

||||

*.swp

|

||||

*~.nib

|

||||

local.properties

|

||||

.settings/

|

||||

.loadpath

|

||||

.recommenders

|

||||

|

||||

# Eclipse Core

|

||||

.project

|

||||

|

||||

# External tool builders

|

||||

.externalToolBuilders/

|

||||

|

||||

# Locally stored "Eclipse launch configurations"

|

||||

*.launch

|

||||

|

||||

# PyDev specific (Python IDE for Eclipse)

|

||||

*.pydevproject

|

||||

|

||||

# CDT-specific (C/C++ Development Tooling)

|

||||

.cproject

|

||||

|

||||

# JDT-specific (Eclipse Java Development Tools)

|

||||

.classpath

|

||||

|

||||

# Java annotation processor (APT)

|

||||

.factorypath

|

||||

|

||||

# PDT-specific (PHP Development Tools)

|

||||

.buildpath

|

||||

|

||||

# sbteclipse plugin

|

||||

.target

|

||||

|

||||

# Tern plugin

|

||||

.tern-project

|

||||

|

||||

# TeXlipse plugin

|

||||

.texlipse

|

||||

|

||||

# STS (Spring Tool Suite)

|

||||

.springBeans

|

||||

|

||||

# Code Recommenders

|

||||

.recommenders/

|

||||

|

||||

|

||||

# https://github.com/github/gitignore/blob/master/Global/JetBrains.gitignore

|

||||

|

||||

# Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and Webstorm

|

||||

# Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

|

||||

|

||||

## File-based project format:

|

||||

*.iws

|

||||

|

||||

## Plugin-specific files:

|

||||

|

||||

# IntelliJ

|

||||

out/

|

||||

|

||||

# mpeltonen/sbt-idea plugin

|

||||

.idea_modules/

|

||||

|

||||

# JIRA plugin

|

||||

atlassian-ide-plugin.xml

|

||||

|

||||

# Crashlytics plugin (for Android Studio and IntelliJ)

|

||||

com_crashlytics_export_strings.xml

|

||||

crashlytics.properties

|

||||

crashlytics-build.properties

|

||||

fabric.properties

|

||||

|

||||

|

||||

# zeroturnaround

|

||||

rebel.xml

|

||||

|

||||

|

||||

# log

|

||||

log/

|

||||

logs/

|

||||

~$*.*

|

||||

|

||||

|

||||

# IntelliJ IDEA

|

||||

*.iml

|

||||

.idea/

|

||||

.gradle/

|

||||

cmake-build-*/

|

||||

|

||||

# Visual Studio

|

||||

.vs/

|

||||

# Output of the go coverage tool, specifically when used with LiteIDE

|

||||

*.out

|

||||

bin/

|

||||

obj/

|

||||

CMakeFiles/

|

||||

resource/*.o

|

||||

|

||||

# Dependency directories (remove the comment below to include it)

|

||||

# vendor/

|

||||

__debug_bin

|

||||

|

||||

22

Dockerfile

@ -1,22 +0,0 @@

## Thanks to lockp111 for providing the Dockerfile

FROM golang:1.15.2-alpine AS builder

RUN apk add git gcc libc-dev && git clone https://github.com/mgr9525/gokins.git /build
WORKDIR /build
RUN GOOS=linux GOARCH=amd64 go build -o bin/gokins main.go


FROM alpine:latest AS final

RUN apk update \
    && apk upgrade \
    && apk --no-cache add openssl \
    && apk --no-cache add ca-certificates \
    && rm -rf /var/cache/apk \
    && mkdir -p /app

COPY --from=builder /build/bin/gokins /app
WORKDIR /app
ENTRYPOINT ["/app/gokins"]

|

||||

|

||||

215

LICENSE

@ -1,202 +1,21 @@

|

||||

MIT License

|

||||

|

||||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

Copyright (c) 2021 gokins-main

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

1. Definitions.

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or

|

||||

Object form, made available under the License, as indicated by a

|

||||

copyright notice that is included in or attached to the work

|

||||

(an example is provided in the Appendix below).

|

||||

|

||||

"Derivative Works" shall mean any work, whether in Source or Object

|

||||

form, that is based on (or derived from) the Work and for which the

|

||||

editorial revisions, annotations, elaborations, or other modifications

|

||||

represent, as a whole, an original work of authorship. For the purposes

|

||||

of this License, Derivative Works shall not include works that remain

|

||||

separable from, or merely link (or bind by name) to the interfaces of,

|

||||

the Work and Derivative Works thereof.

|

||||

|

||||

"Contribution" shall mean any work of authorship, including

|

||||

the original version of the Work and any modifications or additions

|

||||

to that Work or Derivative Works thereof, that is intentionally

|

||||

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||

or by an individual or Legal Entity authorized to submit on behalf of

|

||||

the copyright owner. For the purposes of this definition, "submitted"

|

||||

means any form of electronic, verbal, or written communication sent

|

||||

to the Licensor or its representatives, including but not limited to

|

||||

communication on electronic mailing lists, source code control systems,

|

||||

and issue tracking systems that are managed by, or on behalf of, the

|

||||

Licensor for the purpose of discussing and improving the Work, but

|

||||

excluding communication that is conspicuously marked or otherwise

|

||||

designated in writing by the copyright owner as "Not a Contribution."

|

||||

|

||||

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||

on behalf of whom a Contribution has been received by Licensor and

|

||||

subsequently incorporated within the Work.

|

||||

|

||||

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

copyright license to reproduce, prepare Derivative Works of,

|

||||

publicly display, publicly perform, sublicense, and distribute the

|

||||

Work and such Derivative Works in Source or Object form.

|

||||

|

||||

3. Grant of Patent License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

(except as stated in this section) patent license to make, have made,

|

||||

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||

where such license applies only to those patent claims licensable

|

||||

by such Contributor that are necessarily infringed by their

|

||||

Contribution(s) alone or by combination of their Contribution(s)

|

||||

with the Work to which such Contribution(s) was submitted. If You

|

||||

institute patent litigation against any entity (including a

|

||||

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||

or a Contribution incorporated within the Work constitutes direct

|

||||

or contributory patent infringement, then any patent licenses

|

||||

granted to You under this License for that Work shall terminate

|

||||

as of the date such litigation is filed.

|

||||

|

||||

4. Redistribution. You may reproduce and distribute copies of the

|

||||

Work or Derivative Works thereof in any medium, with or without

|

||||

modifications, and in Source or Object form, provided that You

|

||||

meet the following conditions:

|

||||

|

||||

(a) You must give any other recipients of the Work or

|

||||

Derivative Works a copy of this License; and

|

||||

|

||||

(b) You must cause any modified files to carry prominent notices

|

||||

stating that You changed the files; and

|

||||

|

||||

(c) You must retain, in the Source form of any Derivative Works

|

||||

that You distribute, all copyright, patent, trademark, and

|

||||

attribution notices from the Source form of the Work,

|

||||

excluding those notices that do not pertain to any part of

|

||||

the Derivative Works; and

|

||||

|

||||

(d) If the Work includes a "NOTICE" text file as part of its

|

||||

distribution, then any Derivative Works that You distribute must

|

||||

include a readable copy of the attribution notices contained

|

||||

within such NOTICE file, excluding those notices that do not

|

||||

pertain to any part of the Derivative Works, in at least one

|

||||

of the following places: within a NOTICE text file distributed

|

||||

as part of the Derivative Works; within the Source form or

|

||||

documentation, if provided along with the Derivative Works; or,

|

||||

within a display generated by the Derivative Works, if and

|

||||

wherever such third-party notices normally appear. The contents

|

||||

of the NOTICE file are for informational purposes only and

|

||||

do not modify the License. You may add Your own attribution

|

||||

notices within Derivative Works that You distribute, alongside

|

||||

or as an addendum to the NOTICE text from the Work, provided

|

||||

that such additional attribution notices cannot be construed

|

||||

as modifying the License.

|

||||

|

||||

You may add Your own copyright statement to Your modifications and

|

||||

may provide additional or different license terms and conditions

|

||||

for use, reproduction, or distribution of Your modifications, or

|

||||

for any such Derivative Works as a whole, provided Your use,

|

||||

reproduction, and distribution of the Work otherwise complies with

|

||||

the conditions stated in this License.

|

||||

|

||||

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||

any Contribution intentionally submitted for inclusion in the Work

|

||||

by You to the Licensor shall be under the terms and conditions of

|

||||

this License, without any additional terms or conditions.

|

||||

Notwithstanding the above, nothing herein shall supersede or modify

|

||||

the terms of any separate license agreement you may have executed

|

||||

with Licensor regarding such Contributions.

|

||||

|

||||

6. Trademarks. This License does not grant permission to use the trade

|

||||

names, trademarks, service marks, or product names of the Licensor,

|

||||

except as required for reasonable and customary use in describing the

|

||||

origin of the Work and reproducing the content of the NOTICE file.

|

||||

|

||||

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||

agreed to in writing, Licensor provides the Work (and each

|

||||

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||

implied, including, without limitation, any warranties or conditions

|

||||

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||

appropriateness of using or redistributing the Work and assume any

|

||||

risks associated with Your exercise of permissions under this License.

|

||||

|

||||

8. Limitation of Liability. In no event and under no legal theory,

|

||||

whether in tort (including negligence), contract, or otherwise,

|

||||

unless required by applicable law (such as deliberate and grossly

|

||||

negligent acts) or agreed to in writing, shall any Contributor be

|

||||

liable to You for damages, including any direct, indirect, special,

|

||||

incidental, or consequential damages of any character arising as a

|

||||

result of this License or out of the use or inability to use the

|

||||

Work (including but not limited to damages for loss of goodwill,

|

||||

work stoppage, computer failure or malfunction, or any and all

|

||||

other commercial damages or losses), even if such Contributor

|

||||

has been advised of the possibility of such damages.

|

||||

|

||||

9. Accepting Warranty or Additional Liability. While redistributing

|

||||

the Work or Derivative Works thereof, You may choose to offer,

|

||||

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||

or other liability obligations and/or rights consistent with this

|

||||

License. However, in accepting such obligations, You may act only

|

||||

on Your own behalf and on Your sole responsibility, not on behalf

|

||||

of any other Contributor, and only if You agree to indemnify,

|

||||

defend, and hold each Contributor harmless for any liability

|

||||

incurred by, or claims asserted against, such Contributor by reason

|

||||

of your accepting any such warranty or additional liability.

|

||||

|

||||

END OF TERMS AND CONDITIONS

|

||||

|

||||

APPENDIX: How to apply the Apache License to your work.

|

||||

|

||||

To apply the Apache License to your work, attach the following

|

||||

boilerplate notice, with the fields enclosed by brackets "[]"

|

||||

replaced with your own identifying information. (Don't include

|

||||

the brackets!) The text should be enclosed in the appropriate

|

||||

comment syntax for the file format. We also recommend that a

|

||||

file or class name and description of purpose be included on the

|

||||

same "printed page" as the copyright notice for easier

|

||||

identification within third-party archives.

|

||||

|

||||

Copyright [yyyy] [name of copyright owner]

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

|

||||

296

README.md

@ -1,257 +1,121 @@

|

||||

<p align="center"><img src="static/logo.jpg" width="50%" height="50%"></p>

|

||||

|

||||

# Gokins: *More Power*

|

||||

Empowering builds, more powerful

|

||||

# Gokins Documentation

|

||||

|

||||

[](https://www.apache.org/licenses/LICENSE-2.0.html)

|

||||

# Gokins: *More Power*

|

||||

|

||||

-------

|

||||

|

||||

## What does it do

|

||||

|

||||

Gokins is a lightweight continuous integration and continuous delivery tool written in Go and Vue.

|

||||

|

||||

|

||||

|

||||

* **Continuous integration and continuous delivery**

|

||||

|

||||

As an extensible automation server, Gokins can be used as a simple CI server or turned into the continuous delivery hub for any project.

|

||||

|

||||

|

||||

|

||||

Gokins is a lightweight continuous integration and continuous delivery tool written in Go and Vue.

|

||||

|

||||

* **Continuous integration and continuous delivery**

|

||||

|

||||

As an extensible automation server, Gokins can be used as a simple CI server or turned into the continuous delivery hub for any project.

|

||||

|

||||

* **Easy installation**

|

||||

|

||||

Gokins is a standalone Go program that runs out of the box on Windows, Mac OS X, and other Unix-like operating systems.

|

||||

|

||||

* **Simple configuration**

|

||||

|

||||

Gokins can be set up and configured easily through its web interface, with almost no difficulty.

|

||||

|

||||

|

||||

Gokins is a standalone Go program that runs out of the box on Windows, Mac OS X, and other Unix-like operating systems.

|

||||

|

||||

|

||||

* **Secure**

|

||||

|

||||

Gokins never collects any user or server information; it is an independent, secure service.

|

||||

|

||||

|

||||

Gokins never collects any user or server information; it is an independent, secure service.

|

||||

|

||||

## Gokins official website

|

||||

|

||||

**URL: http://gokins.cn**

|

||||

**URL: http://gokins.cn**

|

||||

|

||||

Version 1.0 of Gokins is currently being refactored.

|

||||

The latest Gokins news is available on the official website.

|

||||

|

||||

Version 1.0 will offer more features than the current version and be simpler to use.

|

||||

|

||||

|

||||

## Demo

|

||||

|

||||

**Demo URL: http://demo.gokins.cn:8030**

|

||||

|

||||

|

||||

|

||||

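1. This demo is for demonstration purposes only (login password: 123456)
2. A Gokins timer __rebuilds__ this demo in the early hours of every day
3. If you cannot get in, someone else has broken it; please wait for the rebuild (come back early the next day)
4. The demo provides `git`, `gcc`, `golang`, `java8`, and `maven` environments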

|

||||

|

||||

|

||||

|

||||

## Gokins Demo

|

||||

http://gokins.cn:8030

|

||||

```

|

||||

Username: guest
Password: 123456

|

||||

```

|

||||

|

||||

## Quick Start

|

||||

|

||||

It is super easy to get started with your first project.

|

||||

|

||||

|

||||

#### Step 1: Download
#### Step 1: Prepare the environment

|

||||

|

||||

[latest stable release](https://github.com/mgr9525/gokins/releases).

|

||||

- MySQL
- Docker (optional)

|

||||

|

||||

#### Step 2: Start the service
#### Step 2: Download

|

||||

- Linux download: http://bin.gokins.cn/gokins-linux-amd64
- Mac download: http://bin.gokins.cn/gokins-darwin-amd64
> We recommend installing Gokins with Docker or by downloading a release directly

|

||||

|

||||

#### Step 3: Start the service

|

||||

|

||||

```

|

||||

./gokins

|

||||

```

|

||||

#### Step 3: View the service

|

||||

|

||||

Visit `http://localhost:8030`

|

||||

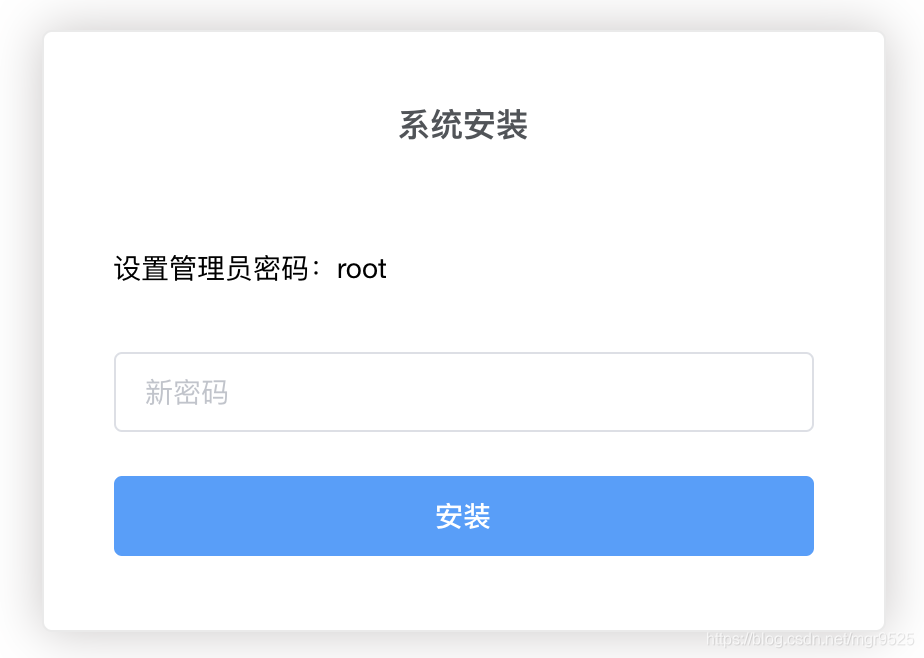

#### Step 3: Install Gokins

|

||||

|

||||

Visit `http://localhost:8030` to open the Gokins installation page

|

||||

|

||||

|

||||

|

||||

Fill in the information as prompted on the page

|

||||

|

||||

Default administrator account and password

|

||||

|

||||

`username: gokins`

|

||||

|

||||

`pwd: 123456`

|

||||

|

||||

#### Step 4: Create a pipeline

|

||||

|

||||

- Go to the pipeline page

|

||||

|

||||

|

||||

|

||||

|

||||

### Using Gokins

|

||||

|

||||

- Click "New pipeline"

|

||||

|

||||

|

||||

|

||||

|

||||

#### Download and run

|

||||

Fill in the basic pipeline information

|

||||

|

||||

- GitHub: https://github.com/mgr9525/gokins
- Gitee: https://gitee.com/gokins/gokins

|

||||

- Pipeline configuration

|

||||

|

||||

Releases can be found on the corresponding platform

|

||||

|

||||

- Or run the following commands directly on the server

|

||||

```

|

||||

version: 1.0
vars:
stages:
  - stage:
    displayName: build
    name: build
    steps:
      - step: shell@sh
        displayName: test-build
        name: build
        env:
        commands:
          - echo Hello World

|

||||

|

||||

```

|

||||

|

||||

//Download the executable

|

||||

wget -c https://github.com/mgr9525/gokins/releases/download/v0.1.2/gokins-linux-amd64

|

||||

For more information about the pipeline configuration YML, see the [YML documentation](http://gokins.cn/%E5%B7%A5%E4%BD%9C%E6%B5%81%E8%AF%AD%E6%B3%95/)

|

||||

|

||||

//Make it executable

|

||||

chmod +x gokins-linux-amd64

|

||||

|

||||

//Run gokins

|

||||

./gokins-linux-amd64

|

||||

- Run the pipeline

|

||||

|

||||

//Show the help command

|

||||

./gokins-linux-amd64 --help

|

||||

|

||||

|

||||

```

|

||||

|

||||

- Once it is running, visit port `8030`

|

||||

`Here you can optionally enter a repository branch or commitSha; if left blank, the default branch is used`

|

||||

|

||||

#### Initial configuration
- Set the root account password

|

||||

- View the run result

|

||||

|

||||

|

||||

|

||||

- After logging in, you can see the main interface

|

||||

|

||||

|

||||

|

||||

#### Using pipelines

|

||||

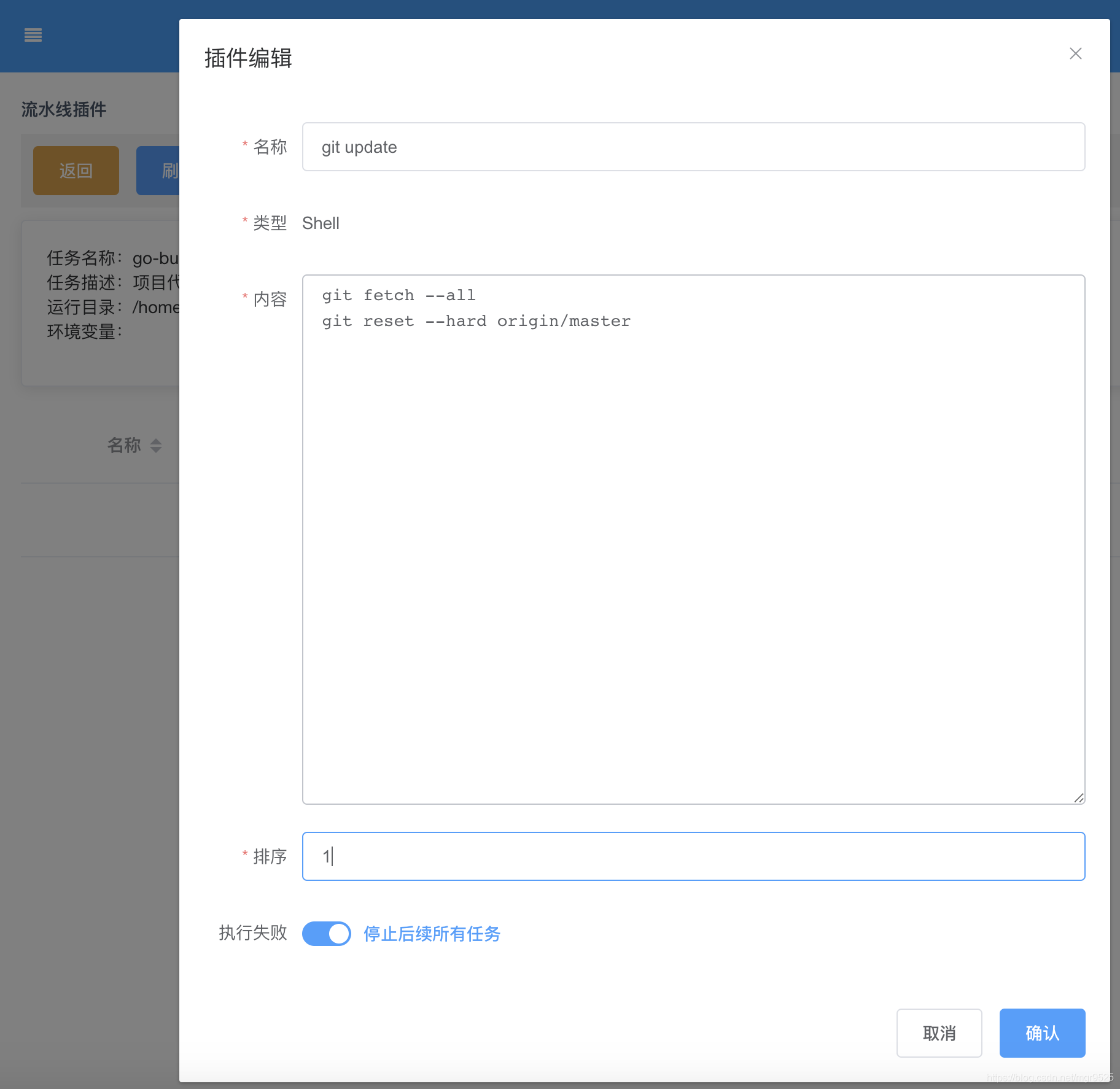

1. Update the git code

|

||||

|

||||

Configure the code directory

|

||||

|

||||

```

|

||||

|

||||

cd ~

|

||||

mkdir programs

|

||||

cd programs

|

||||

git clone http://username:password@git.xxx.cn/IPFS/IPFS-Slave.git

|

||||

cd IPFS-Slave/

|

||||

pwd

|

||||

|

||||

```

|

||||

|

||||

Cloning with a username and password keeps the pipeline from being asked for login credentials when it updates the code

|

||||

|

||||

Copy this directory path; it is needed when creating the pipeline

|

||||

|

||||

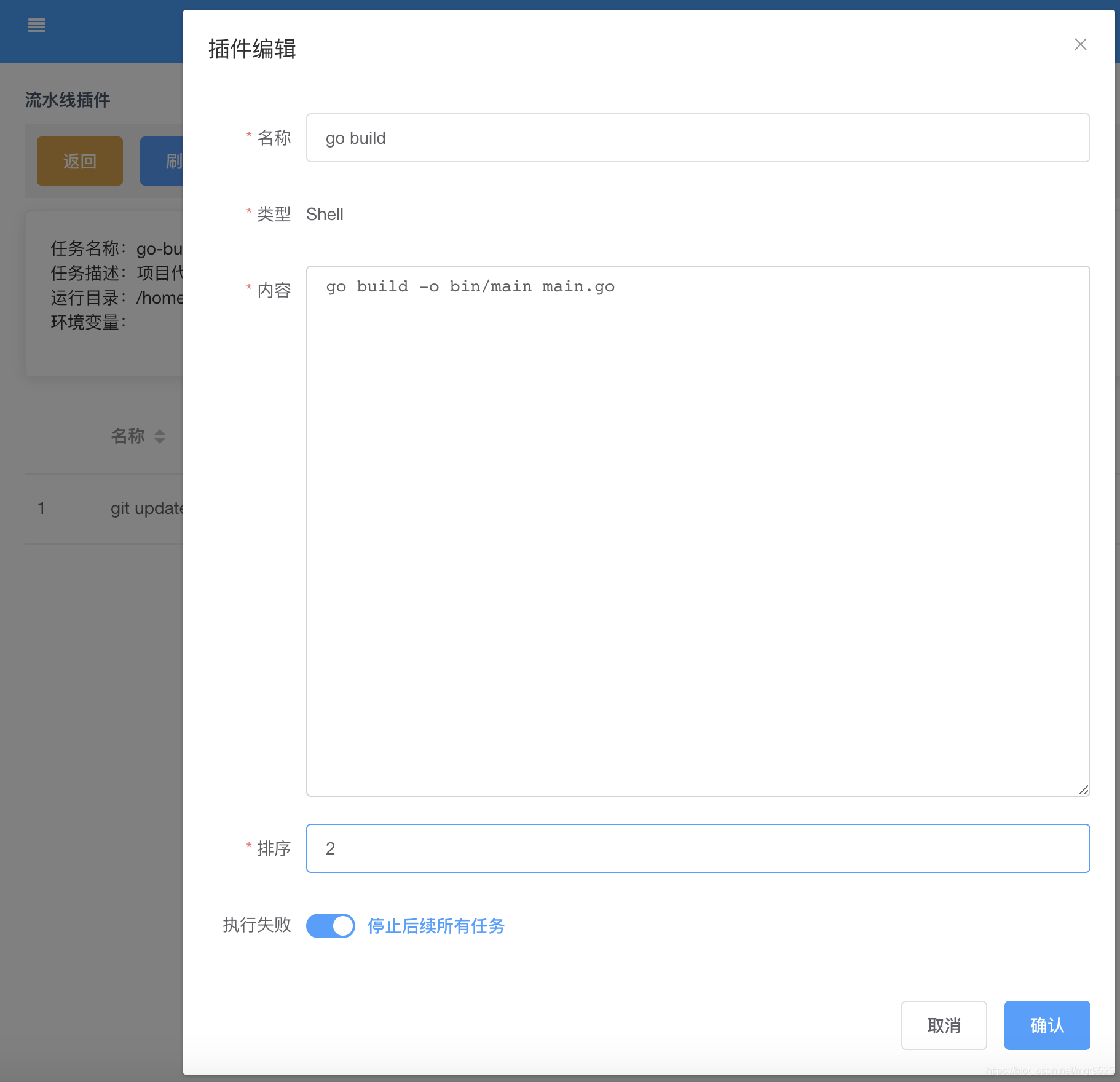

2. Build the pipeline

|

||||

|

||||

|

||||

|

||||

3. Once it is created, open the plugin list and create a plugin

|

||||

|

||||

|

||||

|

||||

|

||||

4. First, create a plugin that updates git

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

5. Then create a build plugin

|

||||

|

||||

|

||||

|

||||

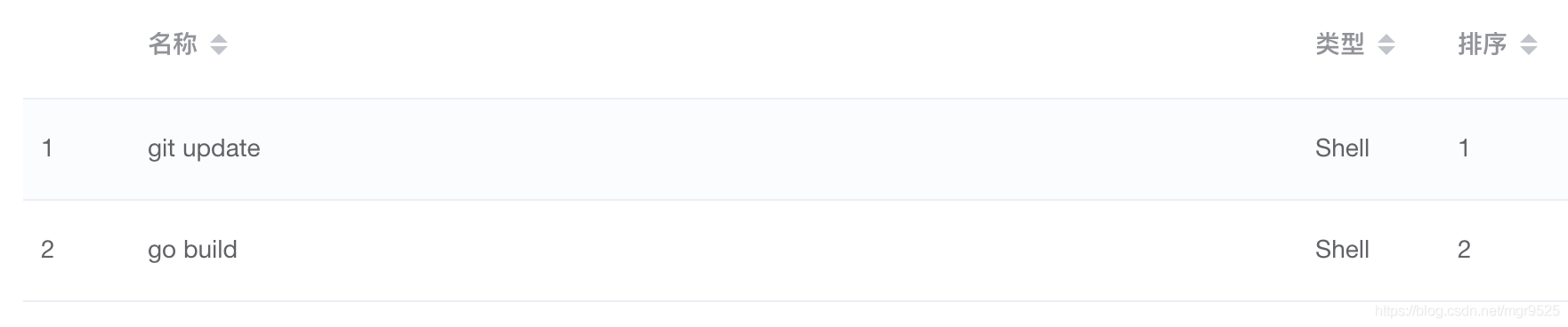

You can now see the two plugins

|

||||

|

||||

|

||||

|

||||

|

||||

6. Go back and run the pipeline

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

- That completes a simple CI pipeline project

|

||||

|

||||

|

||||

|

||||

## Developing Gokins
Gokins is still at a stage where it needs continual improvement. If you are interested in joining us, you can submit a PR or an issue on GitHub.

|

||||

|

||||

|

||||

### Server environment
Server: Ubuntu 18, 64-bit Linux
Required environment: git, golang, node.js

|

||||

|

||||

### Installing the project environment

|

||||

|

||||

1. git
`Requires git 2.17.1 or another 2.x version`

2. node.js
`Requires node 12.19.0`

3. golang
`Requires golang 1.15.2`

|

||||

|

||||

|

||||

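## Advantages and future direction of Gokins
- Small footprint, in both executable size and runtime memory: it uses more than an order of magnitude less memory than Jenkins, and Gokins currently runs in roughly 20kb of memory
- A simple, friendly UI that is easier to get started with
- Customizable plugin configuration that lets you handle a variety of situations
- Secure: it never collects any user or server information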

|

||||

|

||||

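In the future, a simple, easy-to-use CI/CD tool will greatly improve enterprise productivity. Gokins can serve not only as a code delivery tool but also as a core component of automated operations in support of large projects.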

|

||||

|

||||

|

||||

|

||||

## More

|

||||

|

||||

### Help

|

||||

|

||||

```

|

||||

./gokins -h

|

||||

```

|

||||

|

||||

### Upgrade

|

||||

|

||||

Upgrades the database and adds the trigger feature; intended for users who have used Gokins before

|

||||

|

||||

```

|

||||

./gokins -up

|

||||

```

|

||||

|

||||

## Contact

|

||||

|

||||

* Join us on QQ (group: 975316343).

|

||||

|

||||

<p align="center"><img src="static/qq.jpg" width="50%" height="50%"></p>

|

||||

|

||||

## Download

|

||||

|

||||

- [Github Release](https://github.com/mgr9525/gokins/releases)

|

||||

|

||||

|

||||

## Who is using

|

||||

<a href="http://1ydts.com" align="center"><img src="static/whouse/biaotou.jpg" width="50%" height="50%"></a>

|

||||

|

||||

## Changelog

|

||||

|

||||

|

||||

### Gokins V0.2.0 (updated 2020-10-24)
- New features:
1. Added triggering pipelines through gitlab and gitee webhooks
2. Added the ability to run follow-up work after a pipeline finishes

- Bug fixes:
1. Fixed some known issues

|

||||

|

||||

***

|

||||

|

||||

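### Gokins V0.1.2 (updated 2020-10-20)
- New features:
1. Added triggers (including triggering on pipeline logs)
2. Implemented the trigger manager and task
3. Added the upgrade command `./gokins -up` (upgrades the database and adds the trigger feature; intended for users who have used Gokins before)

- Bug fixes:
1. Fixed the frontend hanging on looping requests
2. Fixed a serious goroutine context bug

- Optimizations:
1. Logs are now written to log files instead of the database, reducing database size
2. Improved PATH environment variable handling and added variable retrieval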

|

||||

|

||||

|

||||

|

||||

|

||||

28

bdconts.go

@ -1,28 +0,0 @@

package main

import (
	"encoding/base64"
	"fmt"
	"io/ioutil"
)

func main() {
	bdsqls()
	bdzip()
}

func bdsqls() {
	bts, _ := ioutil.ReadFile("doc/sys.sql")
	ioutil.WriteFile("comm/dbfl.go",
		[]byte(fmt.Sprintf("package comm\n\nconst sqls = `\n%s\n`", string(bts))),
		0644)
	println("sql insert go ok!!!")
}
func bdzip() {
	bts, _ := ioutil.ReadFile("uis/vue-admin/dist/dist.zip")
	cont := base64.StdEncoding.EncodeToString(bts)
	ioutil.WriteFile("comm/vuefl.go",
		[]byte(fmt.Sprintf("package comm\n\nconst StaticPkg = \"%s\"", cont)),
		0644)
	println("ui insert go ok!!!")
}

|

||||
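
The removed `bdconts.go` generator wraps the SQL file in a Go raw string literal, which would stop compiling if the SQL ever contained a backtick (common in MySQL schemas). As a rough sketch of a more defensive variant, the hypothetical helpers below (`genStringConst` and `genBase64Const` are illustrative names, not part of Gokins) build the same kind of generated source in memory and quote the value with `strconv.Quote`. File reading and writing are left out so the example stays self-contained.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"strconv"
)

// genStringConst is a hypothetical helper: it returns Go source that declares
// a string constant. strconv.Quote yields a valid double-quoted Go literal,
// so backticks, quotes, and newlines in value cannot break the output.
func genStringConst(pkg, name, value string) string {
	return fmt.Sprintf("package %s\n\nconst %s = %s\n", pkg, name, strconv.Quote(value))
}

// genBase64Const is a hypothetical helper: it embeds binary data as a
// base64-encoded string constant, as the removed bdzip function did.
func genBase64Const(pkg, name string, data []byte) string {
	return genStringConst(pkg, name, base64.StdEncoding.EncodeToString(data))
}

func main() {
	// Sample inputs are invented for illustration.
	fmt.Print(genStringConst("comm", "sqls", "CREATE TABLE `t` (id INT);"))
	fmt.Print(genBase64Const("comm", "StaticPkg", []byte("zip")))
}
```

On Go 1.16 or later, the standard `embed` package is another way to ship such files inside the binary without a generator step.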

107

bean/condition.go

Normal file

@ -0,0 +1,107 @@

package bean

import (
	"regexp"
	"strings"
)

func skipBranch(c *Condition, branch string) bool {
	return !c.Match(branch)
}
func skipCommitMessages(c *Condition, branch string) bool {
	return !c.Match(branch)
}
func skipCommitNotes(c *Condition, branch string) bool {
	return !c.Match(branch)
}

func (c *Condition) Match(v string) bool {
	if c == nil {
		return false
	}
	if c.Include != nil && c.Exclude != nil {
		return c.Includes(v) && !c.Excludes(v)
	}

	if c.Include != nil && c.Includes(v) {
		return true
	}

	if c.Exclude != nil && !c.Excludes(v) {
		return true
	}

	return false
}

func (c *Condition) Excludes(v string) bool {
	for _, in := range c.Exclude {
		if in == "" {
			continue
		}
		if in == v {
			return true
		}
		if isMatch(v, in) {
			return true
		}
		reg, err := regexp.Compile(in)
		if err != nil {
			return false
		}
		match := reg.Match([]byte(strings.Replace(v, "\n", "", -1)))
		if match {
			return true
		}
	}
	return false
}

func (c *Condition) Includes(v string) bool {
	for _, in := range c.Include {
		if in == "" {
			continue
		}
		if in == v {
			return true
		}
		if isMatch(v, in) {
			return true
		}
		reg, err := regexp.Compile(in)
		if err != nil {
			return false
		}
		match := reg.Match([]byte(strings.Replace(v, "\n", "", -1)))
		if match {
			return true
		}
	}
	return false
}

func isMatch(s string, p string) bool {
	m, n := len(s), len(p)
	dp := make([][]bool, m+1)
	for i := 0; i <= m; i++ {
		dp[i] = make([]bool, n+1)
	}
	dp[0][0] = true
	for i := 1; i <= n; i++ {
		if p[i-1] == '*' {
			dp[0][i] = true
		} else {
			break
		}
	}
	for i := 1; i <= m; i++ {
		for j := 1; j <= n; j++ {
			if p[j-1] == '*' {
				dp[i][j] = dp[i][j-1] || dp[i-1][j]
			} else if s[i-1] == p[j-1] {
				dp[i][j] = dp[i-1][j-1]
			}
		}
	}
	return dp[m][n]
}

|

||||
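
The matching rules in `bean/condition.go` can be tried out in isolation. The sketch below is an approximation under stated assumptions: the `Condition` struct is not shown in this diff, so its `Include` and `Exclude` fields are assumed to be string slices; `wildcardMatch` and `anyMatch` are hypothetical names; and the regular-expression fallback is left out to keep the example short. The dynamic program follows `isMatch`, where `*` matches any run of bytes, including none.

```go
package main

import "fmt"

// condition is an assumed shape for bean.Condition, which this diff does not show.
type condition struct {
	Include []string
	Exclude []string
}

// wildcardMatch reports whether s matches pattern p, where '*' matches any
// run of bytes (including none). dp[i][j] is true when s[:i] matches p[:j].
func wildcardMatch(s, p string) bool {
	m, n := len(s), len(p)
	dp := make([][]bool, m+1)
	for i := range dp {
		dp[i] = make([]bool, n+1)
	}
	dp[0][0] = true
	// Leading stars can match the empty string.
	for j := 1; j <= n && p[j-1] == '*'; j++ {
		dp[0][j] = true
	}
	for i := 1; i <= m; i++ {
		for j := 1; j <= n; j++ {
			if p[j-1] == '*' {
				// Star matches nothing (drop it) or one more byte of s.
				dp[i][j] = dp[i][j-1] || dp[i-1][j]
			} else if s[i-1] == p[j-1] {
				dp[i][j] = dp[i-1][j-1]
			}
		}
	}
	return dp[m][n]
}

// anyMatch reports whether v matches at least one non-empty pattern.
func anyMatch(patterns []string, v string) bool {
	for _, p := range patterns {
		if p != "" && wildcardMatch(v, p) {
			return true
		}
	}
	return false
}

// match follows the same decision order as Condition.Match in the diff.
func (c *condition) match(v string) bool {
	if c == nil {
		return false
	}
	if c.Include != nil && c.Exclude != nil {
		return anyMatch(c.Include, v) && !anyMatch(c.Exclude, v)
	}
	if c.Include != nil && anyMatch(c.Include, v) {
		return true
	}
	if c.Exclude != nil && !anyMatch(c.Exclude, v) {
		return true
	}
	return false
}

func main() {
	c := &condition{Include: []string{"release/*"}, Exclude: []string{"release/tmp*"}}
	for _, b := range []string{"release/1.0", "release/tmp-x", "main"} {
		fmt.Println(b, c.match(b))
	}
}
```

With this sample condition, `release/1.0` matches, while `release/tmp-x` (excluded) and `main` (not included) do not. Under this decision order, a condition with neither list set matches nothing.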

15

bean/db.go

Normal file

@ -0,0 +1,15 @@

package bean

type Page struct {
	Page  int64       `json:"page"`
	Size  int64       `json:"size"`
	Total int64       `json:"total"`
	Pages int64       `json:"pages"`
	Data  interface{} `json:"data"`
}
type PageGen struct {
	SQL       string
	Args      []interface{}
	CountCols string
	FindCols  string
}

|

||||
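
How Gokins fills in `Pages` is not visible in this diff. A common convention, assumed here, is ceiling division of `Total` by `Size`. The sketch below copies the `Page` struct and adds a hypothetical `newPage` helper to show the JSON shape a paginated response would take under that assumption.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Page mirrors the struct from bean/db.go.
type Page struct {
	Page  int64       `json:"page"`
	Size  int64       `json:"size"`
	Total int64       `json:"total"`
	Pages int64       `json:"pages"`
	Data  interface{} `json:"data"`
}

// newPage is a hypothetical helper: it derives Pages by ceiling division
// and leaves it at zero when size is not positive.
func newPage(page, size, total int64, data interface{}) Page {
	var pages int64
	if size > 0 {
		pages = (total + size - 1) / size
	}
	return Page{Page: page, Size: size, Total: total, Pages: pages, Data: data}
}

func main() {
	p := newPage(1, 10, 25, []string{"a", "b"})
	out, err := json.Marshal(p)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

For 25 rows at 10 per page, this yields `"pages":3` in the encoded output.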

18

bean/http.go

Normal file

@ -0,0 +1,18 @@

package bean

type IdsRes struct {
	Id  string `json:"id"`
	Aid int64  `json:"aid"`
}
type LoginReq struct {
	Name string `json:"name"`
	Pass string `json:"pass"`
}
type LoginRes struct {
	Token         string `json:"token"`
	Id            string `json:"id"`
	Name          string `json:"name"`
	Nick          string `json:"nick"`
	Avatar        string `json:"avatar"`
	LastLoginTime string `json:"lastLoginTime"`
}

|

||||
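
The struct tags in `bean/http.go` fix the JSON field names used on the wire. As a small illustration, the sketch below copies `LoginReq` and `LoginRes` and round-trips them with `encoding/json`. The helper names `encodeLogin` and `decodeLoginRes` are hypothetical, the response body is invented sample data, and no endpoint path is assumed because the diff does not show one.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// LoginReq and LoginRes mirror the structs from bean/http.go.
type LoginReq struct {
	Name string `json:"name"`
	Pass string `json:"pass"`
}

type LoginRes struct {
	Token         string `json:"token"`
	Id            string `json:"id"`
	Name          string `json:"name"`
	Nick          string `json:"nick"`
	Avatar        string `json:"avatar"`
	LastLoginTime string `json:"lastLoginTime"`
}

// encodeLogin builds the JSON body for a login request.
func encodeLogin(name, pass string) (string, error) {
	b, err := json.Marshal(LoginReq{Name: name, Pass: pass})
	return string(b), err
}

// decodeLoginRes parses a JSON login response; absent fields stay empty.
func decodeLoginRes(body string) (LoginRes, error) {
	var res LoginRes
	err := json.Unmarshal([]byte(body), &res)
	return res, err
}

func main() {
	body, err := encodeLogin("gokins", "123456")
	if err != nil {
		panic(err)
	}
	fmt.Println(body)

	// Invented sample response, not captured from a real server.
	res, err := decodeLoginRes(`{"token":"example-token","id":"1","name":"gokins"}`)
	if err != nil {
		panic(err)
	}
	fmt.Println(res.Name, res.Token)
}
```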

13

bean/models.go

Normal file

@ -0,0 +1,13 @@

package bean

type PipelineShow struct {
	Id           string `json:"id"`
	Uid          string `json:"uid"`
	Name         string `json:"name"`
	DisplayName  string `json:"displayName"`
	PipelineType string `json:"pipelineType"`
	YmlContent   string `json:"ymlContent"`
	Url          string `json:"url"`
	Username     string `json:"username"`
	AccessToken  string `json:"accessToken"`
}

|

||||

26

bean/pipeline.go

Normal file

@ -0,0 +1,26 @@

package bean

type NewPipeline struct {
	Name        string            `json:"name"`
	DisplayName string            `json:"displayName"`
	Content     string            `json:"content"`
	OrgId       string            `json:"orgId"`
	AccessToken string            `json:"accessToken"`
	Url         string            `json:"url"`
	Username    string            `json:"username"`
	Vars        []*NewPipelineVar `json:"vars"`
}

type NewPipelineVar struct {
	Name    string `json:"name"`
	Value   string `json:"value"`
	Remarks string `json:"remarks"`
	Public  bool   `json:"public"`
}

func (p *NewPipeline) Check() bool {
	if p.Name == "" || p.Content == "" {
		return false
	}
	return true
}

|

||||
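
`NewPipeline.Check` only requires a non-empty `Name` and `Content`. The sketch below shows one way a handler might combine JSON decoding with that check. The function `parseNewPipeline` and the error value `errInvalidPipeline` are hypothetical and not part of this commit; the structs are copied from `bean/pipeline.go` with unused fields kept for fidelity.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// NewPipelineVar and NewPipeline mirror the structs from bean/pipeline.go.
type NewPipelineVar struct {
	Name    string `json:"name"`
	Value   string `json:"value"`
	Remarks string `json:"remarks"`
	Public  bool   `json:"public"`
}

type NewPipeline struct {
	Name        string            `json:"name"`
	DisplayName string            `json:"displayName"`
	Content     string            `json:"content"`
	OrgId       string            `json:"orgId"`
	AccessToken string            `json:"accessToken"`
	Url         string            `json:"url"`
	Username    string            `json:"username"`
	Vars        []*NewPipelineVar `json:"vars"`
}

// Check has the same behavior as the method in the diff.
func (p *NewPipeline) Check() bool {
	return p.Name != "" && p.Content != ""
}

// errInvalidPipeline is a hypothetical sentinel error for failed validation.
var errInvalidPipeline = errors.New("pipeline needs a name and content")

// parseNewPipeline is a hypothetical helper: decode the body, then validate.
func parseNewPipeline(body []byte) (*NewPipeline, error) {
	p := &NewPipeline{}
	if err := json.Unmarshal(body, p); err != nil {
		return nil, err
	}
	if !p.Check() {
		return nil, errInvalidPipeline
	}
	return p, nil
}

func main() {
	// Invented sample request bodies.
	good := []byte(`{"name":"build","content":"version: 1.0","vars":[{"name":"ENV","value":"dev","public":true}]}`)
	if p, err := parseNewPipeline(good); err == nil {
		fmt.Println("accepted:", p.Name, "vars:", len(p.Vars))
	}
	if _, err := parseNewPipeline([]byte(`{"name":"build"}`)); err != nil {
		fmt.Println("rejected:", err)
	}
}
```

Validating right after decoding keeps an incomplete request from reaching storage; stricter checks (for example on `Url`) would be a design choice the diff does not settle.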

10

bean/pipelinevar.go

Normal file

@ -0,0 +1,10 @@

package bean

type PipelineVar struct {
	Aid        int64  `json:"aid"`
	PipelineId string `json:"pipelineId"`
	Name       string `json:"name"`
	Value      string `json:"value"`
	Remarks    string `json:"remarks"`
	Public     bool   `json:"public"`
}

18

bean/runtime.go

Normal file

@ -0,0 +1,18 @@

package bean

import "time"

type LogOutJson struct {
	Id      string    `json:"id"`
	Content string    `json:"content"`
	Times   time.Time `json:"times"`
	Errs    bool      `json:"errs"`
}
type LogOutJsonRes struct {
	Id      string    `json:"id"`
	Content string    `json:"content"`
	Times   time.Time `json:"times"`
	Errs    bool      `json:"errs"`

	Offset int64 `json:"offset"`
}
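`LogOutJsonRes` repeats every field of `LogOutJson` and adds `Offset`. As a sketch of one possible alternative (not what the commit does), the variant below embeds `LogOutJson` instead; `encoding/json` promotes the fields of an embedded struct, so both shapes should serialize to the same JSON. The type `LogOutJsonResEmbedded`, the helper `encodeBoth`, and the sample values are assumptions made for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Copies of the structs in bean/runtime.go.
type LogOutJson struct {
	Id      string    `json:"id"`
	Content string    `json:"content"`
	Times   time.Time `json:"times"`
	Errs    bool      `json:"errs"`
}

type LogOutJsonRes struct {
	Id      string    `json:"id"`
	Content string    `json:"content"`
	Times   time.Time `json:"times"`
	Errs    bool      `json:"errs"`

	Offset int64 `json:"offset"`
}

// LogOutJsonResEmbedded is a hypothetical variant that embeds LogOutJson
// rather than repeating its fields.
type LogOutJsonResEmbedded struct {
	LogOutJson

	Offset int64 `json:"offset"`
}

// encodeBoth marshals the flat and the embedded variant with identical
// sample values and returns both JSON strings for comparison.
func encodeBoth() (string, string, error) {
	ts := time.Date(2021, 5, 1, 10, 0, 0, 0, time.UTC)
	base := LogOutJson{Id: "1", Content: "hello", Times: ts, Errs: false}

	flat, err := json.Marshal(LogOutJsonRes{
		Id: base.Id, Content: base.Content, Times: base.Times, Errs: base.Errs, Offset: 42,
	})
	if err != nil {
		return "", "", err
	}
	emb, err := json.Marshal(LogOutJsonResEmbedded{LogOutJson: base, Offset: 42})
	if err != nil {
		return "", "", err
	}
	return string(flat), string(emb), nil
}

func main() {
	flat, emb, err := encodeBoth()
	if err != nil {
		panic(err)
	}
	fmt.Println(flat)
	fmt.Println(flat == emb)
}
```

The flat form in the commit keeps the two types independent, which may be preferable if they are expected to diverge later.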

121

bean/thirdbean/gitea.go

Normal file

@ -0,0 +1,121 @@

|

||||

package thirdbean

|

||||

|

||||

import "time"

|

||||

|

||||

type ResultGiteaRepo struct {

|

||||

Id int `json:"id"`

|

||||

Owner struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

FullName string `json:"full_name"`

|

||||

Email string `json:"email"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Language string `json:"language"`

|

||||

IsAdmin bool `json:"is_admin"`

|

||||

LastLogin time.Time `json:"last_login"`

|

||||

Created time.Time `json:"created"`

|

||||

Restricted bool `json:"restricted"`

|

||||

Active bool `json:"active"`

|

||||

ProhibitLogin bool `json:"prohibit_login"`

|

||||

Location string `json:"location"`

|

||||

Website string `json:"website"`

|

||||

Description string `json:"description"`

|

||||

Username string `json:"username"`

|

||||

} `json:"owner"`

|

||||

Name string `json:"name"`

|

||||

FullName string `json:"full_name"`

|

||||

Description string `json:"description"`

|

||||

Empty bool `json:"empty"`

|

||||

Private bool `json:"private"`

|

||||

Fork bool `json:"fork"`

|

||||

Template bool `json:"template"`

|

||||

Parent interface{} `json:"parent"`

|

||||

Mirror bool `json:"mirror"`

|

||||

Size int `json:"size"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

SshUrl string `json:"ssh_url"`

|

||||

CloneUrl string `json:"clone_url"`

|

||||

OriginalUrl string `json:"original_url"`

|

||||

Website string `json:"website"`

|

||||

StarsCount int `json:"stars_count"`

|

||||

ForksCount int `json:"forks_count"`

|

||||

WatchersCount int `json:"watchers_count"`

|

||||

OpenIssuesCount int `json:"open_issues_count"`

|

||||

OpenPrCounter int `json:"open_pr_counter"`

|

||||

ReleaseCounter int `json:"release_counter"`

|

||||

DefaultBranch string `json:"default_branch"`

|

||||

Archived bool `json:"archived"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

UpdatedAt time.Time `json:"updated_at"`

|

||||

Permissions struct {

|

||||

Admin bool `json:"admin"`

|

||||

Push bool `json:"push"`

|

||||

Pull bool `json:"pull"`

|

||||

} `json:"permissions"`

|

||||

HasIssues bool `json:"has_issues"`

|

||||

InternalTracker struct {

|

||||

EnableTimeTracker bool `json:"enable_time_tracker"`

|

||||

AllowOnlyContributorsToTrackTime bool `json:"allow_only_contributors_to_track_time"`

|

||||

EnableIssueDependencies bool `json:"enable_issue_dependencies"`

|

||||

} `json:"internal_tracker"`

|

||||

HasWiki bool `json:"has_wiki"`

|

||||

HasPullRequests bool `json:"has_pull_requests"`

|

||||

HasProjects bool `json:"has_projects"`

|

||||

IgnoreWhitespaceConflicts bool `json:"ignore_whitespace_conflicts"`

|

||||

AllowMergeCommits bool `json:"allow_merge_commits"`

|

||||

AllowRebase bool `json:"allow_rebase"`

|

||||

AllowRebaseExplicit bool `json:"allow_rebase_explicit"`

|

||||

AllowSquashMerge bool `json:"allow_squash_merge"`

|

||||

DefaultMergeStyle string `json:"default_merge_style"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Internal bool `json:"internal"`

|

||||

MirrorInterval string `json:"mirror_interval"`

|

||||

}

|

||||

type ResultGiteaRepoBranch struct {

|

||||

Name string `json:"name"`

|

||||

Commit struct {

|

||||

Id string `json:"id"`

|

||||

Message string `json:"message"`

|

||||

Url string `json:"url"`

|

||||

Author struct {

|

||||

Name string `json:"name"`

|

||||

Email string `json:"email"`

|

||||

Username string `json:"username"`

|

||||

} `json:"author"`

|

||||

Committer struct {

|

||||

Name string `json:"name"`

|

||||

Email string `json:"email"`

|

||||

Username string `json:"username"`

|

||||

} `json:"committer"`

|

||||

Verification struct {

|

||||

Verified bool `json:"verified"`

|

||||

Reason string `json:"reason"`

|

||||

Signature string `json:"signature"`

|

||||

Signer interface{} `json:"signer"`

|

||||

Payload string `json:"payload"`

|

||||

} `json:"verification"`

|

||||

Timestamp time.Time `json:"timestamp"`

|

||||

Added interface{} `json:"added"`

|

||||

Removed interface{} `json:"removed"`

|

||||

Modified interface{} `json:"modified"`

|

||||

} `json:"commit"`

|

||||

Protected bool `json:"protected"`

|

||||

RequiredApprovals int `json:"required_approvals"`

|

||||

EnableStatusCheck bool `json:"enable_status_check"`

|

||||

StatusCheckContexts []interface{} `json:"status_check_contexts"`

|

||||

UserCanPush bool `json:"user_can_push"`

|

||||

UserCanMerge bool `json:"user_can_merge"`

|

||||

EffectiveBranchProtectionName string `json:"effective_branch_protection_name"`

|

||||

}

|

||||

type ResultGetGiteaHook struct {

|

||||

Id int `json:"id"`

|

||||

Type string `json:"type"`

|

||||

Config struct {

|

||||

ContentType string `json:"content_type"`

|

||||

Url string `json:"url"`

|

||||

} `json:"config"`

|

||||

Events []string `json:"events"`

|

||||

Active bool `json:"active"`

|

||||

UpdatedAt time.Time `json:"updated_at"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

}

|

||||
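As a rough usage sketch, `ResultGetGiteaHook` can be filled with `json.Unmarshal`. The struct is copied from bean/thirdbean/gitea.go; the payload `sampleHook` and the helper `decodeHook` are made up for illustration and are not taken from the commit or from a real Gitea response. The `time.Time` fields expect RFC 3339 timestamps.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Copy of the struct in bean/thirdbean/gitea.go.
type ResultGetGiteaHook struct {
	Id     int    `json:"id"`
	Type   string `json:"type"`
	Config struct {
		ContentType string `json:"content_type"`
		Url         string `json:"url"`
	} `json:"config"`
	Events    []string  `json:"events"`
	Active    bool      `json:"active"`
	UpdatedAt time.Time `json:"updated_at"`
	CreatedAt time.Time `json:"created_at"`
}

// sampleHook is a hypothetical payload shaped to match the struct above.
const sampleHook = `{
  "id": 7,
  "type": "gitea",
  "config": {"content_type": "json", "url": "https://ci.example.invalid/hook"},
  "events": ["push"],
  "active": true,
  "updated_at": "2021-05-01T10:00:00Z",
  "created_at": "2021-04-30T09:00:00Z"
}`

// decodeHook is a hypothetical helper that unmarshals a hook response body.
func decodeHook(data []byte) (*ResultGetGiteaHook, error) {
	h := &ResultGetGiteaHook{}
	if err := json.Unmarshal(data, h); err != nil {
		return nil, err
	}
	return h, nil
}

func main() {
	h, err := decodeHook([]byte(sampleHook))
	if err != nil {
		panic(err)
	}
	fmt.Println(h.Id, h.Config.Url, h.Events, h.UpdatedAt.Year())
}
```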

171

bean/thirdbean/gitee.go

Normal file

@ -0,0 +1,171 @@

|

||||

package thirdbean

|

||||

|

||||

import "time"

|

||||

|

||||

type ResultGiteeCreateHooks struct {

|

||||

Id int `json:"id"`

|

||||

Url string `json:"url"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

Password string `json:"password"`

|

||||

ProjectId int `json:"project_id"`

|

||||

Result string `json:"result"`

|

||||

ResultCode interface{} `json:"result_code"`

|

||||

PushEvents bool `json:"push_events"`

|

||||

TagPushEvents bool `json:"tag_push_events"`

|

||||

IssuesEvents bool `json:"issues_events"`

|

||||

NoteEvents bool `json:"note_events"`

|

||||

MergeRequestsEvents bool `json:"merge_requests_events"`

|

||||

}

|

||||

|

||||

type ResultGiteeRepo struct {

|

||||

Id int64 `json:"id"`

|

||||

FullName string `json:"full_name"`

|

||||

HumanName string `json:"human_name"`

|

||||

Url string `json:"url"`

|

||||

Namespace struct {

|

||||

Id int `json:"id"`

|

||||

Type string `json:"type"`

|

||||

Name string `json:"name"`

|

||||

Path string `json:"path"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

} `json:"namespace"`

|

||||

Path string `json:"path"`

|

||||

Name string `json:"name"`

|

||||

Owner struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

Name string `json:"name"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

} `json:"owner"`

|

||||

Description string `json:"description"`

|

||||

Private bool `json:"private"`

|

||||

Public bool `json:"public"`

|

||||

Internal bool `json:"internal"`

|

||||

Fork bool `json:"fork"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

SshUrl string `json:"ssh_url"`

|

||||

ForksUrl string `json:"forks_url"`

|

||||

KeysUrl string `json:"keys_url"`

|

||||

CollaboratorsUrl string `json:"collaborators_url"`

|

||||

HooksUrl string `json:"hooks_url"`

|

||||

BranchesUrl string `json:"branches_url"`

|

||||

TagsUrl string `json:"tags_url"`

|

||||

BlobsUrl string `json:"blobs_url"`

|

||||

StargazersUrl string `json:"stargazers_url"`

|

||||

ContributorsUrl string `json:"contributors_url"`

|

||||

CommitsUrl string `json:"commits_url"`

|

||||

CommentsUrl string `json:"comments_url"`

|

||||

IssueCommentUrl string `json:"issue_comment_url"`

|

||||

IssuesUrl string `json:"issues_url"`

|

||||

PullsUrl string `json:"pulls_url"`

|

||||

MilestonesUrl string `json:"milestones_url"`

|

||||

NotificationsUrl string `json:"notifications_url"`

|

||||

LabelsUrl string `json:"labels_url"`

|

||||

ReleasesUrl string `json:"releases_url"`

|

||||

Recommend bool `json:"recommend"`

|

||||

Homepage interface{} `json:"homepage"`

|

||||

Language string `json:"language"`

|

||||

ForksCount int `json:"forks_count"`

|

||||

StargazersCount int `json:"stargazers_count"`

|

||||

WatchersCount int `json:"watchers_count"`

|

||||

DefaultBranch string `json:"default_branch"`

|

||||

OpenIssuesCount int `json:"open_issues_count"`

|

||||

HasIssues bool `json:"has_issues"`

|

||||

HasWiki bool `json:"has_wiki"`

|

||||

IssueComment bool `json:"issue_comment"`

|

||||

CanComment bool `json:"can_comment"`

|

||||

PullRequestsEnabled bool `json:"pull_requests_enabled"`

|

||||

HasPage bool `json:"has_page"`

|

||||

License string `json:"license"`

|

||||

Outsourced bool `json:"outsourced"`

|

||||

ProjectCreator string `json:"project_creator"`

|

||||

Members []string `json:"members"`

|

||||

PushedAt time.Time `json:"pushed_at"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

UpdatedAt time.Time `json:"updated_at"`

|

||||

Parent interface{} `json:"parent"`

|

||||

Paas interface{} `json:"paas"`

|

||||

Stared bool `json:"stared"`

|

||||

Watched bool `json:"watched"`

|

||||

Permission struct {

|

||||

Pull bool `json:"pull"`

|

||||

Push bool `json:"push"`

|

||||

Admin bool `json:"admin"`

|

||||

} `json:"permission"`

|

||||

Relation string `json:"relation"`

|

||||

AssigneesNumber int `json:"assignees_number"`

|

||||

TestersNumber int `json:"testers_number"`

|

||||

Assignees []struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

Name string `json:"name"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

} `json:"assignees"`

|

||||

Testers []struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

Name string `json:"name"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

} `json:"testers"`

|

||||

}

|

||||

|

||||

type ResultGiteeRepoBranch struct {

|

||||

Name string `json:"name"`

|

||||

Commit struct {

|

||||

Sha string `json:"sha"`

|

||||

Url string `json:"url"`

|

||||

} `json:"commit"`

|

||||

Protected bool `json:"protected"`

|

||||

ProtectionUrl string `json:"protection_url"`

|

||||

}

|

||||

|

||||

type ResultGetGiteeHook struct {

|

||||

Id int `json:"id"`

|

||||

Url string `json:"url"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

Password string `json:"password"`

|

||||

ProjectId int `json:"project_id"`

|

||||

Result string `json:"result"`

|

||||

ResultCode int `json:"result_code"`

|

||||

PushEvents bool `json:"push_events"`

|

||||

TagPushEvents bool `json:"tag_push_events"`

|

||||

IssuesEvents bool `json:"issues_events"`

|

||||

NoteEvents bool `json:"note_events"`

|

||||

MergeRequestsEvents bool `json:"merge_requests_events"`

|

||||

}

|

||||

171

bean/thirdbean/giteepremium.go

Normal file

@ -0,0 +1,171 @@

|

||||

package thirdbean

|

||||

|

||||

import "time"

|

||||

|

||||

type ResultGiteePremiumCreateHooks struct {

|

||||

Id int `json:"id"`

|

||||

Url string `json:"url"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

Password string `json:"password"`

|

||||

ProjectId int `json:"project_id"`

|

||||

Result string `json:"result"`

|

||||

ResultCode interface{} `json:"result_code"`

|

||||

PushEvents bool `json:"push_events"`

|

||||

TagPushEvents bool `json:"tag_push_events"`

|

||||

IssuesEvents bool `json:"issues_events"`

|

||||

NoteEvents bool `json:"note_events"`

|

||||

MergeRequestsEvents bool `json:"merge_requests_events"`

|

||||

}

|

||||

|

||||

type ResultGiteePremiumRepo struct {

|

||||

Id int64 `json:"id"`

|

||||

FullName string `json:"full_name"`

|

||||

HumanName string `json:"human_name"`

|

||||

Url string `json:"url"`

|

||||

Namespace struct {

|

||||

Id int `json:"id"`

|

||||

Type string `json:"type"`

|

||||

Name string `json:"name"`

|

||||

Path string `json:"path"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

} `json:"namespace"`

|

||||

Path string `json:"path"`

|

||||

Name string `json:"name"`

|

||||

Owner struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

Name string `json:"name"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

} `json:"owner"`

|

||||

Description string `json:"description"`

|

||||

Private bool `json:"private"`

|

||||

Public bool `json:"public"`

|

||||

Internal bool `json:"internal"`

|

||||

Fork bool `json:"fork"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

SshUrl string `json:"ssh_url"`

|

||||

ForksUrl string `json:"forks_url"`

|

||||

KeysUrl string `json:"keys_url"`

|

||||

CollaboratorsUrl string `json:"collaborators_url"`

|

||||

HooksUrl string `json:"hooks_url"`

|

||||

BranchesUrl string `json:"branches_url"`

|

||||

TagsUrl string `json:"tags_url"`

|

||||

BlobsUrl string `json:"blobs_url"`

|

||||

StargazersUrl string `json:"stargazers_url"`

|

||||

ContributorsUrl string `json:"contributors_url"`

|

||||

CommitsUrl string `json:"commits_url"`

|

||||

CommentsUrl string `json:"comments_url"`

|

||||

IssueCommentUrl string `json:"issue_comment_url"`

|

||||

IssuesUrl string `json:"issues_url"`

|

||||

PullsUrl string `json:"pulls_url"`

|

||||

MilestonesUrl string `json:"milestones_url"`

|

||||

NotificationsUrl string `json:"notifications_url"`

|

||||

LabelsUrl string `json:"labels_url"`

|

||||

ReleasesUrl string `json:"releases_url"`

|

||||

Recommend bool `json:"recommend"`

|

||||

Homepage interface{} `json:"homepage"`

|

||||

Language string `json:"language"`

|

||||

ForksCount int `json:"forks_count"`

|

||||

StargazersCount int `json:"stargazers_count"`

|

||||

WatchersCount int `json:"watchers_count"`

|

||||

DefaultBranch string `json:"default_branch"`

|

||||

OpenIssuesCount int `json:"open_issues_count"`

|

||||

HasIssues bool `json:"has_issues"`

|

||||

HasWiki bool `json:"has_wiki"`

|

||||

IssueComment bool `json:"issue_comment"`

|

||||

CanComment bool `json:"can_comment"`

|

||||

PullRequestsEnabled bool `json:"pull_requests_enabled"`

|

||||

HasPage bool `json:"has_page"`

|

||||

License string `json:"license"`

|

||||

Outsourced bool `json:"outsourced"`

|

||||

ProjectCreator string `json:"project_creator"`

|

||||

Members []string `json:"members"`

|

||||

PushedAt time.Time `json:"pushed_at"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

UpdatedAt time.Time `json:"updated_at"`

|

||||

Parent interface{} `json:"parent"`

|

||||

Paas interface{} `json:"paas"`

|

||||

Stared bool `json:"stared"`

|

||||

Watched bool `json:"watched"`

|

||||

Permission struct {

|

||||

Pull bool `json:"pull"`

|

||||

Push bool `json:"push"`

|

||||

Admin bool `json:"admin"`

|

||||

} `json:"permission"`

|

||||

Relation string `json:"relation"`

|

||||

AssigneesNumber int `json:"assignees_number"`

|

||||

TestersNumber int `json:"testers_number"`

|

||||

Assignees []struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

Name string `json:"name"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

} `json:"assignees"`

|

||||

Testers []struct {

|

||||

Id int `json:"id"`

|

||||

Login string `json:"login"`

|

||||

Name string `json:"name"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

} `json:"testers"`

|

||||

}

|

||||

|

||||

type ResultGiteePremiumRepoBranch struct {

|

||||

Name string `json:"name"`

|

||||

Commit struct {

|

||||

Sha string `json:"sha"`

|

||||

Url string `json:"url"`

|

||||

} `json:"commit"`

|

||||

Protected bool `json:"protected"`

|

||||

ProtectionUrl string `json:"protection_url"`

|

||||

}

|

||||

|

||||

type ResultGetGiteePremiumHook struct {

|

||||

Id int `json:"id"`

|

||||

Url string `json:"url"`

|

||||

CreatedAt time.Time `json:"created_at"`

|

||||

Password string `json:"password"`

|

||||

ProjectId int `json:"project_id"`

|

||||

Result string `json:"result"`

|

||||

ResultCode int `json:"result_code"`

|

||||

PushEvents bool `json:"push_events"`

|

||||

TagPushEvents bool `json:"tag_push_events"`

|

||||

IssuesEvents bool `json:"issues_events"`

|

||||

NoteEvents bool `json:"note_events"`

|

||||

MergeRequestsEvents bool `json:"merge_requests_events"`

|

||||

}

|

||||

148

bean/thirdbean/github.go

Normal file

@ -0,0 +1,148 @@

|

||||

package thirdbean

|

||||

|

||||

import "time"

|

||||

|

||||

type ResultGithubRepo struct {

|

||||

Id int `json:"id"`

|

||||

NodeId string `json:"node_id"`

|

||||

Name string `json:"name"`

|

||||

FullName string `json:"full_name"`

|

||||

Private bool `json:"private"`

|

||||

Owner struct {

|

||||

Login string `json:"login"`

|

||||

Id int `json:"id"`

|

||||

NodeId string `json:"node_id"`

|

||||

AvatarUrl string `json:"avatar_url"`

|

||||

GravatarId string `json:"gravatar_id"`

|

||||

Url string `json:"url"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

FollowersUrl string `json:"followers_url"`

|

||||

FollowingUrl string `json:"following_url"`

|

||||

GistsUrl string `json:"gists_url"`

|

||||

StarredUrl string `json:"starred_url"`

|

||||

SubscriptionsUrl string `json:"subscriptions_url"`

|

||||

OrganizationsUrl string `json:"organizations_url"`

|

||||

ReposUrl string `json:"repos_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

ReceivedEventsUrl string `json:"received_events_url"`

|

||||

Type string `json:"type"`

|

||||

SiteAdmin bool `json:"site_admin"`

|

||||

} `json:"owner"`

|

||||

HtmlUrl string `json:"html_url"`

|

||||

Description *string `json:"description"`

|

||||

Fork bool `json:"fork"`

|

||||

Url string `json:"url"`

|

||||

ForksUrl string `json:"forks_url"`

|

||||

KeysUrl string `json:"keys_url"`

|

||||

CollaboratorsUrl string `json:"collaborators_url"`

|

||||

TeamsUrl string `json:"teams_url"`

|

||||

HooksUrl string `json:"hooks_url"`

|

||||

IssueEventsUrl string `json:"issue_events_url"`

|

||||

EventsUrl string `json:"events_url"`

|

||||

AssigneesUrl string `json:"assignees_url"`

|

||||

BranchesUrl string `json:"branches_url"`

|

||||

TagsUrl string `json:"tags_url"`

|

||||

BlobsUrl string `json:"blobs_url"`

|

||||

GitTagsUrl string `json:"git_tags_url"`

|

||||

GitRefsUrl string `json:"git_refs_url"`

|

||||

TreesUrl string `json:"trees_url"`

|

||||

StatusesUrl string `json:"statuses_url"`

|

||||